03.31.2009 15:29

transporting AIS messages over the internet

Back in January, I talked about

AIS Encoding with JSON and

AIS Binary Message queuing format for the USCG

Fetcher-Formatter. Here is a supper simple format for AIS messages

that is more like the JSON format that is a lot simpler than the

Maritime Domain Awareness (MDA) Data Sharing Community of Interest

(DS COI) by Tollefson et al.: Data

Management Working Group Spiral 2 Vocabulary Handbook Version 2.0.2

Final Release 15 November 2007 [PDF]

Being that this is XML, you can drop in other things with easy. For example, you could include the binary 011011 representation, AIS channel (A or B), a BBM or ABM command to transmit the message, the VDM message, any of the other information that was in the Fetcher-Formatter queuing metadata (bounding box, priority), or what ever else you need.

Being that this is XML, you can drop in other things with easy. For example, you could include the binary 011011 representation, AIS channel (A or B), a BBM or ABM command to transmit the message, the VDM message, any of the other information that was in the Fetcher-Formatter queuing metadata (bounding box, priority), or what ever else you need.

<aismsg msgnum="1" timestampt="1238525354.12">

<field name="MessageId">1</field>

<field name="RepeatIndicator">1</field>

<field name="NavigationStatus">6</field>

<!-- and so forth... -->

</aismsg>

<!-- and -->

<aismsg msgnum="8" timestampt="1238525354.12">

<field name="MessageId">8</field>

<field name="RepeatIndicator">1</field>

<field name="UserID"></field> <!-- MMSI - use the same name as in Pos 1-3 messages -->

<field name="Spare">0</field>

<field name="Notice Description" alt="Restricted Area: Entry prohibited">35</field> <!-- alt attribute modeled off of HTML -->

<field name="start_day">12</field>

<field name="start_hour">16</field>

<field name="start_min">0</field>

<field name="duration">4000</field> <!-- optional units attribute? -->

<field name="message_identifier">643</field>

<!-- start of first sub area ... do you include grouping tags? -->

<group id="0" name="circle">

<field name="area_shape" alt="circle">0</field>

<field name="scale_factor">.5</field>

<field name="lontitude">-50.21234</field>

<field name="latitude">10.3457</field>

<field name="radius">143</field> <!-- Is this the scaled for the VDL or the actual number for display? -->

<field name="spare">0</field>

</group>

<!-- and so forth... -->

</aismsg>

03.31.2009 11:21

Cod in an open ocean fish cage

Here is a video by Roland showing the

fish swimming in a UNH fish cage in the open ocean.

03.31.2009 11:00

NH Fish Cages

John at gCaptain posted this nice

article about the NH/ME area:

Aquapod Subsea Fish Farms - Bizarre Maritime Technology, which

talks about Ocean Farm Technologies open ocean aquaculture project

here in the NH/ME area.

I've been meaning to upload this video by Roland for quite a while...

I've been meaning to upload this video by Roland for quite a while...

03.30.2009 17:02

Agressive uses of maps - what is a safe use of a map?

Issues that apply to Coast Pilot /

Sailing Directions guides and marine charts too... Making

Digital Maps More Current and Accurate [Directions Magazine]

... Some interactive solutions have made it to market. One example is the EU-backed ActMAP project which developed mechanisms for online, incremental updates of digital map databases using wireless technology. The system helps to shorten the time span between updates significantly. Nevertheless, there is still room for improvement in terms of detecting map errors, changes in the real world, or monitoring highly dynamic events like local warnings automatically. Addressing these ever-changing realities requires a radical rethink of the applied methodology. ... The assumption behind ActMAP and other systems is that the supplier is responsible for all updates. However, this approach overlooks a valuable source of information: the motorists who use the navigation systems themselves. If anomalies found by road users could be automatically sent to the supplier, this could be used as a valuable supplementary source of information to iron out irregularities in maps and beam them back to the users. ...

03.30.2009 12:06

Network Time Protocol on Windows

Here are some notes on installing the

client side of the Network Time Protocol (NTP) on MS Windows.

Windows comes with the W32Time service, but it has repeatedly been

shown to be unhelpful. Wikipedia on

NTP:

I work in the continental US, so I selected "United States of Amera". I also added the local UNH time server wilmot. Note - the UNH time server is overly great (stratum 2) and why is it not called time, ntp, or even bigben? I turned on iburst mode. Is that a good thing?

I setup ntp to run as the user "ntp".

You can restart the service from the start menu. Or you can install the free Meinberg NTP Time Server Monitor.

Run the Time Server Monitor:

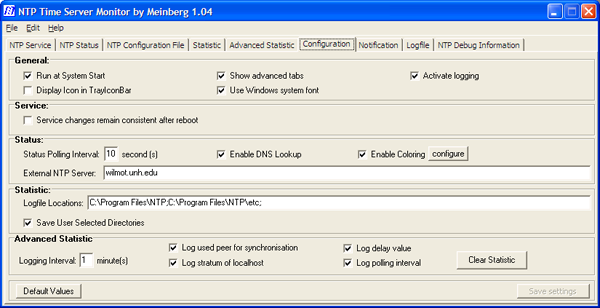

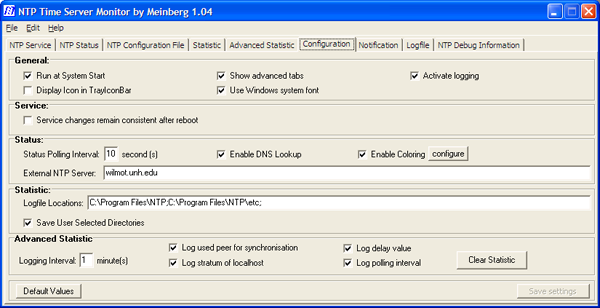

Go to the configuration tab and select:

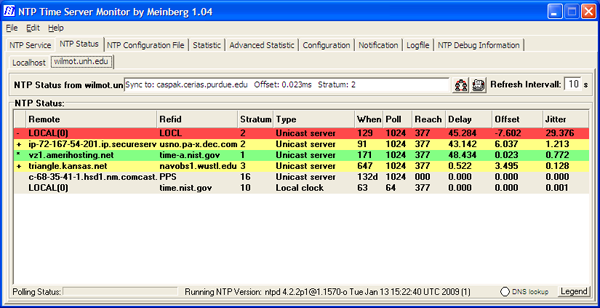

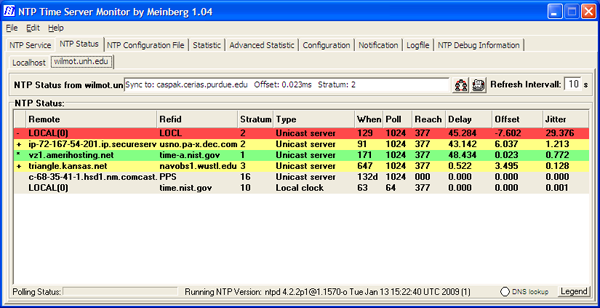

Now you can check out how the time servers are doing.

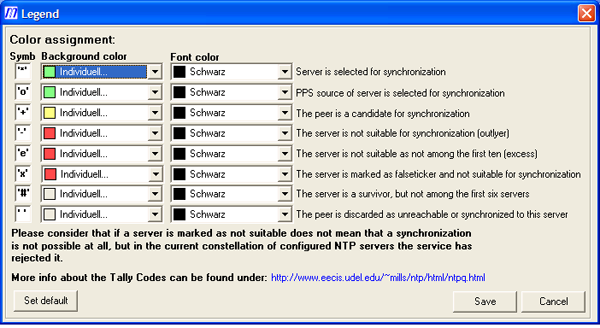

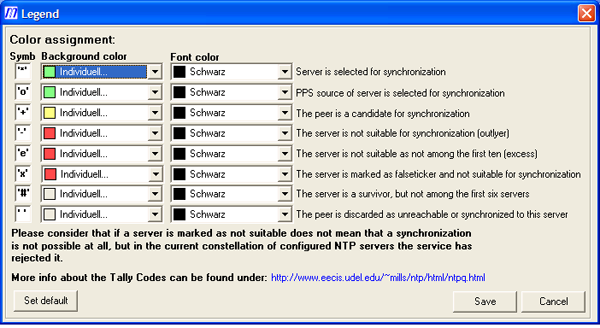

Here is the legend to go with the above display:

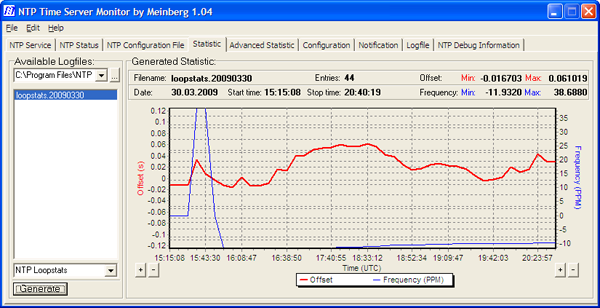

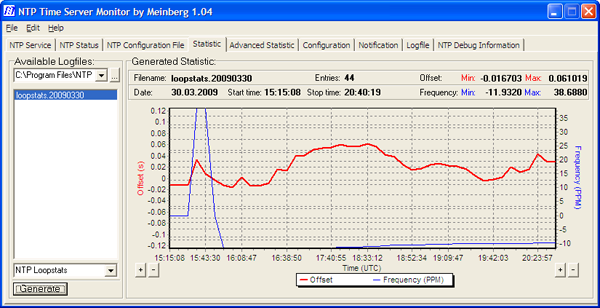

Enable statistics generation so you can see how the system is performing. It needs to run for a while before the graph is interesting.

Here is the final ntp.conf file that I generated. I added a couple extra ntp pool servers.

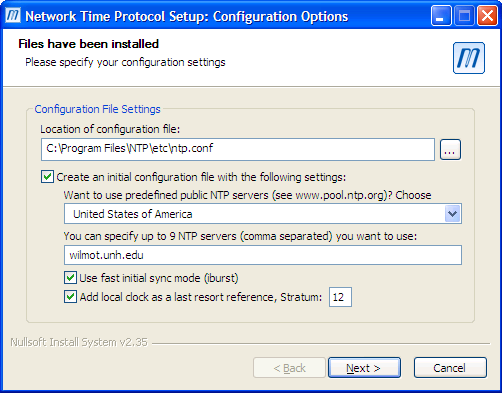

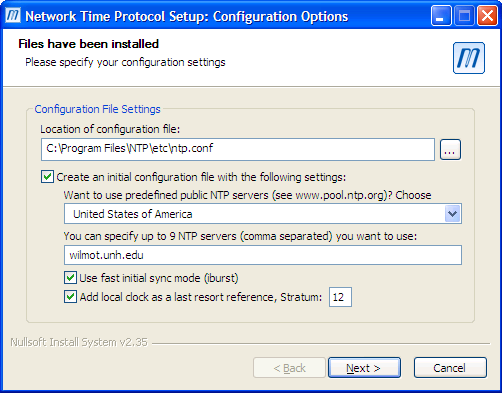

However, the Windows Time Service cannot maintain the system time more accurately than about a 1-2 second range. Microsoft "[does] not guarantee and [does] not support the accuracy of the W32Time service between nodes on a network. The W32Time service is not a full-featured NTP solution that meets time-sensitive application needs."First install Meinberg's free NTP. When the configuration part starts, select the correct pool for your location.

I work in the continental US, so I selected "United States of Amera". I also added the local UNH time server wilmot. Note - the UNH time server is overly great (stratum 2) and why is it not called time, ntp, or even bigben? I turned on iburst mode. Is that a good thing?

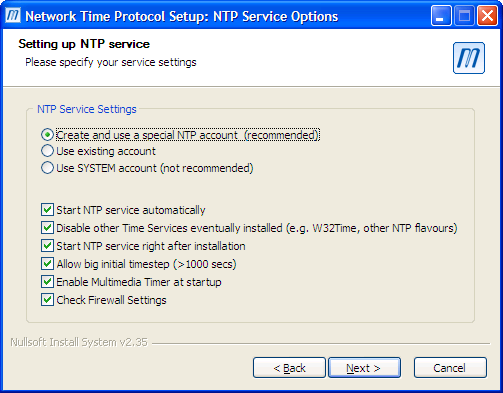

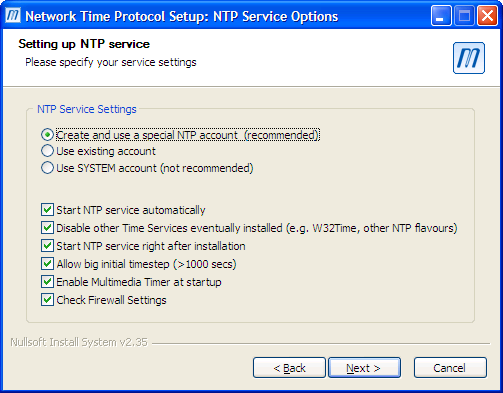

I setup ntp to run as the user "ntp".

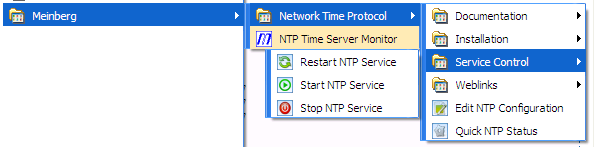

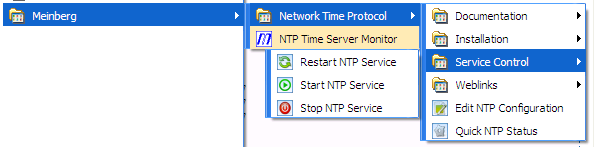

You can restart the service from the start menu. Or you can install the free Meinberg NTP Time Server Monitor.

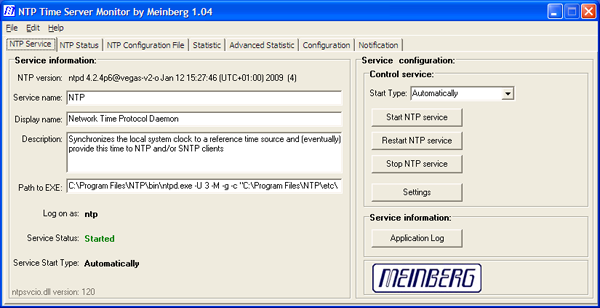

Run the Time Server Monitor:

Go to the configuration tab and select:

Show advanced tabs Activate logging Enable DNS Lookup External NTP Server: wilmot.unh.edu (if you are at UNH)

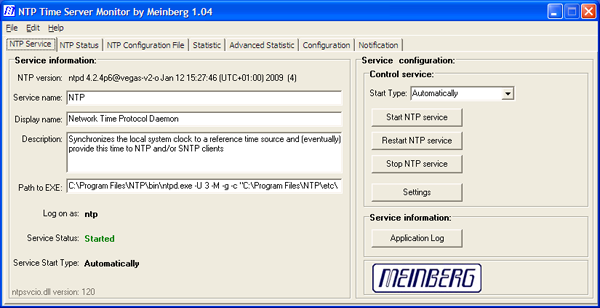

Now you can check out how the time servers are doing.

Here is the legend to go with the above display:

Enable statistics generation so you can see how the system is performing. It needs to run for a while before the graph is interesting.

Here is the final ntp.conf file that I generated. I added a couple extra ntp pool servers.

server 127.127.1.0 # but it operates at a high stratum level to let the clients know and force them to # use any other timesource they may have. fudge 127.127.1.0 stratum 12 # Use a NTP server from the ntp pool project (see http://www.pool.ntp.org) # Please note that you need at least four different servers to be at least protected against # one falseticker. If you only rely on internet time, it is highly recommended to add # additional servers here. # The 'iburst' keyword speeds up initial synchronization, please check the documentation for more details! server 0.us.pool.ntp.org iburst server 1.us.pool.ntp.org iburst server 2.us.pool.ntp.org iburst # Extra servers added by Kurt server 3.us.pool.ntp.org minpoll 12 maxpoll 17 server 0.north-america.pool.ntp.org minpoll 12 maxpoll 17 server 1.north-america.pool.ntp.org minpoll 12 maxpoll 17 server 2.north-america.pool.ntp.org minpoll 12 maxpoll 17 # Use specific NTP servers server wilmot.unh.edu iburst ########################################################### #Section insert by NTP Time Server Monitor 3/30/2009 enable stats statsdir "C:\Program Files\NTP\etc\" statistics loopstats ###########################################################If you are considering setting up your own Stratum 1 NTP server, Meinberg and Symmetricom make NTP appliances - 1U rack mount systems that you just plug in the GPS antenna.

03.29.2009 13:43

UNH Greenhouse

Friday and Saturday were the UNH

Greenhouse open house. I've been going the last three years. I

picked up thyme and dill plants and ran into my master gardner

neighbor Genie.

03.29.2009 13:22

Zones of Confidence on charts (CATZOCs)

The Case of the Unwatched ZOC - Vessel Groundings Due To

Navigational Chart Errors [gCaptain]

... Says Couttie: "If you go through an an area which is poorly surveyed or unsurveyed then, regardless of what's shown on the chart, you really have to think whether you should be there at all. If you do, then it's important to check the chart source data diagram or Category Zones of Confidence, CATZOCs, to see just how reliable the chart, whether paper or ECDIS, actually is. ECDIS isn't necessarily going to be more accurate than paper." ...The podcast is here: The Case Of The Unwatched ZOCs [Bob Couttie]

03.29.2009 06:59

ONR and infrared marine mammal detection

Infra-red detectin of Marine Mammals from ship-based platforms -

ONRBAA09-015

The Office of Naval Research (ONR) is interested in receiving proposals for research into the use of infra-red (IR) band technology as a potential means of detecting marine mammals. There are significant concerns about the effects of anthropogenic sound (e.g., use of military sonar, seismic research and exploration, marine construction, shipping) on marine mammals. As a means of minimizing interactions between anthropogenic acoustic activities and marine mammals, human observers are often employed to detect marine mammals and initiate mitigation strategies when mammals are observed sufficiently close to active sound sources. Visual observations, the current standard, are limited in their effective use to daytime, comparatively clear atmospheric conditions and relatively low sea states. Additionally, alternative detection methods currently being used to detect marine mammals include 1) passive acoustics, which is dependent on vocalizing animals; and 2) active acoustics, which has been reserved for research, releases energy into the water and must be assessed for possible adverse effects. Because all these methods have their shortcomings, additional alternative and/or complementary marine mammal detection methods are being developed, including use of radar and IR. Our goal is to evaluate the efficacy of IR imagery (still and/or video) for near-real-time detection of marine mammals from ship-based platforms. Ultimately IR detection might be optimized by use of high-incident angle views of the sea surface via unmanned aerial vehicles (or other platforms). However, to minimize initial investment costs while we evaluate the potential of this detection technology we will not here invest in studies using a costly autonomous flying platform. Rather we seek to invest in research using ship-based, more oblique-angle techniques coupled with visual observation of marine mammals, which offers validation opportunities. At the discretion of the proposer, ship-based measurements might utilize tethered balloons, kites or tall masts to increase incident viewing angles of the imagery system, and might be preceded by land (cliff)-based evaluations that could cost effectively develop and test the proposed technology. Our interest is in developing and testing a small, light weight, low power IR imagery (still and/or video) system that may be adaptable to diverse platforms. Therefore the focus of this BAA is on uncooled (microbolometric) IR systems, and not cooled systems. ...See also: Marine Mammals & Bioogical Oceanography [ONR]

03.27.2009 07:00

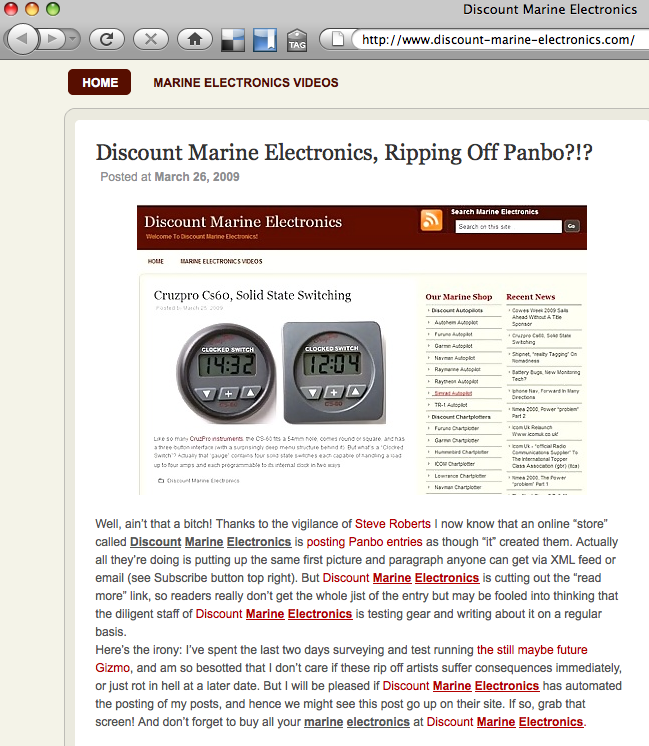

Discount Marine Electronics - Ripping off Panbo

In the catagory of "Things not to

do": Ben Ellison was tolk that Discount Marine Electronics ripped

off an article from his

Panbo site. Turns out that they automated the rip off process.

Here is Ben's comments about Discount Marine Electronics embedded

in their web page. Remember not to put links to sites like this.

Just write out the URLs so that we are not helping their search

engine ranking.

The web works better when people play nice.

The web works better when people play nice.

03.27.2009 06:28

django database integrity

In

Django Tip: Controlling Admin (Or Other) Access [Malcolm

Tredinnick], he says this:

A Warning About Database Changes If you're making any database changes, particularly using django-admin.py, make sure to make them using the settings file that includes all of your applications. The reason for this is that Django sanitises the Content Type data when it loads any new tables, removing entries that don't belong to applications it knows about. So if changes were made to the content types using a settings file that didn't contain the admin application in the INSTALLED_APPS list, the admin's content type table entry would be removed. This wouldn't be a good idea.This doesn't sound good. Does it imply that I had better have models for all tables in my database? I often use django admin to look at part of a database. I haven't tried modifying a database that has add additional tables that django is not aware of, but I had better watch out (unless I'm not understanding what he means).

03.26.2009 06:39

New django applications - The Washington Times

I got to listen to This

Week In Django (TWID) 55 yesterday while driving for work.

There was an exciting segment in the Community Catchup

section:

Washington Times releases open source projects

Washington Times releases open source projects

django-projectmgr: a source code repository manager and issue tracking

application. It allows threaded discussion of bugs and features,

separation of bugs, features and tasks and easy creation of source

code repositories for either public or private consumption.

django-supertagging: an interface to the Open Calais service for

semantic markup.

django-massmedia: a multi-media management application. It can create

galleries with multiple media types within, allows mass uploads

with an archive file, and has a plugin for fckeditor for embedding

the objects from a rich text editor.

django-clickpass: an interface to the clickpass.com OpenID service

that allows users to create an account with a Google, Yahoo!,

Facebook, Hotmail or AIM account.

03.25.2009 23:11

Kings Point training simulator

Today, I sat in with a NOAA team

while they were training on a bridge simulator at Kings

Point.

Working through all the different issues provided by the instructor controlling the simulator:

The shift hand over...

Paper charts...

Working through all the different issues provided by the instructor controlling the simulator:

The shift hand over...

Paper charts...

03.23.2009 09:23

The beginning of spring

Just today, I saw bulbs finally

pushing through the cold ground.

03.22.2009 16:26

Congressional Report - Navy sonar and whales

Whales and Sonar:

Environmental Exemptions for the Navy's Mid-Frequency Active Sonar

Training [opencrs] February 18, 2009

Mid-frequency active (MFA) sonar emits pulses of sound from an underwater transmitter to help determine the size, distance, and speed of objects. The sound waves bounce off objects and reflect back to underwater acoustic receivers as an echo. MFA sonar has been used since World War II, and the Navy indicates it is the only reliable way to track submarines, especially more recently designed submarines that operate more quietly, making them more difficult to detect. Scientists have asserted that sonar may harm certain marine mammals under certain conditions, especially beaked whales. Depending on the exposure, they believe that sonar may damage the ears of the mammals, causing hemorrhaging and/or disorientation. The Navy agrees that the sonar may harm some marine mammals, but says it has taken protective measures so that animals are not harmed. MFA training must comply with a variety of environmental laws, unless an exemption is granted by the appropriate authority. Marine mammals are protected under the Marine Mammal Protection Act (MMPA) and some under the Endangered Species Act (ESA). The training program must also comply with the National Environmental Policy Act (NEPA), and in some cases the Coastal Zone Management Act (CZMA). Each of these laws provides some exemption for certain federal actions. The Navy has invoked all of the exemptions to continue its sonar training exercises. Litigation challenging the MFA training off the coast of Southern California ended with a November 2008 U.S. Supreme Court decision. The Supreme Court said that the lower court had improperly favored the possibility of injuring marine animals over the importance of military readiness. The Supreme Courts ruling allowed the training to continue without the limitations imposed on it by other courts.

03.21.2009 09:23

Blender

Worth a read of the whole article:

Blending in - CGenie inerview Blender - Interview Ton

Rossendaal.

... Ton: Blender's much disputed UI hasn't been an unlucky accident coded by programmers but was developed almost fifteen years ago in-house by artists and designers to serve as their daily production tool. This explains why it's non-standard, using concepts based on advanced control over data (integrated linkable database) and the fastest workflow possible (subdivision based UI layouts, non-blocking and non-modal workflow). Blender uses concepts similar to one of the world's leading UI designers Jef Raskin. When I read his book "Humane Interface" five years ago, it felt like coming home. His son Aza Raskin has continued his work with great results. He's now lead UI design at Mozilla and heads up his own startup. If you check on his work on humanized.com you can see concepts we're much aligned with. ... What Blender especially failed in was mostly a result of evolutionary dilution, coming from some laziness and a pragmatic 'feature over functionality' approach. Making good user interfaces is not easy, and people don't always have time to work on that. Moreover, because of our fast development, it's not always clear to new users whether they are trying to use something that's brilliant or is still half working, or simply broken by design! For me this mostly explains the tough initial learning curve for Blender, or why people just give up on it. ...

03.20.2009 23:00

New Paleomag book by Lisa Tauxe

Just out:

Web Edition of: Essentials of Paleomagnetism: Web Edition 1.0 (March 18, 2009) by Lisa Tauxe with contributions from: Subir K. Banerjee, Robert F. Butler and Rob van der Voo

Web Edition of: Essentials of Paleomagnetism: Web Edition 1.0 (March 18, 2009) by Lisa Tauxe with contributions from: Subir K. Banerjee, Robert F. Butler and Rob van der Voo

03.20.2009 10:58

Unfriendly government messages

This is the first time I've seen something like this. The web page it came from doesn't have any access control on it (other than showing this as a giant alert box). Bold added by me. This comes when you click on the historical data request from here:

NAIS Data Feed and Data Request Terms and Conditions Disclaimer

Warning!! Title 18 USC Section 1030 Unauthorized access is prohibited by Title 18 USC Section 1030. Unauthorized access may also be a violation of other Federal Law or government policy, and may result in criminal and/or administrative penalties. Users shall not access other users' system files without proper authority. Absence of access controls IS NOT auhtorization for access! USCG information systems and related equipment are intended for communication, transmission, processing and storage of U.S. Government information. These systems and equipment are subject to monitoring to ensure proper functioning, to protect against improper or unathorized use or access, and to verify the presence or performance of applicable security features or procedures, and for other like purposes. Such security monitoring may result in the acquisition, recording, and analysis of all data being communicated, transmitted, processed or stored in this system by a user. If security monitoring reveals evidence of possible criminal activity, such evidence may be provided to law enforcement personnel. Use of this system constitutes consent to such security monitoring. Title 5 USC Section 552A This System contains information protected under the provisions of the Privacy Act of 1974 (5 U.S.C. 552a). Any privacy information displayed on the screen or printed must be protected from unauthorized disclousre. Employees who violate privacy safeguards may be subject ot disciplinary actions, a fine of up to $5,000 per disclosure, or both. Information in this system is provided for use in official Coast Guard business only. Requests for information from this system from persons or organizations outside the U.S. Coast Guard should be forwarded to Commandant (G-MRI-1) or Commandant (G-OCC). Warning!!

03.19.2009 12:19

GEOS 3.1.0 released

From: Paul Ramsey

Subject: [geos-devel] GEOS 3.1.0

To: GEOS Development List

PostGIS Users Discussion

The GEOS team is pleased to announce that GEOS 3.1.0 has been pushed

out the door, cold, wet, trembling and looking for love.

http://download.osgeo.org/geos/geos-3.1.0.tar.bz2

Version 3.1.0 includes a number of improvements over the 3.0 version:

- PreparedGeometry operations for very fast predicate testing.

- Intersects()

- Covers()

- CoveredBy()

- ContainsProperly()

- Easier builds under MSVC and OpenSolaris

- Thread-safe CAPI option

- IsValidReason added to CAPI

- CascadedUnion operation for fast unions of geometry sets

- Single-sided buffering operation added

- Numerous bug fixes.

http://trac.osgeo.org/geos/query?status=closed&milestone=3.1.0&order=priority

Users of the upcoming PostGIS 1.4 will find that compiling against

GEOS 3.1 provides them access to some substantial performance

improvements and some extra functions.

03.18.2009 17:08

Dave Sandwell's compensation from Google

I was tald that Dave Sandwell

received compensation from Google for the bathymetery they used. I

looked up the source that was quoted. He was not compensated. I

have licenses Google Earth Pro and Sketchup Pro from Google that I

don't pay for, as do many other educators.

Seabed images create waves [nature news]

Seabed images create waves [nature news]

... So far, all that Sandwell has received in return for his work is a year's worth of Google Earth Pro software - normal cost US$400 - for his classes. He is now applying to a Google foundation for funds to support a postdoctoral research position in oceanography.

03.18.2009 10:37

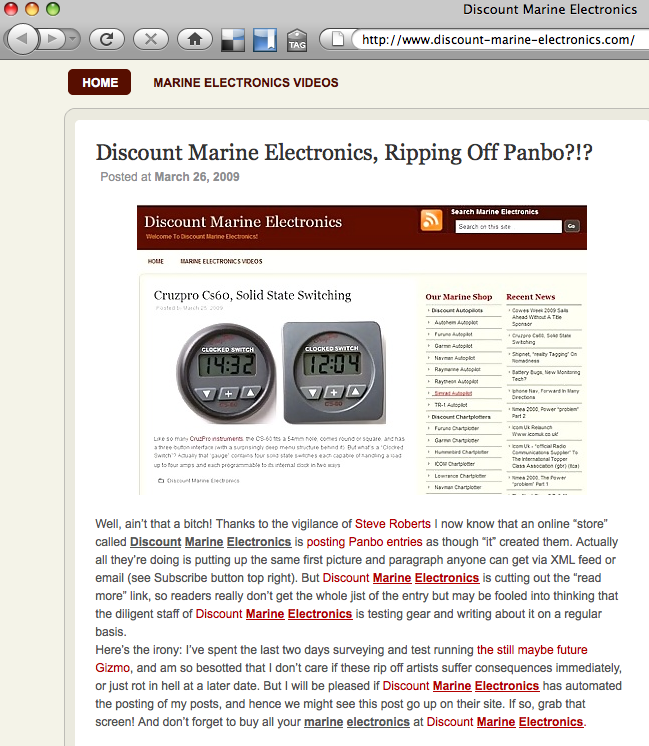

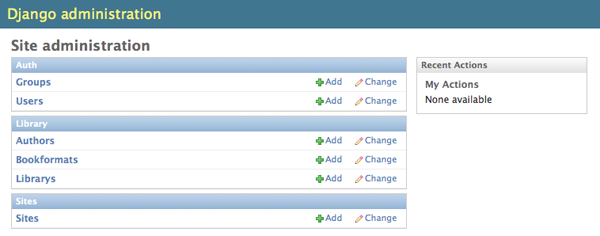

Django 1.0.2 with mysql library

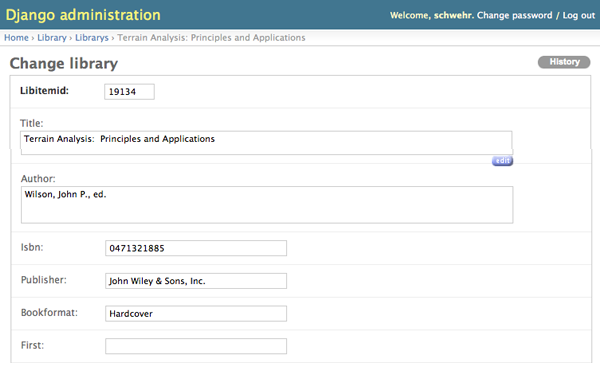

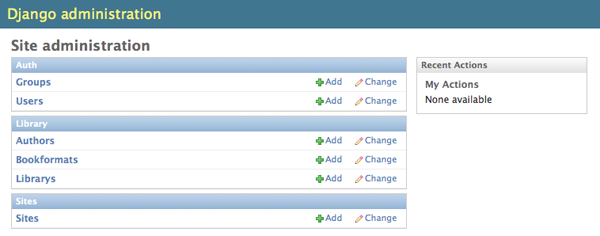

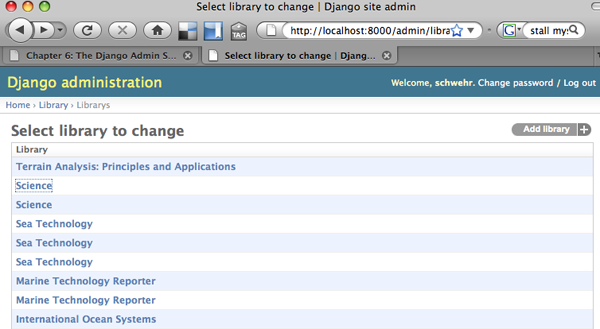

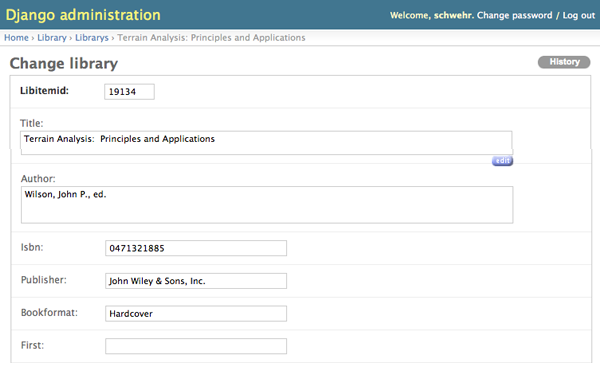

Here is taking a quick look at the

mysql database with Django. This is using the library database dump

from before. I used the draft version of the 2.0 Django Book. Turns out this

database isn't very interested. All but the primary keys are

varchar, so I can't show cool foreign key stuff. You will need fink

unstable for this.

Note that the database shown here is not how I would design things. It needs foreign keys for authors and others, date types for dates, and a few other things before it would be something I would want to use.

And for reference, here are the CREATE statements for the tables grabbed from the dump.

Note that the database shown here is not how I would design things. It needs foreign keys for authors and others, date types for dates, and a few other things before it would be something I would want to use.

% fink install django-py26 mysql-python-py26Now start working on the django project:

% django-admin.py startproject ccom_library % cd ccom_library % python manage.py startapp library % echo $USERNow edit settings.py and change these lines:

DATABASE_ENGINE = 'mysql' DATABASE_NAME = 'library' DATABASE_USER = 'YourShortUsernameHere' # what $USER returnedTo the INSTALLED_APPS section, add these lines:

'django.contrib.admin',

'ccom_library.library',

Now edit urls.py so that it looks like this:

from django.conf.urls.defaults import *

from django.contrib import admin

admin.autodiscover()

urlpatterns = patterns('',

(r'^admin/(.*)', admin.site.root),

)

Now get the models from the database.

% python manage.py inspectdb > library/models.pyIn my case, I had to edit the resulting library/models.py to remove some old crusty tables. Here is the models.py:

# This is an auto-generated Django model module.

# You'll have to do the following manually to clean this up:

# * Rearrange models' order

# * Make sure each model has one field with primary_key=True

# Feel free to rename the models, but don't rename db_table values or field names.

#

# Also note: You'll have to insert the output of 'django-admin.py sqlcustom [appname]'

# into your database.

from django.db import models

class Author(models.Model):

authorid = models.IntegerField(primary_key=True)

fullname = models.CharField(max_length=765, blank=True)

firstname = models.CharField(max_length=150, blank=True)

middlename = models.CharField(max_length=150, blank=True)

lastname = models.CharField(max_length=150, blank=True)

surname = models.CharField(max_length=15, blank=True)

organization = models.CharField(max_length=300, blank=True)

class Meta:

db_table = u'author'

class Bookformat(models.Model):

bfid = models.IntegerField(primary_key=True)

bookformat = models.CharField(max_length=135, blank=True)

class Meta:

db_table = u'bookformat'

class Library(models.Model):

libitemid = models.IntegerField(primary_key=True)

title = models.TextField(blank=True)

author = models.TextField(blank=True)

isbn = models.CharField(max_length=192, blank=True)

publisher = models.CharField(max_length=384, blank=True)

bookformat = models.CharField(max_length=135, blank=True)

first = models.CharField(max_length=6, blank=True)

signed = models.CharField(max_length=6, blank=True)

pubdate = models.CharField(max_length=96, blank=True)

pubplace = models.CharField(max_length=192, blank=True)

rating = models.CharField(max_length=36, blank=True)

condition = models.CharField(max_length=36, blank=True)

category = models.CharField(max_length=384, blank=True)

value = models.CharField(max_length=18, blank=True)

copies = models.IntegerField(null=True, blank=True)

read = models.CharField(max_length=6, blank=True)

print_field = models.CharField(max_length=6, db_column='print', blank=True) # Field renamed because it was a Python reserved word. Field name made lowercase.

htmlexport = models.CharField(max_length=6, blank=True)

comment = models.TextField(blank=True)

dateentered = models.CharField(max_length=36, blank=True)

source = models.CharField(max_length=384, blank=True)

cart = models.CharField(max_length=6, blank=True)

ordered = models.CharField(max_length=6, blank=True)

lccn = models.CharField(max_length=36, blank=True)

dewey = models.CharField(max_length=36, blank=True)

copyrightdate = models.CharField(max_length=96, blank=True)

valuedate = models.CharField(max_length=72, blank=True)

location = models.CharField(max_length=135, blank=True)

pages = models.IntegerField(null=True, blank=True)

series = models.TextField(blank=True)

keywords = models.TextField(blank=True)

dimensions = models.CharField(max_length=192, blank=True)

author2 = models.CharField(max_length=384, blank=True)

author3 = models.CharField(max_length=384, blank=True)

author4 = models.CharField(max_length=384, blank=True)

author5 = models.CharField(max_length=384, blank=True)

author6 = models.CharField(max_length=384, blank=True)

isbn13 = models.CharField(max_length=96, blank=True)

ccomlocid = models.CharField(max_length=30, blank=True)

issn = models.CharField(max_length=60, blank=True)

class Meta:

db_table = u'library'

def __unicode__(self):

return self.title

Then you have to create a library/admin.py to tell the admin app

which models to bring in.

from django.contrib import admin from ccom_library.library.models import Author, Bookformat, Library admin.site.register(Author) admin.site.register(Bookformat) admin.site.register(Library)

And for reference, here are the CREATE statements for the tables grabbed from the dump.

CREATE TABLE `author` ( `authorid` int(10) unsigned NOT NULL auto_increment, `fullname` varchar(255) default NULL, `firstname` varchar(50) default NULL, `middlename` varchar(50) default NULL, `lastname` varchar(50) default NULL, `surname` varchar(5) default NULL, `organization` varchar(100) default NULL, PRIMARY KEY (`authorid`) ) ENGINE=InnoDB AUTO_INCREMENT=754 DEFAULT CHARSET=utf8; CREATE TABLE `bookformat` ( `bfid` int(10) unsigned NOT NULL auto_increment, `bookformat` varchar(45) default NULL, PRIMARY KEY (`bfid`) ) ENGINE=InnoDB AUTO_INCREMENT=75 DEFAULT CHARSET=utf8; CREATE TABLE `library` ( `libitemid` int(10) unsigned NOT NULL auto_increment, `title` text, `author` text, `isbn` varchar(64) default NULL, `publisher` varchar(128) default NULL, `bookformat` varchar(45) default NULL, `first` char(2) default NULL, `signed` char(2) default NULL, `pubdate` varchar(32) default NULL, `pubplace` varchar(64) default NULL, `rating` varchar(12) default NULL, `condition` varchar(12) default NULL, `category` varchar(128) default NULL, `value` varchar(6) default NULL, `copies` int(10) unsigned default NULL, `read` char(2) default NULL, `print` char(2) default NULL, `htmlexport` char(2) default NULL, `comment` text, `dateentered` varchar(12) default NULL, `source` varchar(128) default NULL, `cart` char(2) default NULL, `ordered` char(2) default NULL, `lccn` varchar(12) default NULL, `dewey` varchar(12) default NULL, `copyrightdate` varchar(32) default NULL, `valuedate` varchar(24) default NULL, `location` varchar(45) default NULL, `pages` int(10) unsigned default NULL, `series` text, `keywords` text, `dimensions` varchar(64) default NULL, `author2` varchar(128) default NULL, `author3` varchar(128) default NULL, `author4` varchar(128) default NULL, `author5` varchar(128) default NULL, `author6` varchar(128) default NULL, `isbn13` varchar(32) default NULL, `ccomlocid` varchar(10) default NULL, `issn` varchar(20) default NULL, PRIMARY KEY (`libitemid`) ) ENGINE=InnoDB AUTO_INCREMENT=19135 DEFAULT CHARSET=latin1;

03.18.2009 09:20

mysql kickstart in fink

Based on

notes by Rob Braswell...

First install mysql:

First install mysql:

% fink install mysql-client mysqlor

% fink install mysql-ssl-client mysql-sslNow get the server running and make it start at boot.

% sudo daemonic enable mysql % sudo systemstarter start daemonic-mysql % sudo mysqladmin -u root password myrootpasswordCreate a user account for yourself. If you use the nopasswd account, this db will be wide open to trouble.

% echo "CREATE USER $USER;" | mysql -u root -p # For unsecure no pass account... % echo "CREATE USER $USER IDENTIFIED BY 'myuserpassword';" | mysql -u root -p # Better version with passwordGive your account access to all the databases.

% echo "GRANT ALL PRIVILEGES ON *.* TO $USER@\"localhost\";" | mysql -u root -p # No passwd version % echo "GRANT ALL PRIVILEGES ON *.* TO $USER@\"localhost\" IDENTIFIED BY 'myuserpassword';" | mysql -u root -p # Password versionMake a database to which you can add tables. I'm playing with a library dump.

% echo "CREATE DATABASE library;" | mysql % echo "CREATE DATABASE library;" | mysql -p # password versionNow I insert the dump Les made into the local mysql database:

% mysql library < library-dump.sqlWarning: running mysql with no password for a user is only advisable in a sandbox for testing. If you do that in an environment where others can get anywhere near your database, you will be asking for trouble.

03.17.2009 13:36

Right Whales in the NYTimes

Thanks to Janice for this

article:

The Fall and Rise of the Right Whale

The Fall and Rise of the Right Whale

... The researchers, from the National Oceanic and Atmospheric Administration and the Georgia Wildlife Trust, are part of an intense effort to monitor North Atlantic right whales, one of the most endangered, and closely watched, species on earth. As a database check eventually disclosed, the whale was Diablo, who was born in these waters eight years ago. Her calf - at a guess 2 weeks old and a bouncing 12 feet and 2 tons - was the 38th born this year, a record that would be surpassed just weeks later, with a report from NOAA on the birth of a 39th calf. The previous record was 31, set in 2001. ...

03.16.2009 15:35

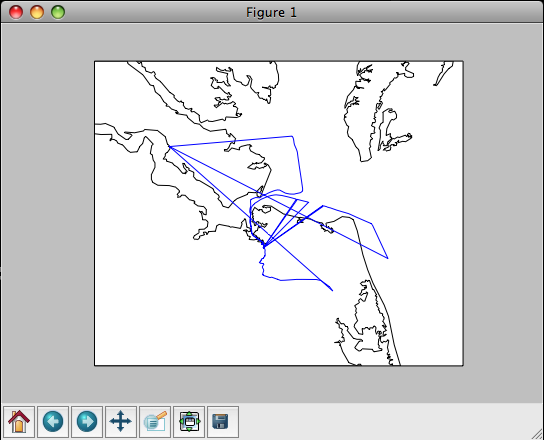

matplotlib basemap with SqlSoup/SqlAlchemy

Here is my first take on trying to

better handle plotting of data from my big database. On a mac,

install matplotlib and basemap (make sure you enable the unstable

tree in fink... run "fink configure"). [Thanks to Dale for the

suggested clarification]

% fink install matplotlib-basemap-data-py25First import needed modules and open the database.

% ipython2.5 -pylab -pprint

from sqlalchemy.ext.sqlsoup import SqlSoup

from sqlalchemy import or_, and_, desc

from sqlalchemy import select, func

db = SqlSoup('sqlite:///ais.db3')

Here is the first style of trying to fetch the data from all

vessels with the invalid MMSI of 0.

p = db.position.filter(db.position.UserID==0).all()

len(p)

Out: 1878

p[0]

Out: MappedPosition(key=54,MessageID=1,RepeatIndicator=0,UserID=0,

NavigationStatus=0,ROT=-128,SOG=Decimal("0"),PositionAccuracy=0,

longitude=Decimal("-76.2985983333"),latitude=Decimal("36.8414283333"),

COG=Decimal("274.7"),TrueHeading=511,TimeStamp=36,RegionalReserved=0,

Spare=0,RAIM=True,state_syncstate=0,state_slottimeout=1,state_slotoffset=0,

cg_r=None,cg_sec=1222819224,

cg_timestamp=datetime.datetime(2008, 10, 1, 0, 0, 24))

x = np.array([float(report.longitude) for report in p])

y = np.array([float(report.latitude) for report in p])

That brings in way too much of the database when I just need x and

y.

pos = db.position._table s = select([pos.c.longitude,pos.c.latitude],from_obj=[pos],whereclause=(pos.c.UserID==0)) track = np.array([ (float(p[0]),float(p[1])) for p in s.execute().fetchall()]) print track [[-76.29859833 36.84142833] [-76.29859333 36.84143833] [-76.29858667 36.84143667] ..., [-76.60878 37.16643833] [-76.60878167 37.16644 ] [-76.60878667 37.16643333]]That's better, but I really need it split into x and y for matplotlib.

s_x = select([pos.c.longitude],from_obj=[pos],whereclause=(pos.c.UserID==0)) s_y = select([pos.c.latitude],from_obj=[pos],whereclause=(pos.c.UserID==0)) track_x = np.array([ float(p[0]) for p in s_x.execute().fetchall()]) track_y = np.array([ float(p[0]) for p in s_y.execute().fetchall()])Now it is time to plot it!

from mpl_toolkits.basemap import Basemap xmin = track_x.min() xmax = track_x.max() ymin = track_y.min() ymax = track_y.max() # The best coastline is the 'h' version. map = Basemap(llcrnrlon=xmin-.25, llcrnrlat=ymin-.25, urcrnrlon=xmax+0.25, urcrnrlat=ymax+.25, resolution='h') map.plot(track_x,track_y) map.drawcoastlines()

03.16.2009 07:51

GPSD and AIS

Eric S. Raymond (ESR) has been

working adding AIS message decoding to GPSD. This is a huge

contribution to the AIS community. My noaadata code is a research

type development as I explored what an AIS message definition XML

dialect might look like. Thanks Eric!

He just sent this email out to the gpsd-dev and gpsd-user mailing lists:

He just sent this email out to the gpsd-dev and gpsd-user mailing lists:

The rtcmdecode utility, formerly a filter that turned RTCM2 and RTCM3 packets into readable text dumps, has been renammed "gpsdecode" and now has tha ability to un-armor and text-dump common AIVDM sentences as well. Specifically, it can crack types 1, 2, 3, 4, 5, 9, and 18. I'd love to support others as well but I don't have regression tests for them. Tip of the hat to Kurt Schwehr of New Hampshire University for supplying test data and text dumps for these. The GPSD references page now includes a detailed description of how to interpret AIDVM, This is significant because public documentation on this has previously been quite poor. Many of the relevant standards are locked up behaind paywalls. Even if they were not, it turns out that assembling a complete picture of how both layers of the protocol work (not to mentional regional variants like St. Lawrence Seaway's AIS extensions like the Coast Guards's PAWSS) is a significant effort. The gpsd daemon itself does not yet do anything with AIVDM packets, though the analysis code in its driver is fully working and it could do anything we want with the data. I could reduce AIS message types 1, 3, 4, 9, and 18 (everything but 5) to O reports, but I'm far from sure this would actually be useful. I think it would make more sense to extend the GPSD protocol so it can report tagged locations (and associated data) from different sensors in the vicinity, including both GPSes and AIS receivers. I'll have more to say about this in an upcoming request for discussion on the protocol redesign.

03.15.2009 10:30

SQLite cheat sheet / tutorial

I don't know if this cheat sheet will

be helpful to others or not, but it will help me spend less time

looking up SQL syntax. I hope that the sections for table one and

table two will be accessable to beginners. This is a different

style than other tutorials I have seen on the web. It is done as a

big SQL file that you can either run at once or paste in line by

line. It only runs on SQLite, but I will try to alter it to work

with PostgreSQL and explain the differences in another post. SQLite

does some quirky things, but it is a good learning environment. For

example, it allows field types that do not make sense:

You can run these commands in one of three ways. The most educational is way is to just run this command:

The above two examples use an "in memory" database, so when you exit, everything goes away. If you want to have a database be saved to disk, do one of these two:

CREATE TABLE foo (a ASDF);ASDF is not a valid SQL type, but the command works.

You can run these commands in one of three ways. The most educational is way is to just run this command:

% sqlite3This will put you into sqlite's shell. You can paste in commands from the text and when you are done, quit it like this:

sqlite> .quitIf you want to quickly see what everything does, try this:

% sqlite3 < sqlite-tutorial.sql | lessThis will let you see both the commands being entered and the results.

The above two examples use an "in memory" database, so when you exit, everything goes away. If you want to have a database be saved to disk, do one of these two:

% sqlite3 demo.db3 % sqlite3 demo.db3 < sqlite-tutorial.sqlAnd now for the big tutorial...

-- This is an SQLite tutorial / cheat sheet by Kurt Schwehr, 2009

-- Conventions:

-- All SQL keyworks are written UPPERCASE, other stuff lowercase

-- All SQLite commands start with a "."

-- Two dashes start a comment in SQL

/* Another style of comments */

----------------------------------------

-- Setup SQLite for the tutorial

----------------------------------------

-- Ask SQLite for instructions

.help

-- Repeat every command for this tutorial

.echo ON

-- Ask SQLite to print column names

.header ON

-- Switch the column separator from '|' to tabs

.separator "\t"

-- Make SQLite print "Null" rather than blanks for an empty field

.nullvalue "Null"

-- Dump the settings before we go on

.show

----------------------------------------

-- Additional resources

----------------------------------------

-- This tutorial misses many important database concepts (e.g. JOIN and SUBQUERIES)

-- http://swc.scipy.org/lec/db.html

----------------------------------------

-- Basics

----------------------------------------

-- All SQL command end with a semicolon

-- Commands to query the database engine start with SELECT.

-- First hello world:

SELECT "hello world";

-- What is the current date and time?

SELECT CURRENT_TIMESTAMP;

-- Simple math

SELECT 1+2;

-- Calling a function

SELECT ROUND(9.8);

-- Multiple columns are separated by ","

SELECT "col1", "col2";

-- Label columns with AS

SELECT 1 AS first, 2 AS second;

----------------------------------------

-- Time to make a database table!

----------------------------------------

-- Create a first table containing two numbers per row

-- The table name follewd by pairs of <variable name>, <type> in ()

CREATE TABLE tbl_one (a INTEGER, b INTEGER, c INTEGER);

-- List all tables

.tables

-- List the CREATE commands for all tables

.schema

-- Add some data to the table

INSERT INTO tbl_one (a,b,c) VALUES (1,2,3);

INSERT INTO tbl_one (a,b,c) VALUES (4,5,6);

INSERT INTO tbl_one (a,b,c) VALUES (4,17,19);

INSERT INTO tbl_one (a,b,c) VALUES (42,8,72);

-- Show all of the data in the tables

SELECT * FROM tbl_one;

-- Show a smaller number of rows from the table

SELECT * FROM tbl_one LIMIT 2;

-- Only show a subset of the rows

SELECT a,c FROM tbl_one;

-- Fetch only rows that meet one criteria

SELECT * FROM tbl_one WHERE a=4;

-- Fetch only rows that meet multiple criteria

SELECT * FROM tbl_one WHERE a=4 AND b=5;

-- Fetch only rows that meet one of multiple criteria

SELECT * FROM tbl_one WHERE a=1 OR b=47;

-- Greater and less than comparisons work too

SELECT * FROM tbl_one WHERE b >= 8;

-- List all that are not equal too

SELECT * FROM tbl_one WHERE a <> 4;

-- Or list from a set of items

SELECT c,b FROM tbl_one WHERE c IN (3, 19, 72);

-- Order the output base on a field / column

SELECT * FROM tbl_one ORDER BY b;

-- Flip the sense of the order

SELECT * FROM tbl_one ORDER BY b DESC;

-- How many rows in the table?

SELECT COUNT(*) FROM tbl_one;

-- List the unique values for column a

SELECT DISTINCT(a) FROM tbl_one;

-- Count the distinct values for column a

SELECT COUNT(DISTINCT(a)) FROM tbl_one;

-- Get the minumum, maximum, average, and total values for column a

SELECT MIN(a),MAX(a), AVG(a), TOTAL(a) FROM tbl_one;

-- Remove a rows from the table

DELETE FROM tbl_one WHERE a=4;

-- See that we have gotten rid of two rows

SELECT * FROM tbl_one;

-- Adding more data. You can leave out the column/field names

INSERT INTO tbl_one VALUES (101,102,103);

-- Delete a table

DROP TABLE tbl_one;

----------------------------------------

-- Time to try more SQL data types

-- There are many more types. For example, see:

-- http://www.postgresql.org/docs/current/interactive/datatype.html

-- WARNING: SQLite plays fast and loose with data types

-- We will pretend this is not so for the next section

-- Make a table with all the different types in it

-- We will step through the types

CREATE TABLE tbl_two (

an_int INTEGER,

a_key INTEGER PRIMARY KEY,

a_char CHAR,

a_name VARCHAR(25),

a_text TEXT,

a_dec DECIMAL(4,1),

a_real REAL,

a_bool BOOLEAN,

a_bit BIT,

a_stamp TIMESTAMP,

a_xml XML

);

-- If we add a row that is just an integer, all the fields

-- except a_key will be empty (called 'Null').

-- If you don't specify the primary key value, it will be

-- created for you.

INSERT INTO tbl_two (a_name) VALUES (42);

-- See what you just added. There will be a number of 'Null' columns

SELECT * FROM tbl_two;

-- A single character

INSERT INTO tbl_two (a_char) VALUES ('z');

-- VARCHAR are length limited strings.

-- The number in () is the maximum number of characters

INSERT INTO tbl_two (a_name) VALUES ('up to 25 characters');

-- TEXT can be any amount of characters

INSERT INTO tbl_two (a_text) VALUES ('yada yada...');

-- DECIMAL specifies an number with a certain number of decimal digits

INSERT INTO tbl_two (a_dec) VALUES

-- Booleans can be true or false

INSERT INTO tbl_two (a_bool) VALUES ('TRUE');

INSERT INTO tbl_two (a_bool) VALUES ('FALSE');

-- Adding bits

INSERT INTO tbl_two (a_bit) VALUES (0);

INSERT INTO tbl_two (a_bit) VALUES (1);

-- Adding timestamps... right now

INSERT INTO tbl_two (a_stamp) VALUES (CURRENT_TIMESTAMP);

-- Date, time, or both. Date is year-month-day.

INSERT INTO tbl_two (a_stamp) VALUES ('2009-03-15');

INSERT INTO tbl_two (a_stamp) VALUES ('9:02:15');

INSERT INTO tbl_two (a_stamp) VALUES ('2009-03-15 9:02:15');

-- Using other timestamps

INSERT INTO tbl_two (a_stamp) VALUES (datetime(1092941466, 'unixepoch'));

INSERT INTO tbl_two (a_stamp) VALUES (strftime('%Y-%m-%d %H:%M', '2009-03-15 14:02'));

-- XML markup

INSERT INTO tbl_two (a_xml) VALUES ('<start>Text<tag2>text2</tag2></start>');

-- Now you can search by columns being set (aka not Null)

SELECT a_key,a_stamp from tbl_two WHERE a_stamp NOT NULL;

----------------------------------------

-- Putting constraints on the database

-- and linking tables

-- NOT NULL forces a column to always have a value.

-- Inserting without a value causes an error

-- UNIQUE enforces that you can not make entries that are the same

-- DEFAULT sets the value if you don't give one

-- REFERENCES adds a foreign key pointing to another table

CREATE TABLE tbl_three (

a_key INTEGER PRIMARY KEY,

a_val REAL NOT NULL,

a_code INTEGER UNIQUE,

a_str TEXT DEFAULT 'I donno',

tbl_two INTEGER,

FOREIGN KEY(tbl_two) REFERENCES tbl_two(a_key)

);

-- a_key and a_str are automatically given values

INSERT INTO tbl_three (a_val,a_code,tbl_two) VALUES (11.2,2,1);

INSERT INTO tbl_three (a_val,a_code,tbl_two) VALUES (12.9,4,3);

-- This would be an SQL error, as the table already has a code of 2

-- INSERT INTO tbl_three (a_code) VALUES (2);

-- This would be an SQL error, as it has no a_val (which is NOT NULL)

--INSERT INTO tbl_three (a_code) VALUES (3);

SELECT * FROM tbl_three;

-- We can now combine the two tables. This is called a 'join'

-- It is not required to have foreign keys for joins

SELECT tbl_three.a_code,tbl_two.* FROM tbl_two,tbl_three WHERE tbl_two.a_key == tbl_three.tbl_two;

----------------------------------------

-- Extras for speed

-- For more on speed, see:

-- http://web.utk.edu/~jplyon/sqlite/SQLite_optimization_FAQ.html

-- TRANSACTIONs let you group commands together. They all work or if there is

-- trouble, they all fail. Transactions are faster than one at a time too!

-- You can also write "COMMIT;" to end a transaction

BEGIN TRANSACTION;

CREATE TABLE tbl_four (

a_key INTEGER PRIMARY KEY,

sensor REAL,

log_time TIMESTAMP);

INSERT INTO tbl_four VALUES (0,3.14,'2009-03-15 14:02');

INSERT INTO tbl_four VALUES (1,3.00,'2009-03-15 14:03');

INSERT INTO tbl_four VALUES (2,2.74,'2009-03-15 14:04');

INSERT INTO tbl_four VALUES (3,2.87,'2009-03-15 14:05');

INSERT INTO tbl_four VALUES (4,3.04,'2009-03-15 14:06');

END TRANSACTION;

-- INDEXes are important if you are searching on a particular field

-- or column often. It might take a while to create the index,

-- but once it is there, searching on that column is faster

CREATE INDEX log_time_indx ON tbl_four(log_time);

-- INDEXes are automatically created for PRIMARY KEYs and UNIQUE fields

-- Run vacuum if you have done a lot of deleting of tables or rows

VACUUM;

----------------------------------------

-- Find out SQLite's internal state

----------------------------------------

SELECT * FROM sqlite_master;

03.14.2009 17:21

PAWSS software specifications?

Can anyone point me to the software

and network interface specifications for the USCG Ports And Waterways

Safety System (PAWSS)

The PAWSS Vessel Traffic Service (VTS) project is a national transportation system that collects, processes, and disseminates information on the marine operating environment and maritime vessel traffic in major U.S. ports and waterways. The PAWSS VTS mission is monitoring and assessing vessel movements within a Vessel Traffic Service Area, exchanging information regarding vessel movements with vessel and shore-based personnel, and providing advisories to vessel masters. Other Coast Guard missions are supported through the exchange of information with appropriate Coast Guard units. ... The VTS system at each port has a Vessel Traffic Center that receives vessel movement data from the Automatic Identification System (AIS), surveillance sensors, other sources, or directly from vessels. Meteorological and hydrographic data is also received at the vessel traffic center and disseminate as needed. A major goal of the PAWSS VTS is to use AIS and other technologies that enable information gathering and dissemination in ways that add no additional operational burden to the mariner. The VTS adds value, improves safety and efficiency, but is not laborious to vessel operators. ... The Coast Guard recognized the importance of AIS and has led the way on various international fronts for acceptance and adoption of this technology. The Coast Guard permits certain variations of AIS in VTS Prince William Sound and has conducted or participated in extensive operational tests of several Universal AIS (ITU-R M.1371) precursors. The most comprehensive test bed has been on the Lower Mississippi River.I don't have any details of the modified messages in Alaska or what is actually transmitted in Mississippi.

03.14.2009 07:44

Visit Mars in google Earth 5.0

I talked about Google Earth's Mars

mode when it first came out, but check out this quick tour of

features.

Google Earth Mars Update - Live Imagery from Another Planet

Google Earth Mars Update - Live Imagery from Another Planet

03.14.2009 06:36

open source bug reporting

One of the best parts of open source

are when people users of software contribute back. Anders Olsson

reported that AIS msg 9 sends knots, not tenths of knots. It makes

sense to be knots as planes and helocopters most of the time can

travel much faster ships.

<!-- AIS MSG 1-3 - Class A vessel position report -->

<field name="SOG" numberofbits="10" type="udecimal">

<description>Speed over ground</description>

<unavailable>102.3</unavailable>

<lookuptable>

<entry key="102.2">102.2 knots or higher</entry>

</lookuptable>

<units>knots</units>

<scale>10</scale>

<decimalplaces>1</decimalplaces>

</field>

<!-- AIS MSG 9 - SAR position report -->

<!-- This is in knots, not tenths like msgs 1-3 -->

<field name="SOG" numberofbits="10" type="udecimal">

<description>Speed over ground</description>

<unavailable>1023</unavailable>

<lookuptable>

<entry key="1022">1022 knots or higher</entry>

</lookuptable>

<units>knots</units>

</field>

This fix will be in the next release of noaadata.03.13.2009 09:35

SqlSoup in SqlAlchemy

Yesterday, Brian C. showed me how

easy it is to access SQLite data from inside of Matlab. Looking at

my code to access the same database in Python with sqlite3, made me

look for an easier way. I looked at SqlAlchemy and was starting to

wonder why it was such a pain to specify all these object

relationships. Then I saw SqlSoup. As

usual, I'm working with AIS data from msgs 1-3 and 5.

% sqlite ais.db3 .schema CREATE TABLE position ( key INTEGER PRIMARY KEY, MessageID INTEGER, RepeatIndicator INTEGER, UserID INTEGER, NavigationStatus INTEGER, ROT INTEGER, SOG DECIMAL(4,1), PositionAccuracy INTEGER, longitude DECIMAL(8,5), latitude DECIMAL(8,5), COG DECIMAL(4,1), TrueHeading INTEGER, TimeStamp INTEGER, RegionalReserved INTEGER, Spare INTEGER, RAIM BOOL, state_syncstate INTEGER, state_slottimeout INTEGER, state_slotoffset INTEGER, cg_r VARCHAR(15), cg_sec INTEGER, cg_timestamp TIMESTAMP ); CREATE TABLE shipdata ( key INTEGER PRIMARY KEY, MessageID INTEGER, RepeatIndicator INTEGER, UserID INTEGER, AISversion INTEGER, IMOnumber INTEGER, callsign VARCHAR(7), name VARCHAR(20), shipandcargo INTEGER, dimA INTEGER, dimB INTEGER, dimC INTEGER, dimD INTEGER, fixtype INTEGER, ETAminute INTEGER, ETAhour INTEGER, ETAday INTEGER, ETAmonth INTEGER, draught DECIMAL(3,1), destination VARCHAR(20), dte INTEGER, Spare INTEGER, cg_r VARCHAR(15), cg_sec INTEGER, cg_timestamp TIMESTAMP );Here is a quick example of looking at the data with SqlSoup:

% ipython

from sqlalchemy.ext.sqlsoup import SqlSoup

db = SqlSoup('sqlite:///ais.db3')

db.position.get(10)

Out: MappedPosition(key=10,MessageID=1,RepeatIndicator=0,UserID=366994950,

NavigationStatus=7,ROT=-128,SOG=Decimal('0.1'),PositionAccuracy=0,

longitude=Decimal('-76.0895316667'),latitude=Decimal('36.90689'),

COG=Decimal('87.8'),TrueHeading=511,TimeStamp=7,RegionalReserved=0,

Spare=0,RAIM=False,state_syncstate=0,state_slottimeout=3,

state_slotoffset=31,cg_r=None,cg_sec=1222819204,

cg_timestamp=datetime.datetime(2008, 10, 1, 0, 0, 4))

p = db.position.get(10)

p.longitude,p.latitude,p.UserID,p.cg_sec

Out[8]: (Decimal('-76.0895316667'), Decimal('36.90689'), 366994950, 1222819204)

I still have more to figure out with SqlSoup and it would be great

to make this work seamlessly with matplotlib. Also, Elixir looks very much like

the Django ORM.03.12.2009 08:11

AIS NMEA message lookup table

The first letter of the 6th field of

an AIS AIVDM NMEA string determines the message type (at least for

the the first scentence of a message). For single sentence (aka

line) messages, this makes using egrep really easy. The class A

position reports start with 1, 2, or 3. Here is a little bashism to

loop over log files and get the position records

I created the above table like this using my noaadata python package:

for file in log-20??-??-??; do

egrep 'AIVDM,1,1,[0-9]?,[AB],[1-3]' $file > ${file}.123

done

Here is my handy table of first characters:

Msg# 1st Char Message type === === ============ 0 0 Not used 1 1 Position report Class A 2 2 Position report Class A (Assigned schedule) 3 3 Position report Class A (Response to interrogation) 4 4 Base station report 5 5 Ship and voyage data 6 6 Addressed binary message (ABM) 7 7 Binary ack for addressed message 8 8 Binary broadcast message (BBM) 9 9 Airborn SAR position report 10 : Request UTC date 11 ; Current UTC date 12 < Addressed safety message 13 = Addressed safety ack 14 > Broadcast safety message 15 ? Interrogation request for a specific message 16 @ Assignment of report behavior 17 A DGNSS corrections from a basestation 18 B Position report Class B 19 C Position report Class B, Extended w/ static info 20 D Reserve slots for base stations 21 E Aid to Navigation (AtoN) status report 22 F Channel management 23 G Group assignment for reporting 24 H Static data report. A: Name, B: static data 25 I Binary message, single slot (addressed or broadcast) 26 J Multislot binary message with comm status (Addr or Brdcst)Things get a bit more tricky with multi-scentence messages. You have to normalize the ais messages to collapse the several lines into one very long line that violates the NMEA specification, which is in my opinion a very good thing to do.

% egrep -v 'AIVDM,1,1,[0-9]?,[AB]' file.ais | ais_normalize.py > file.ais.normNow apply grep like I showed above on the normalized file that only has the multi scentence messages.

I created the above table like this using my noaadata python package:

% ipython

import ais.binary

for i in range (30):

print i,ais.binary.encode[i]

03.11.2009 06:10

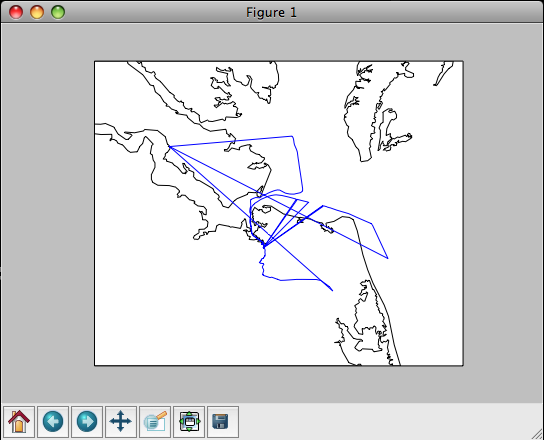

Cosco Busan USCG Marine Causualty Report

Coast Guard releases Cosco Busan marine casualty investigation

report [Coast Guard News]

Report of Investigation into the Allision of the Cosco Busan with the Delta tower of the San Francisco-Oakland Bay Bridge In San Francisco Bay on November 7, 2007 [pdf]

From the report, I can get the id that identifies this incident to the USCG:

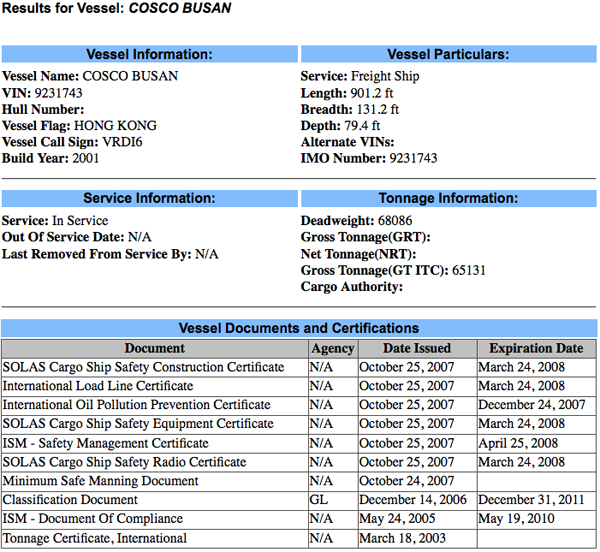

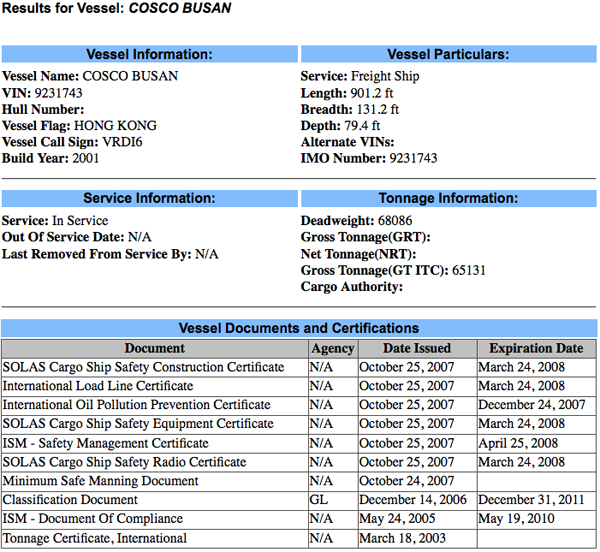

http://cgmix.uscg.mil/PSIX/PSIXDetails.aspx?VesselID=512403

This shows us that the Cosco Busan was recertified on Oct 25, 2007. Since I have the MISLE Activity number, I should be able to go to Incident Investigation Reports (IIR) Search and get the report. Entering 3095030 in the search field currently gives no results. Hopefully, the Cusco Busan report will end up in the IIR Search page soon.

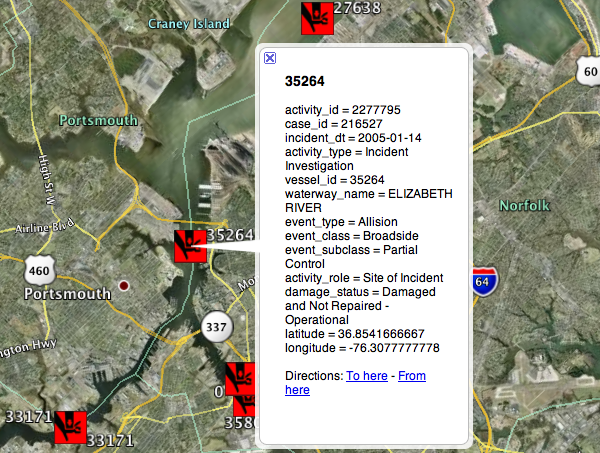

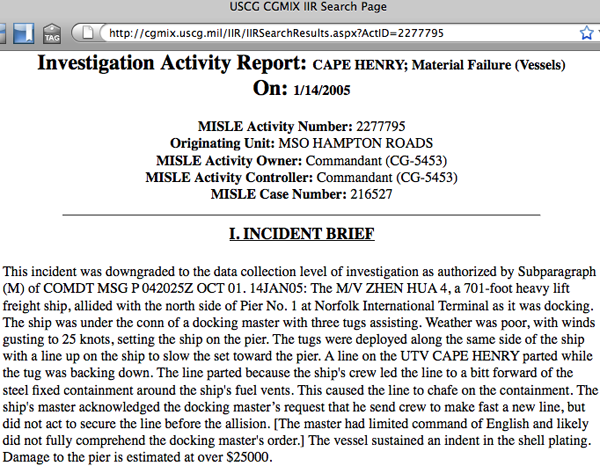

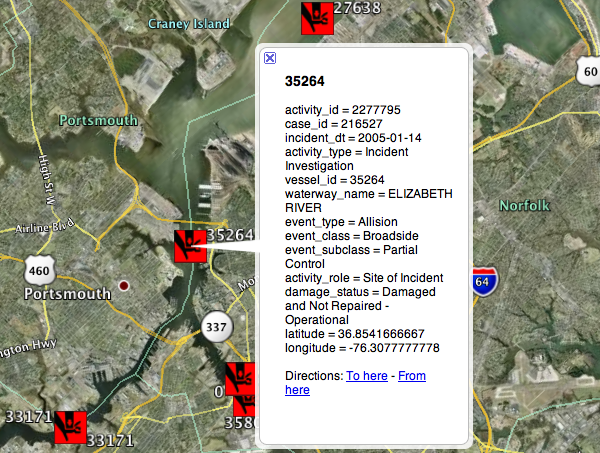

For illustration purposes, here is what another incident returns (this is the one I always seem to show as it is in Norfolk). Use activity id of 2277795:

There is an entry for each vessel and each involved organization, but these all point to the same report: ActID=2277795

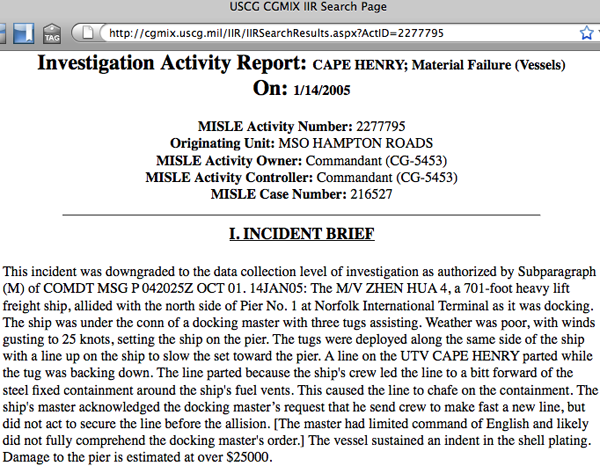

And in my Google Earth visualization of MISLE:

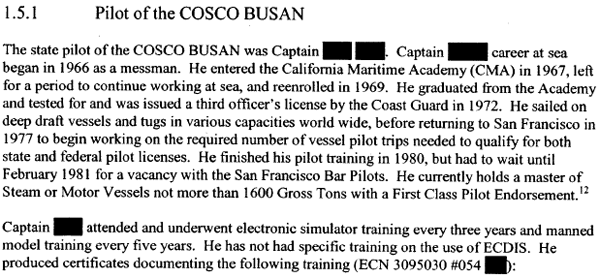

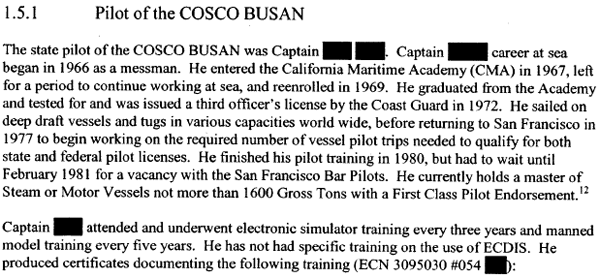

Interesting to see what they black out in the report. I think everybody knows the Pilot's name at this point.

Report of Investigation into the Allision of the Cosco Busan with the Delta tower of the San Francisco-Oakland Bay Bridge In San Francisco Bay on November 7, 2007 [pdf]

From the report, I can get the id that identifies this incident to the USCG:

MISLE Activity Number: 3095030First, lets see what the USCG says about the Vessel. Go to the Port State Information eXchange Search and put "Cosco Busan" in the Vessel Name field. This results in the following URL:

http://cgmix.uscg.mil/PSIX/PSIXDetails.aspx?VesselID=512403

This shows us that the Cosco Busan was recertified on Oct 25, 2007. Since I have the MISLE Activity number, I should be able to go to Incident Investigation Reports (IIR) Search and get the report. Entering 3095030 in the search field currently gives no results. Hopefully, the Cusco Busan report will end up in the IIR Search page soon.

For illustration purposes, here is what another incident returns (this is the one I always seem to show as it is in Norfolk). Use activity id of 2277795:

There is an entry for each vessel and each involved organization, but these all point to the same report: ActID=2277795

And in my Google Earth visualization of MISLE:

Interesting to see what they black out in the report. I think everybody knows the Pilot's name at this point.

03.10.2009 16:44

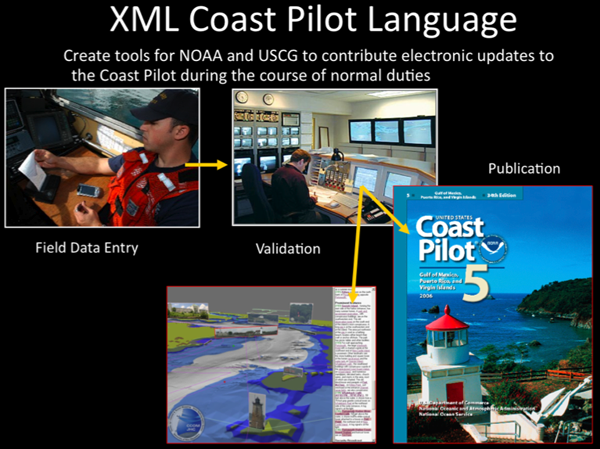

Managing distributed XML document editing for nautical publications

I am looking for comments and

discussion about this post. Please see my LiveJournal post

to make comments.

Warning: This is a thought experiment meant to generate conversation. It's in no way polished.

Recently, I posted how Matt and I think the XML markup might look for a geospatially enabled nautical publication. There are details missing including the geographic feature table. GeoZui was originally built with Tcl/Tk, so it was easiest for Matt to his familiarity with Tcl to build a quick table. There is an even bigger issue looming to the general problem. The traditional view of updating nautical publications seems to be very much like the traditional linear model of a book. There is a key author at the Hydrographic Organization (HO) and maybe some editors. This has worked effectively for the last century. We could take the current PDF and paper product and convert it to a new XML format and use traditional tools such as text editors and revision control (e.g. subversion [svn]) or a database and make it work.

However, I'd like to take a step back and imagine that we are starting from scratch with no pre-existing infrastructure. Throw away any constraints that particular countries might have and ask: What would be the best system that we could build now that would work for the next century? I know this is a bit ridiculous, but humor me for now.

A use case

NOTE: There should be many more use cases for this topic, but let's start with one.

What I think there needs to be is a traceable cycle of information. In the field or on the ship, anyone should be able to make a note and/or take a picture and submit an error, update, addition, or suggestion back to the HO. Let me give an example to illustrate how the cycle might work. A mariner enters a port and sees that there is a new pier on the south side of the harbor replacing an older pier with a different configuration. The person pulls out their mobile phone (iPhone, Android, CrackBerry, etc) and runs a submit Coast Pilot (CP) note application. The app uses the phone's builtin camera to grab a picture, tags the picture with the GPS position from the builtin GPS, then queues the update. Later, when the mobile phone detects the person is no longer moving, the phone puts up a notice that there is a submission waiting. The user takes a moment to write that the pier is different and hits the send button. The phone sends the update to a public website for review. Local mariners comment on the website that the pier is indeed different and give the name of the company working on the pier. The HO is notified of the update, reviews the extra comments, and spends a little bit of money for an up-to-date satellite orthophoto. The HO digitizes the pier and sends notices to the charting branch and the nautical publications. In the nautical publications group, an author calls up a list of all the sections of text that refer to the pier and makes any changes necessary after talking to the pier authorities. The text changes are tagged with all the information about the edit including references to the initial submission in the field. The changes are passed to the editor for review. Once these changes are accepted a new coast pilot is generated, the submission note is marked as finished, and all the systems that use the coast pilot see the update and adjust their products.

This above is just an instance of what is called a "use case" in software engineering terminology. Try not get hung up on the details such as construction permitting in some countries already mandates submitting changes to the HO. The goal is to think about what a workflow might look like and what tools could support the process. How can we work with a marked up text document that makes it easy for the people editing and allows us to see where any of the text came from (aka provenance)? I don't know what the answer is, but talking to people at SNPWG brought up a number of ideas. Here are some of the thoughts that I've had and I would really like to hear what others think.

What this post is not

This is in no way a complete run through what needs to be discussed!

Another important topic is managing image submissions. We would like to be able to avoid trouble with copyright or at least track who controls the rights. Georeferencing, feature identification and tagging will be important. I will try to talk more about this some other time.

These are in no particular order and I'm leaving out a number of commercial solutions (e.g. Visual Studio Team Solution. And I'm mixing types of technology.

The Usual Suspects

These are tools that have been used in the last 20 years to produce nautical publications. This is probably a fairly traditional style of creating publications.

Microsoft Word has been used to create all sorts of documents. It can do indexing, change tracking, comments, and all sorts of other fancy things. It tries to be everything to everybody, but trying to manage large teams using track changes is enough to make me go crazy. Plus, you never know what Microsoft will decide to do with Word in the future. I've seen people who are power users of Word and they do amazing things. But can a Word document handle 20 years of change tracking?

Adobe FrameMaker (or just Frame) has been the solution for team based, large document generation. I've used Frame and seen groups such as Netscape's directory server (slapd) Team produce incredibly intricate documents. MS Word is still trying to catch up to the power of Frame in 1997. And that's before XML came into the core of Frame. However, it does seem like Adobe really doesn't want to keep Frame alive. Frame supports DITA, which I will talk about later.

Several people have told me that they see Adobe replacing Frame with InDesign. I really do not see how InDesign can cope with the workflow of a constantly updating document such as a Coast Pilot. Perhaps there is more to InDesign than I realize.

Revision Control Systems With my background, the first thing that came to my mind is some sort of distributed revision control system / source control system (SCM). My experience has been mostly with RCS, CVS, and SVN, which are centralized systems. Therefore, I am a bit out of my league here. There are a number of open source implementations: git, GNU arch, Mercurial (hg), Bazaar, darcs, monotone, svn+svk, and a host of others. Perhaps one of these really is the best solution if it were to be integrated with a nice editor and interfaces to easily allow tracing of the origin of the text. Can we add functionality to record that a particular change in the text goes to a particular change submission from the field? Trac does something similar with svn repositories. If you commit a change to svn, you can reference a ticket in Trac. Adding Trac tickets in commits is not enforced or supported in any of the interfaces, so you have to know how to tag ticket references. If this is the right solution, how do we build a system that keeps the writers from having to become a pro at version control systems? The HO staff should be focused on the maritime issues and their skills should be focused on the writing.

Or could this be done on top of a non-distributed version control system? I am learning towards rejecting the idea of something like straight svn. You would like to be able to send nautical publications writers out in the field and be able to have them commit changes without having to have internet access. This is especially important in polar regions. You can not always count on internet access and Iridium's bandwidth is just not large. The "svn blame" command illustrates a little bit of the idea of being able to see the source of text.

If I were to ask a number of people at NASA who I've worked with in the past, I'm sure they would tell me they could build the killer nautical publications collaboration system on top of Eclipse complete with resource management, fuel budgets, and AI to try to detect issues in the text.

Sharepoint

Microsoft SharePoint appears at first look to be the sort of tool that the community needs to manage nautical publications. Documents can be edited on the server (windows only), checked out, tracked, and so forth. However, the versioning of SharePoint and the documents it contains are not really linked. Can SharePoint be made to understand what is different about files and can it build workflows? My experience with SharePoint has been very frustrating. If feels like a funky front end to a version control system that doesn't have all the features of version control that people count on.

There are a whole slew of SharePoint clones or workalikes (e.g. Document Repository System or OpenDocMan). This is definitely a space to watch for innovation.

DocBook

Looking at technologies such as LaTeX are traditionally combined to write papers and work well with being tracked in a revision control system. LaTeX does not have the mechanism to store extra information such as provenance or location, so it doesn't make sense. What would Donald Knuth say about a geospatially aware LaTeX?

Perhaps another possibility it to work with the tools around DocBook? There are many tools built around DocBook. For example, calenco is a collaborative editing web platform. I have no experience with kind of thing, but perhaps the DocBook tools could work with other XML types such as a nautical publication schema. Another tool that looks like it hasn't seen any update in a long time is: OWED - Online Wiki Editor for DocBook

There many other document markup languages (e.g. S1000D). Are any of them useful to this kind of process? Wikipedia has a Comparison of document markup languages that cover many more.

Databases

What support do traditional relational databases have for this kind of task? I've looked at how wikis such as Trac or Mediawiki store their entries and they are fairly simple systems that store many copies of a document each with a revision tag: Trac schema and Mediawiki schemas

Perhaps there are better technologies built directly into database systems? There is SQL/XML [ISO International Standard ISO/IEC 9075-14:2003] (SQL/XML Tutorial), which might have useful functionality, but I have not had time to look into it.

Oracle has Content Management, XML, and Text. I don't use Oracle and don't know much about what they have to offer.

PostgreSQL has a lot of powerful features including XML Support with the XML Type

Content Management Systems (CMS)

This topic sounds exactly like what we need, but having used a number of these systems, I think they miss out on many of the tracking features that are needed and they are mostly focused on the HTML output side of things. There are a huge number of systems to choose from as shown by Wikipedia's List of content management systems. The run the range from wikis (e.g. TWiki) to easy to deploy web systems (e.g. Joomla!), all the way to frameworks that have you build up your own system (e.g. Django or Ruby on Rails). I don't think the prebuilt systems are really up for this kind of task, but combining a powerful framework with the right database technology or version control system might well be a good answer.

Darwin Information Typing Architecture (DITA)

DITA sounds very interesting, but might not be capable of helping documents like nautical publications that have less structure.

Closing thoughts

As you can see from the above text, my ideas on this topic are far from fully formed. I want to leave you with a couple wikipedia topics: I looking for opinions on all sides of the technology and editing spectrum. I am sure many publishers have faced and survived this problem over the years. I definitely ran out of steam on this post and it's probably my largest post where I'm not quoting some large document or code. Hopefully the above made some sense.

Update 2009-Mar-14: Ask Slashdot has a similar topic - Collaborative Academic Writing Software?. And thanks go to Trey and MDP for positing comments on the live journal post.

Warning: This is a thought experiment meant to generate conversation. It's in no way polished.

Recently, I posted how Matt and I think the XML markup might look for a geospatially enabled nautical publication. There are details missing including the geographic feature table. GeoZui was originally built with Tcl/Tk, so it was easiest for Matt to his familiarity with Tcl to build a quick table. There is an even bigger issue looming to the general problem. The traditional view of updating nautical publications seems to be very much like the traditional linear model of a book. There is a key author at the Hydrographic Organization (HO) and maybe some editors. This has worked effectively for the last century. We could take the current PDF and paper product and convert it to a new XML format and use traditional tools such as text editors and revision control (e.g. subversion [svn]) or a database and make it work.

However, I'd like to take a step back and imagine that we are starting from scratch with no pre-existing infrastructure. Throw away any constraints that particular countries might have and ask: What would be the best system that we could build now that would work for the next century? I know this is a bit ridiculous, but humor me for now.

A use case

NOTE: There should be many more use cases for this topic, but let's start with one.

What I think there needs to be is a traceable cycle of information. In the field or on the ship, anyone should be able to make a note and/or take a picture and submit an error, update, addition, or suggestion back to the HO. Let me give an example to illustrate how the cycle might work. A mariner enters a port and sees that there is a new pier on the south side of the harbor replacing an older pier with a different configuration. The person pulls out their mobile phone (iPhone, Android, CrackBerry, etc) and runs a submit Coast Pilot (CP) note application. The app uses the phone's builtin camera to grab a picture, tags the picture with the GPS position from the builtin GPS, then queues the update. Later, when the mobile phone detects the person is no longer moving, the phone puts up a notice that there is a submission waiting. The user takes a moment to write that the pier is different and hits the send button. The phone sends the update to a public website for review. Local mariners comment on the website that the pier is indeed different and give the name of the company working on the pier. The HO is notified of the update, reviews the extra comments, and spends a little bit of money for an up-to-date satellite orthophoto. The HO digitizes the pier and sends notices to the charting branch and the nautical publications. In the nautical publications group, an author calls up a list of all the sections of text that refer to the pier and makes any changes necessary after talking to the pier authorities. The text changes are tagged with all the information about the edit including references to the initial submission in the field. The changes are passed to the editor for review. Once these changes are accepted a new coast pilot is generated, the submission note is marked as finished, and all the systems that use the coast pilot see the update and adjust their products.

This above is just an instance of what is called a "use case" in software engineering terminology. Try not get hung up on the details such as construction permitting in some countries already mandates submitting changes to the HO. The goal is to think about what a workflow might look like and what tools could support the process. How can we work with a marked up text document that makes it easy for the people editing and allows us to see where any of the text came from (aka provenance)? I don't know what the answer is, but talking to people at SNPWG brought up a number of ideas. Here are some of the thoughts that I've had and I would really like to hear what others think.

What this post is not

This is in no way a complete run through what needs to be discussed!

Another important topic is managing image submissions. We would like to be able to avoid trouble with copyright or at least track who controls the rights. Georeferencing, feature identification and tagging will be important. I will try to talk more about this some other time.

These are in no particular order and I'm leaving out a number of commercial solutions (e.g. Visual Studio Team Solution. And I'm mixing types of technology.

The Usual Suspects

These are tools that have been used in the last 20 years to produce nautical publications. This is probably a fairly traditional style of creating publications.

Microsoft Word has been used to create all sorts of documents. It can do indexing, change tracking, comments, and all sorts of other fancy things. It tries to be everything to everybody, but trying to manage large teams using track changes is enough to make me go crazy. Plus, you never know what Microsoft will decide to do with Word in the future. I've seen people who are power users of Word and they do amazing things. But can a Word document handle 20 years of change tracking?

Adobe FrameMaker (or just Frame) has been the solution for team based, large document generation. I've used Frame and seen groups such as Netscape's directory server (slapd) Team produce incredibly intricate documents. MS Word is still trying to catch up to the power of Frame in 1997. And that's before XML came into the core of Frame. However, it does seem like Adobe really doesn't want to keep Frame alive. Frame supports DITA, which I will talk about later.

Several people have told me that they see Adobe replacing Frame with InDesign. I really do not see how InDesign can cope with the workflow of a constantly updating document such as a Coast Pilot. Perhaps there is more to InDesign than I realize.