04.30.2010 17:13

Frustration with C++ and Python

There are just too many ways to do this with Python, and I have yet to find the right documentation that gets me going. It seems like such a simple task, but I keep getting frustrated. I would like to create a Python wrapper for some pretty simple C++ code. There are lots of ways to approach this, but I really wanted to keep it simple. The options are: rewrite this as pure C (no thanks, but that is what #python suggested), figure out my C++ linking issues, or use swig, pyrex, ctypes, boost (ack... no, trying to keep this simple), embedc, cython, instant, SIP, PyCXX, and I'm sure I missed a few others. I would really like to use the plain CPython API to keep the dependencies to a minimum. All I want to do is call a function with a string parameter and have it return a dictionary based on a bunch of C++ work. When I start putting C++ stuff in there, the world blows up... I found some documentation saying that -fno-line would fix this, but it does not.


```
CC=/sw/bin/g++-4 CFLAGS=-m32 /sw/bin/python setup.py build_ext
running build_ext
building 'ais' extension
g++ -L/sw32/lib -bundle -L/sw32/lib/python2.6/config -lpython2.6 -m32 build/temp.macosx-10.6-i386-2.6/ais.o -o build/lib.macosx-10.6-i386-2.6/ais.so
Undefined symbols:
  "std::ctype<char>::_M_widen_init() const", referenced from:
      _decode in ais.o
ld: symbol(s) not found
collect2: ld returned 1 exit status
error: command 'g++' failed with exit status 1
```

It also seems pretty confusing how I should do this from setup.py. For straight C modules, it handles all the compiler issues for you. I really need a simple example of C++ code that gets called and links correctly so I can load it from Python.

http://paste.lisp.org/display/98607

Comments can go here: C++ python - need help

Update 2010-May-01: Thanks to Dane (Springmeyer++), I now know that it's not me; it's the particular version of python and/or gcc. The default of building 64-bit code is tripping me up. This works:

```
CFLAGS=-m32 /sw32/bin/python setup.py build
```

These are on Mac OS X 10.6.3. Using the Apple version of Python 2.6.1:

```
/usr/bin/python setup.py build
running build
running build_ext
building 'ais' extension
creating build
creating build/temp.macosx-10.6-universal-2.6
gcc-4.2 -fno-strict-aliasing -fno-common -dynamic -DNDEBUG -g -fwrapv -Os -Wall -Wstrict-prototypes -DENABLE_DTRACE -arch i386 -arch ppc -arch x86_64 -pipe -I/System/Library/Frameworks/Python.framework/Versions/2.6/include/python2.6 -c ais.cpp -o build/temp.macosx-10.6-universal-2.6/ais.o
cc1plus: warning: command line option "-Wstrict-prototypes" is valid for C/ObjC but not for C++
cc1plus: warning: command line option "-Wstrict-prototypes" is valid for C/ObjC but not for C++
cc1plus: warning: command line option "-Wstrict-prototypes" is valid for C/ObjC but not for C++
creating build/lib.macosx-10.6-universal-2.6
g++-4.2 -Wl,-F. -bundle -undefined dynamic_lookup -arch i386 -arch ppc -arch x86_64 build/temp.macosx-10.6-universal-2.6/ais.o -o build/lib.macosx-10.6-universal-2.6/ais.so
```

And it turns out, on closer inspection, that gcc is at fault, not Python. If I specify the Apple-supplied gcc with the Fink Python, then it builds.

```
CC=/usr/bin/gcc CFLAGS=-m32 /sw32/bin/python setup.py build_ext
running build_ext
building 'ais' extension
creating build
creating build/temp.macosx-10.6-i386-2.6
/usr/bin/gcc -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -m32 -I/sw32/include/python2.6 -c ais.cpp -o build/temp.macosx-10.6-i386-2.6/ais.o
cc1plus: warning: command line option "-Wstrict-prototypes" is valid for C/ObjC but not for C++
creating build/lib.macosx-10.6-i386-2.6
g++ -L/sw32/lib -bundle -L/sw32/lib/python2.6/config -lpython2.6 -m32 build/temp.macosx-10.6-i386-2.6/ais.o -o build/lib.macosx-10.6-i386-2.6/ais.so
```

And once I've done that, it works just fine using the C++ standard library (tested here by using iostream's cout):

```
ipython
In [1]: import ais
In [2]: ais. # Then hit tab
ais.__class__ ais.__doc__ ais.__getattribute__ ais.__name__ ais.__reduce__ ais.__setattr__ ais.__subclasshook__
ais.__delattr__ ais.__file__ ais.__hash__ ais.__new__ ais.__reduce_ex__ ais.__sizeof__ ais.cpp
ais.__dict__ ais.__format__ ais.__init__ ais.__package__ ais.__repr__ ais.__str__ ais.decode
In [2]: ais.decode('hello')
nmea_payload: hello
Out[2]: -666
```

Now to go write some code to actually get some work done.

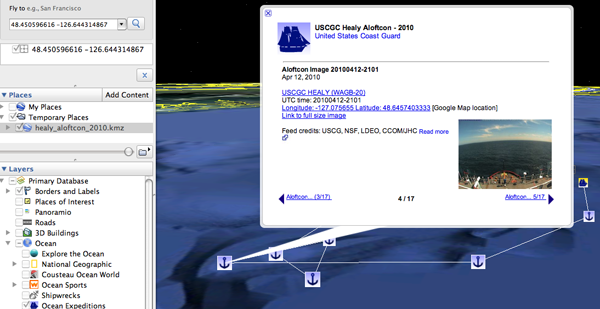

04.29.2010 08:35

SpaceQuest receiving AIS SART messages from orbit

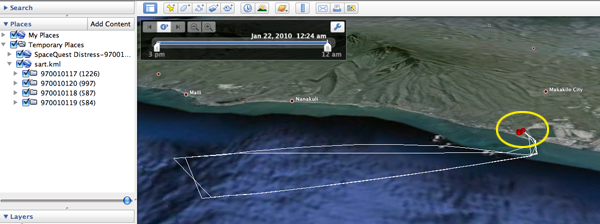

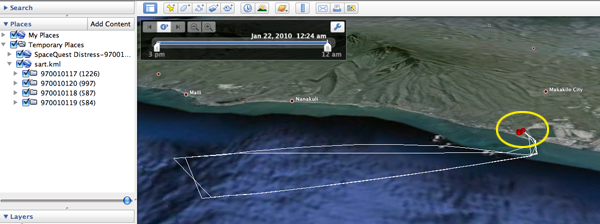

SpaceQuest has been kind enough to share what they received from the Honolulu, HI AIS Search and Rescue Transmitter (SART) test by the USCG. I have laid out the 3 MMSIs received from 3 of the USCG's NAIS stations (white lines) with the SpaceQuest received messages. They got 42 receives from the test. Pretty impressive for a receiver that is whipping past in a low orbit. These messages are Class A position reports with a special MMSI. First, an example receive from the USCG:

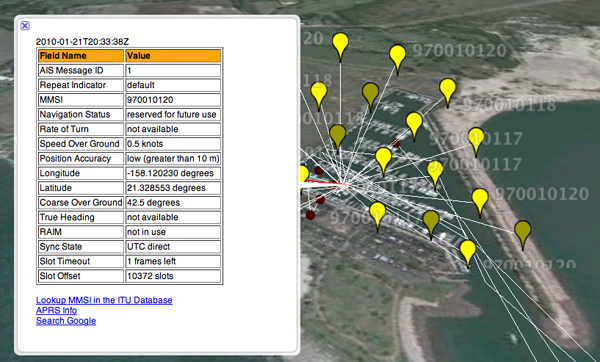

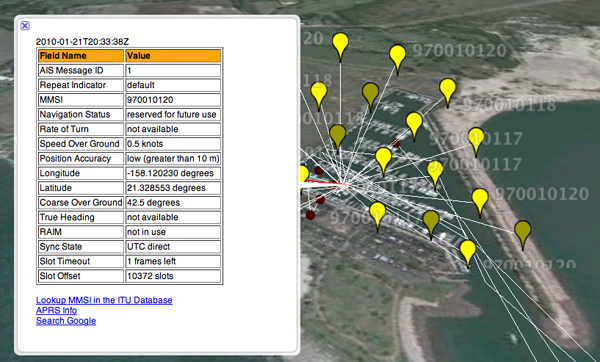


```
!AIVDM,1,1,,A,1>M4f1vP00Dd;bl<=50tH?wd08?E,0*52,d-106,S9999,r14xkaa1,1264100667
```

| Field Name | Type | Value | Value in Lookup Table | Units |
|---|---|---|---|---|
| MessageID | uint | 1 | 1 | |
| RepeatIndicator | uint | 0 | default | |
| UserID/MMSI | uint | 970010119 | 970010119 | |
| NavigationStatus | uint | 14 | reserved for future use | |
| ROT | int | -128 | -128 | 4.733*sqrt(val) °/min |
| SOG | udecimal | 0 | 0 | knots |
| PositionAccuracy | uint | 0 | low (> than 10 m) | |
| longitude | decimal | -158.12038333 | -158.12038333 | ° |
| latitude | decimal | 21.328645 | 21.328645 | ° |
| COG | udecimal | 316.8 | 316.8 | ° |
| TrueHeading | uint | 511 | 511 | ° |
| TimeStamp | uint | 54 | 54 | seconds |
| RegionalReserved | uint | 0 | 0 | |
| Spare | uint | 0 | 0 | |
| RAIM | bool | False | not in use | |
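To see where the numbers in this table come from, here is a minimal C++ sketch that de-armors the payload of the sentence above (its 6th comma-separated field) and pulls out fields using the message 1 bit offsets that also appear in the gpsd excerpt later on this page. The helper names (`payload_to_bits`, `ubits`, `sbits`) are my own for illustration, and the sketch assumes a single-slot, 168-bit message and fields no wider than 32 bits. Longitude and latitude come out in 1/10000 minute, so dividing by 600000 gives degrees; COG is in tenths of a degree.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>

// A one-slot AIS message such as a class A position report is 168 bits.
typedef std::bitset<168> Msg;

// De-armor the 6-bit ASCII payload: subtract 48, and subtract 8 more if the
// result is above 40. Message bit 0 is the most significant bit of the first
// character, so each value is copied from its bit 5 down to bit 0.
Msg payload_to_bits(const std::string &payload) {
  if (payload.size() * 6 > 168) throw std::length_error("payload longer than one slot");
  Msg bits;
  std::size_t pos = 0;
  for (char c : payload) {
    int v = static_cast<unsigned char>(c) - 48;
    if (v > 40) v -= 8;
    for (int i = 5; i >= 0; --i) bits[pos++] = (v >> i) & 1;
  }
  return bits;
}

// Unsigned field of `width` bits (1..32) starting at message bit `start`.
uint32_t ubits(const Msg &bits, std::size_t start, std::size_t width) {
  uint32_t v = 0;
  for (std::size_t i = start; i < start + width; ++i) v = (v << 1) | (bits[i] ? 1u : 0u);
  return v;
}

// Signed (two's complement) field: copy the field's top bit to the left.
int32_t sbits(const Msg &bits, std::size_t start, std::size_t width) {
  uint32_t v = ubits(bits, start, width);
  if (width < 32 && ((v >> (width - 1)) & 1u)) v |= ~0u << width;
  return static_cast<int32_t>(v);
}
```

Run against `1>M4f1vP00Dd;bl<=50tH?wd08?E`, `ubits(m, 8, 30)` gives 970010119 and `sbits(m, 61, 28) / 600000.0` gives about -158.1203833, matching the MMSI and longitude rows.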

Here are the two data sets together:

Zooming in on the SpaceQuest receives:

The lesson here is that AIS SARTs can be heard from orbit, but make sure you keep the device on for a while - with an orbital period of 90 minutes, you can't just pulse it on and expect to be heard when you are far from the coast.

There was an announcement from ORBCOMM about AIS SART receives, but they never showed any actual results: Satellite AIS Proves to Be Effective in Maritime Search and Rescue Operations [Marine Safety News]

I am sure there will be some good AIS SART discussions at the San Diego RTCM meeting, but I will not be attending this year. I hope there are some bloggers in attendance this year so I can follow along.

04.28.2010 21:00

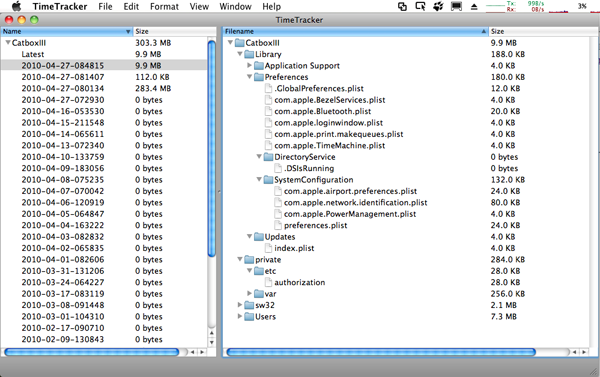

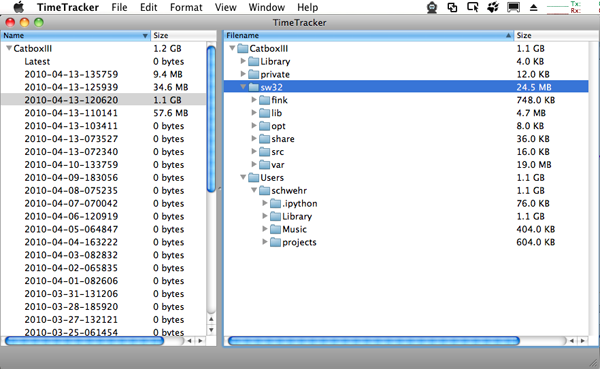

Using Apple's TimeMachine to inspect an update

I was curious what changes were made in a recent Apple security update. I realized that I now have an easy way, short of packaging tripwire for fink, to see what changed: TimeMachine. I backed up my laptop, installed the update, and did another backup right after the machine came back up. I then loaded TimeTracker and looked at the differences.

04.27.2010 17:38

Using C++ bitset to handle signed integers

For AIS, we have to be able to support signed integers from 2 to 32 bits long. These are implemented using the standard two's complement notation. I want C++ code that is easy to understand and reasonably fast. I am sure that this is a lot slower than it could be, but I will take clarity over speed, and I am sure that it is still 50 times faster than my python. I am also pretty sure that I have byte order issues ahead of me, but I am seeing the same values on both Intel and PPC Macs.


Two's complement is easy to extend to higher bit counts without doing any hard work: just copy the highest bit out to the left. Positive numbers have a 0 as their highest bit, while negative numbers have a 1. For example, 10 is -2; 110 is also -2, as is 11111110.
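The same rule can also be checked with plain integer arithmetic, without bitset. This small sketch (the `sign_extend` name is mine, not from the post) masks a value down to its low `width` bits and, if the top bit of that field is set, fills 1's to the left:

```cpp
#include <cassert>
#include <cstdint>

// Sign-extend the low `width` bits (1..32) of `value` to a 32-bit int by
// copying the field's highest bit into every bit above it.
int32_t sign_extend(uint32_t value, unsigned width) {
  if (width < 32) {
    value &= (1u << width) - 1;        // keep only the field's bits
    if (value & (1u << (width - 1)))   // highest bit set: negative number
      value |= ~0u << width;           // pad 1's to the left
  }
  // Converting an out-of-range uint32_t to int32_t is two's complement in
  // C++20 and on mainstream compilers before that (implementation-defined).
  return static_cast<int32_t>(value);
}
```

With it, `sign_extend(0x2, 2)`, `sign_extend(0x6, 3)` and `sign_extend(0xFE, 8)` all return -2, which are the 10, 110 and 11111110 examples above.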

```cpp
#include <iostream>
#include <bitset>
#include <cstdint>

using namespace std;

// Sign-extend a T-bit two's complement bitset into an int.
// Converting through uint32_t/int32_t (rather than a long/unsigned long
// union) gives the same answer on 32-bit and 64-bit builds.
template<size_t T> int signed_int(bitset<T> bs) {
  static_assert(T >= 1 && T <= 32, "T counts bits, not bytes, and must fit in 32");
  bitset<32> bs32;
  if (1 == bs[T-1]) bs32.flip(); // pad 1's to the left if negative
  for (size_t i = 0; i < T; i++) bs32[i] = bs[i];
  return static_cast<int32_t>(static_cast<uint32_t>(bs32.to_ulong()));
}

int main() {
  bitset<2> bs2;
  int bs_val;
  bs2[0] = 0; bs2[1] = 0; bs_val = signed_int(bs2); cout << "2 " << bs2 << ": " << bs_val << endl;
  bs2[0] = 1; bs2[1] = 0; bs_val = signed_int(bs2); cout << "2 " << bs2 << ": " << bs_val << endl;
  cout << endl;
  bs2[0] = 1; bs2[1] = 1; bs_val = signed_int(bs2); cout << "2 " << bs2 << ": " << bs_val << endl;
  bs2[0] = 0; bs2[1] = 1; bs_val = signed_int(bs2); cout << "2 " << bs2 << ": " << bs_val << endl;
  cout << endl;

  bitset<4> bs4;
  bs4[3] = 0; bs4[2] = 0; bs4[1] = 0; bs4[0] = 0; bs_val = signed_int(bs4); cout << "4 " << bs4 << ": " << bs_val << endl;
  bs4[3] = 0; bs4[2] = 0; bs4[1] = 0; bs4[0] = 1; bs_val = signed_int(bs4); cout << "4 " << bs4 << ": " << bs_val << endl;
  bs4[3] = 0; bs4[2] = 1; bs4[1] = 1; bs4[0] = 1; bs_val = signed_int(bs4); cout << "4 " << bs4 << ": " << bs_val << endl;
  cout << endl;
  bs4[3] = 1; bs4[2] = 1; bs4[1] = 1; bs4[0] = 1; bs_val = signed_int(bs4); cout << "4 " << bs4 << ": " << bs_val << endl;
  bs4[3] = 1; bs4[2] = 1; bs4[1] = 1; bs4[0] = 0; bs_val = signed_int(bs4); cout << "4 " << bs4 << ": " << bs_val << endl;
  bs4[3] = 1; bs4[2] = 0; bs4[1] = 1; bs4[0] = 0; bs_val = signed_int(bs4); cout << "4 " << bs4 << ": " << bs_val << endl;
  bs4[3] = 1; bs4[2] = 0; bs4[1] = 0; bs4[0] = 1; bs_val = signed_int(bs4); cout << "4 " << bs4 << ": " << bs_val << endl;
  bs4[3] = 1; bs4[2] = 0; bs4[1] = 0; bs4[0] = 0; bs_val = signed_int(bs4); cout << "4 " << bs4 << ": " << bs_val << endl;
  return 0;
}
```

Here are the results (with a couple other cases thrown in):

```
2 00: 0
2 01: 1
2 11: -1
2 10: -2
4 0000: 0
4 0001: 1
4 0111: 7
4 1111: -1
4 1110: -2
4 1010: -6
4 1001: -7
4 1000: -8
16 0000000000000000: 0
16 1111111111111111: -1
16 1111111111111110: -2
24 000000000000000000000000: 0
24 111111111111111111111111: -1
24 111111111111111111111110: -2
24 111111111111111011111110: -258
```

To give you a sense of what a much faster implementation looks like, check out what is in GPSD (truncated by me):

```c
/* De-armor each payload character into 6 bits, most significant bit first. */
for (cp = data; cp < data + strlen((char *)data); cp++) {
    ch = *cp;
    ch -= 48;
    if (ch >= 40) ch -= 8;
    for (i = 5; i >= 0; i--) {
        if ((ch >> i) & 0x01) ais_context->bits[ais_context->bitlen / 8] |= (1 << (7 - ais_context->bitlen % 8));
        ais_context->bitlen++;
    }
}

// ...

#define BITS_PER_BYTE 8
#define UBITS(s, l) ubits((char *)ais_context->bits, s, l)
#define SBITS(s, l) sbits((char *)ais_context->bits, s, l)
#define UCHARS(s, to) from_sixbit((char *)ais_context->bits, s, sizeof(to), to)

ais->type = UBITS(0, 6);
ais->repeat = UBITS(6, 2);
ais->mmsi = UBITS(8, 30);

switch (ais->type) {
case 1: /* Position Report */
case 2:
case 3:
    ais->type1.status = UBITS(38, 4);
    ais->type1.turn = SBITS(42, 8);
    ais->type1.speed = UBITS(50, 10);
    ais->type1.accuracy = (bool)UBITS(60, 1);
    ais->type1.lon = SBITS(61, 28);
    ais->type1.lat = SBITS(89, 27);
    ais->type1.course = UBITS(116, 12);
    ais->type1.heading = UBITS(128, 9);
    ais->type1.second = UBITS(137, 6);
    ais->type1.maneuver = UBITS(143, 2);
    ais->type1.raim = UBITS(148, 1)!=0;
    ais->type1.radio = UBITS(149, 20);
    break;
// ...
```

Where bits.c from gpsd looks like this after I stripped it down and made it more C99-ish (apologies to ESR), compiled with:

```
gcc -Os bits.c -std=c99 -c
```

```c
#define BITS_PER_BYTE 8

unsigned long long ubits(char buf[], const unsigned int start, const unsigned int width)
{
    unsigned long long fld = 0;
    for (unsigned int i = start / BITS_PER_BYTE; i < (start + width + BITS_PER_BYTE - 1) / BITS_PER_BYTE; i++) {
        fld <<= BITS_PER_BYTE;
        fld |= (unsigned char)buf[i];
    }
    unsigned end = (start + width) % BITS_PER_BYTE;
    if (end != 0) fld >>= (BITS_PER_BYTE - end);
    /* keep only the low width bits; ~0ULL avoids left-shifting a negative value */
    if (width < 64) fld &= ~(~0ULL << width);
    return fld;
}

signed long long sbits(char buf[], const unsigned int start, const unsigned int width)
{
    unsigned long long fld = ubits(buf, start, width);
    /* 1ULL rather than 1 so that fields wider than an int still work */
    if (fld & (1ULL << (width - 1))) fld |= (~0ULL << (width - 1));
    return (signed long long)fld;
}
```

Where the result looks like this:

```
nm bits.o
00000000000000a0 s EH_frame1
0000000000000060 T _sbits
00000000000000e8 S _sbits.eh
0000000000000000 T _ubits
00000000000000b8 S _ubits.eh
ls -l bits.c
-rw-r--r-- 1 schwehr staff 712 Apr 27 20:37 bits.c
```

This would still be better as inline functions with aggressive optimizations turned on.

The original from gpsd is more like 1148 bytes. Going back into the C/C99/C++ world activates a weird part of my brain... I get crazy about correctness and tweaking things to be just right. When I code python, I have more fun and am much more relaxed.
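Taking up the idea of inlining, here is one possible C++ rendering of the same byte-at-a-time approach as `inline` functions over an `unsigned char` buffer. It is a sketch, not gpsd's code: it builds its masks from unsigned constants instead of shifting `-1LL`, and it assumes `start % 8 + width <= 64` so that every byte it touches fits in one 64-bit accumulator (the numeric AIS fields discussed here are at most 30 bits wide). Whether it is actually faster than the out-of-line version depends on the compiler and flags, so that would need measuring.

```cpp
#include <cassert>
#include <cstdint>

// Unsigned field of `width` bits starting at bit `start` of a buffer whose
// bit 0 is the most significant bit of buf[0]. Assumes start % 8 + width <= 64
// so every byte touched fits in the 64-bit accumulator.
inline uint64_t ubits(const unsigned char *buf, unsigned start, unsigned width) {
  const unsigned first = start / 8;
  const unsigned last = (start + width + 7) / 8;       // one past the last byte
  uint64_t fld = 0;
  for (unsigned i = first; i < last; ++i) fld = (fld << 8) | buf[i];
  const unsigned end = (start + width) % 8;
  if (end != 0) fld >>= (8 - end);                     // drop bits after the field
  if (width < 64) fld &= (UINT64_C(1) << width) - 1;   // drop bits before it
  return fld;
}

// Signed version: fill 1's to the left when the field's top bit is set.
inline int64_t sbits(const unsigned char *buf, unsigned start, unsigned width) {
  uint64_t fld = ubits(buf, start, width);
  if (width < 64 && ((fld >> (width - 1)) & 1u)) fld |= ~UINT64_C(0) << width;
  return static_cast<int64_t>(fld);
}
```

For a two-byte buffer `{0xFF, 0x00}`, `ubits(buf, 4, 8)` is 240 and `sbits(buf, 6, 4)` is -4.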

04.27.2010 08:59

updating PostGIS

I should have followed my own advice last week... think before you update (and back up your database first)!!! I've been working to hand off some ERMA tasks to the new team, and in the process, I'm walking them through updating fink. Without people to support the system, I had pretty much left the server alone for well over a year. That meant that it was using python 2.5 and an older version of PostGIS. Here you can see the history of PostGIS on the machine, as I have yet to run fink cleanup to remove the old debs.


```
locate postgis | grep debs | grep -v java
/sw/fink/debs/postgis82_1.3.2-2_darwin-i386.deb
/sw/fink/debs/postgis83_1.3.3-2_darwin-i386.deb
/sw/fink/debs/postgis83_1.5.1-2_darwin-i386.deb
cd /sw/fink/10.5/local/main/binary-darwin-i386/database && ls -l postgis83_1.3.3-2_darwin-i386.deb
-rw-r--r-- 1 root admin 495640 Aug 26 2008 postgis83_1.3.3-2_darwin-i386.deb
```

After doing the update, the world blew up on me:

```
psql ais
SELECT ST_astext('POINT EMPTY'); -- dummy NOOP to test PostGIS
ERROR: could not access file "/sw/lib/postgresql-8.3/liblwgeom.1.3.so": No such file or directory

dpkg -L postgis83 | grep lib
/sw/lib/postgresql-8.3/postgis-1.5.so
```

Whoops! The library name has changed. At this point, I attempted to upgrade PostGIS in the ais database with the three upgrade scripts and the postgis.sql script. That was going to be a serious pain. The upgrades did not work, and I would have had to remove the type definition from postgis.sql, which seemed risky. Thankfully, the ais database is not precious at the moment, so I did a dropdb ais to blast it into the ether. I fixed up my ais-db script to handle the latest PostGIS needs and rebuilt the database like this:

```
ais-db -v -d foo --create-database --add-postgis --create-tables --create-track-lines --create-last-position
```

As I have not deleted the old deb, I am thinking through my options to recover my other PostGIS databases on the server. It wouldn't be the end of the world if these could not come back, but it would be a shame. I think I can extract the old deb, put the necessary shared libs back in place by hand, and then do a backup. If not, I might be able to uninstall PostGIS from the databases and then reinstall it.

```
file *.deb
postgis83_1.3.3-2_darwin-i386.deb: Debian binary package (format 2.0)
mkdir foo && cd foo
dpkg --extract ../postgis83_1.3.3-2_darwin-i386.deb .
du sw
164 sw/bin
476 sw/lib/postgresql-8.3
476 sw/lib
3344 sw/share/doc/postgis83
3344 sw/share/doc
3344 sw/share
3984 sw
```

I probably also need these:

```
ls -1 /sw/fink/debs/libgeos*3.0*
/sw/fink/debs/libgeos3-shlibs_3.0.0-2_darwin-i386.deb
/sw/fink/debs/libgeos3_3.0.0-2_darwin-i386.deb
/sw/fink/debs/libgeosc1-shlibs_3.0.0-2_darwin-i386.deb
/sw/fink/debs/libgeosc1_3.0.0-2_darwin-i386.deb
```

There is also a more interesting trick with deb files. It turns out that debs are ar archives (people still use these???). This lets you really see what is in the deb:

```
ar x ../libgeosc1-shlibs_3.0.0-2_darwin-i386.deb
ls -l
total 32
-rw-r--r-- 1 schwehr staff 1313 Apr 27 09:21 control.tar.gz
-rw-r--r-- 1 schwehr staff 20706 Apr 27 09:21 data.tar.gz
-rw-r--r-- 1 schwehr staff 4 Apr 27 09:21 debian-binary
tar tf data.tar.gz
./
./sw/
./sw/lib/
./sw/lib/libgeos_c.1.4.1.dylib
tar tf control.tar.gz
./
./control
./package.info
./shlibs
```

Handy when trying to see how things were put together. It strikes me as funny that there are tars embedded in an ar archive. Why not just use tar throughout? Congrats to file for looking closer at the ar archive to see that it's really a Debian package.

I'll leave it to another time to try to restore the other non-essential databases on that server.

04.23.2010 12:41

Another example of what a GPS receiver can do

I haven't looked at the details of this vessel, but when it was parked at the dock under the Tobin Bridge, the GPS feeding its Class A AIS position reports produced some really bad position estimates. Outside of that, it looks to have done a great job. The AIS unit should have broadcast that it did not have a GPS lock in these cases.

I now have ais_decimate_traffic working. It defaults to 200 meters between reports or 10 minutes, whichever comes sooner.

```
ais_decimate_traffic.py -f 2009-filtered.db3 -x -71.155 -X -69.36 -y 41.69 -Y 42.795
keep_cnt: 275997
toss_cnt: 897231
outside_cnt: 51287
```

The above image is not filtered, but you can see below that filtering keeps the general sense of when and where the ship was, but with about an order of magnitude fewer points.
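The decimation rule can be sketched in a few lines. This is not the code in ais_decimate_traffic.py (which is Python); it is a hypothetical C++ restatement under a few assumptions: reports arrive sorted by time, the 200 m and 10 minute thresholds are measured from the last kept report of the same MMSI, distance is great-circle (haversine) on a sphere of radius 6371 km, and -x/-X/-y/-Y are the minimum and maximum longitude and latitude of the bounding box. The `Report`, `BBox` and `Counts` types are made up for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical record layout: time in seconds, position in degrees.
struct Report { unsigned mmsi; long t; double lon, lat; };
struct BBox { double x_min, x_max, y_min, y_max; };  // lon min/max, lat min/max
struct Counts { std::size_t keep = 0, toss = 0, outside = 0; };

// Great-circle distance in meters (haversine, spherical Earth).
double distance_m(const Report &a, const Report &b) {
  const double kDegToRad = 3.14159265358979323846 / 180.0;
  const double kEarthRadiusM = 6371000.0;
  const double dlat = (b.lat - a.lat) * kDegToRad;
  const double dlon = (b.lon - a.lon) * kDegToRad;
  const double h = std::sin(dlat / 2) * std::sin(dlat / 2) +
                   std::cos(a.lat * kDegToRad) * std::cos(b.lat * kDegToRad) *
                   std::sin(dlon / 2) * std::sin(dlon / 2);
  return 2 * kEarthRadiusM * std::asin(std::min(1.0, std::sqrt(h)));
}

// Keep a report when it is the vessel's first one, or when it is at least
// min_dist_m away from, or max_gap_s after, that vessel's last kept report.
std::vector<Report> decimate(const std::vector<Report> &reports, const BBox &box,
                             Counts &counts, double min_dist_m = 200.0,
                             long max_gap_s = 600) {
  std::map<unsigned, Report> last_kept;
  std::vector<Report> kept;
  for (const Report &r : reports) {
    if (r.lon < box.x_min || r.lon > box.x_max || r.lat < box.y_min || r.lat > box.y_max) {
      ++counts.outside;
      continue;
    }
    const auto it = last_kept.find(r.mmsi);
    const bool keep = it == last_kept.end() ||
                      distance_m(it->second, r) >= min_dist_m ||
                      r.t - it->second.t >= max_gap_s;
    if (keep) {
      last_kept[r.mmsi] = r;
      kept.push_back(r);
      ++counts.keep;
    } else {
      ++counts.toss;
    }
  }
  return kept;
}
```

A report is kept as soon as either threshold is reached, which is one reading of "whichever comes sooner".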

04.22.2010 22:21

Summer internship at NASA Ames - Digital Lunar Mapping

If you are looking for a fun place to work and are interested in really digging into some good challenges, check out this internship. I have worked with and in this group for the last 14 years and have to say that it is an amazing place. Email Terry directly if you are interested.

SUMMER 2010 UNDERGRADUATE INTERNSHIP (NEW!)

NASA Ames Intelligent Robotics Group

http://irg.arc.nasa.gov

DIGITAL LUNAR MAPPING

Want to help NASA map the Moon? Interested in building high-resolution digital terrain models (DTM)? The NASA Ames Intelligent Robotics Group is looking for a summer intern student to help create highly accurate maps of the lunar surface using computer vision and high-performance computing. The goal of this work is to provide scientists and NASA mission architects with better information to study the Moon and plan future missions.

This internship involves developing methods to compare large-scale DTMs of the Moon. The work requires some familiarity with the iterative closest point algorithm (ICP), optimization algorithms such as gradient descent, and 3D computer vision.

The applicant must be an upper-level undergraduate (junior or senior) or graduate student in Computer Science, Electrical Engineering or Mathematics. A solid background in optimization algorithms, strong C++ skills and experience with KD trees is preferred. Experience working with the NASA Planetary Data System (PDS) is a plus.

Start date: any time

Duration: 3 months, with option to extend into Fall 2010.

TO APPLY
========

US Citizenship is REQUIRED for these internships.

Please send a detailed resume, description of your software experience & projects, and a short letter describing your research interests to:

terry.fong@nasa.gov

INTELLIGENT ROBOTICS GROUP (IRG)
================================

Since 1989, IRG has been exploring extreme environments, remote locations, and uncharted worlds. IRG conducts applied research in a wide range of areas including field mobile robots, applied computer vision, geospatial data systems, high-performance 3D visualization, and robot software architecture. NASA Ames is located in the heart of Silicon Valley and is a world leader in information technology and space exploration.

IRG was formerly known as the Intelligent Mechanisms Group (IMG).

04.22.2010 14:44

Response to Mark Moerman about free software

Warning: I just blasted out this opinion piece, since I really need to be processing data right now rather than this, but it needs at least some response.


In the May 2010 Digital Ship, Mark Moerman has an opinion piece entitled "How insecure are you?". The article kicks off with this quote:

Small shipbrokers and newcomers to the market could be leaving themselves open to all manner of security risks in their rush to adopt 'free' software and other shortcuts to the IT infrastructure they need, writes Mark Moerman, SDSD

and he writes:

... there's no such thing as free, business-quality software or IT services, whether for e-mail or anything else. There will always be hidden costs - such as features that need unlocking - and an overall lack of support.

I think his article is both good and really misleading at the same time. He is right that cloud computing has risks, but I think he is also implying that open source software that you do not pay for directly is too risky. I have to argue that whether something is open or closed, free or paid, does not in any way mean that it is right for your needs. You have to look at the needs of your organization. In my world, sometimes paid services win out, and other times I go with the open source or free world. If, for example, you think that moving to a closed email system makes you more secure, you are very much mistaken. If you want trusted email communication, you need to be using cryptographic signatures (e.g. gnupg). If you want your emails to be private, you must encrypt them. If you don't do those things, your choice of software or service provider really does not matter.

When it comes to the software and IT that you choose, you need to consider the life cycle of your company. I have been caught too many times by commercial closed software. Just because you don't program yourself does not mean you should blow off open tools. If your company depends on something, do you have the ability to hire someone of your choosing to fix it? What if you want to support a tool beyond when the author decides to move on, retires, passes away, or gets bought out by a company that intentionally drops the product? Sometimes the closed solution is the way to go... it has functionality you can get no other way, or the support from the company is stellar. But think about Linux versus Windows. Say you standardize on XP for your company. Now you are facing a Microsoft that is pushing hard to get you to Windows 7. If Microsoft totally drops patches for XP and you can't switch, what are you to do? If you had standardized on a Linux distribution at the same time that XP came out and still wanted to stay with that distribution and kernel, but with the updates that you need that do not break your organization, you could hire someone, put the work out for bid, etc. to get help maintaining that system. There are still people out there on Windows 3.x. I feel for them. With all that, you still find me using Apple computers for my laptop and desktop, and I still use Fledermaus (a closed software product) for lots of my visualizations.

And if you care about data theft and security, why-o-why are you using closed tools? Demand open systems and pay to get them audited.

Why are we letting Windows systems that we have no way of auditing control LNG vessels? I think it is completely nuts to trust these closed systems.

My main message: think carefully before you choose software and spend your staff's valuable time on training.

04.21.2010 17:32

FOSS disaster management software

Check out Jon 'maddog' Hall's Picks for Today's Six Best OSS Projects. I just met Jon a couple of weeks ago when a group of us gave a group presentation at UNH Manchester (Open Access, Open Content in Education - PPT). The talks were long, so there wasn't any real room for discussion, but it was still great to meet the other panel members (and visit UNHM for the first time).

In Jon's picks, he mentioned Open Street Map (OSM), which is great. I championed OSM for ERMA a while back, and it now has OSM as an optional background. Nothing is worse during a disaster than being unable to mark a bridge as out or to add emergency roads, which the Google team just does not allow. But better yet, he mentioned Sahana, which is open software for disaster management. It's PHP and MySQL. I will have to check it out! It looks like it has been around for quite a while, but of course the open source consultants that I've been working with (hi Aaron) already know about the project and know some of those involved.


04.21.2010 10:48

IHR paper on AIS Met Hydro

Lee and I have a paper out in the reborn online International Hydrographic Review (IHR) journal. I am excited to have it be an online journal, where we do not have to worry about the costs of printing.


New Standards for Providing Meteorological and Hydrographic Information via AIS Application-Specific Messages (PDF)

Abstract: AIS Application-specific messages transmitted in binary format will be increasingly used to digitally communicate maritime safety/security information between participating vessels and shore stations. This includes time-sensitive meteorological and hydrographic information that is critical for safe vessel transits and efficient ports/waterways management. IMO recently completed a new Safety-of-Navigation Circular (SN/Circ.) that includes a number of meteorological and hydrographic message applications and data parameters. In conjunction with the development of a new SN/Circ., IMO will establish an International Application (IA) Register for AIS Application-Specific Messages. IALA plans to establish a similar register for regional applications. While there are no specific standards for the presentation/display of AIS application-specific messages on shipborne or shore-based systems, IMO issued guidance that includes specific mention of conforming to the e-Navigation concept of operation. For both IHO S-57 and S-100-related data dealing with dynamic met/hydro information, it is recommended that IHO uses the same data content fields and parameters that are defined in the new IMO SN/Circ. on AIS Application-specific Messages.

Also, Lee just recently presented this paper: Alexander, Schwehr, Zetterberg, Establishing an IALA AIS Binary Message Register: Recommended Process, 17th IALA CONFERENCE. (PDF)

As always, my papers can be downloaded from: http://vislab-ccom.unh.edu/~schwehr/papers/

04.20.2010 16:55

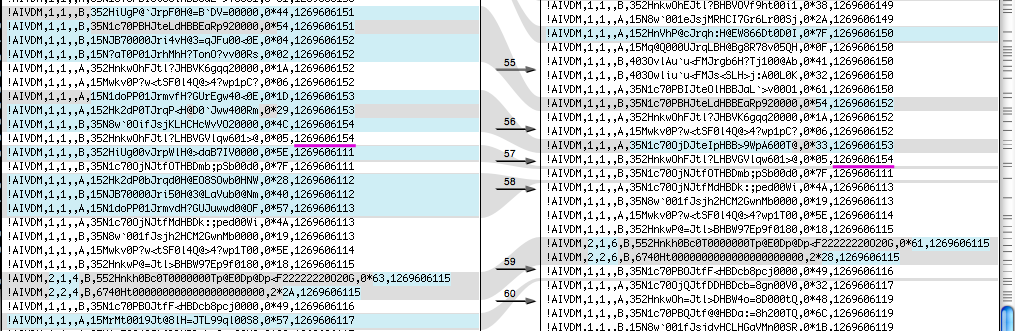

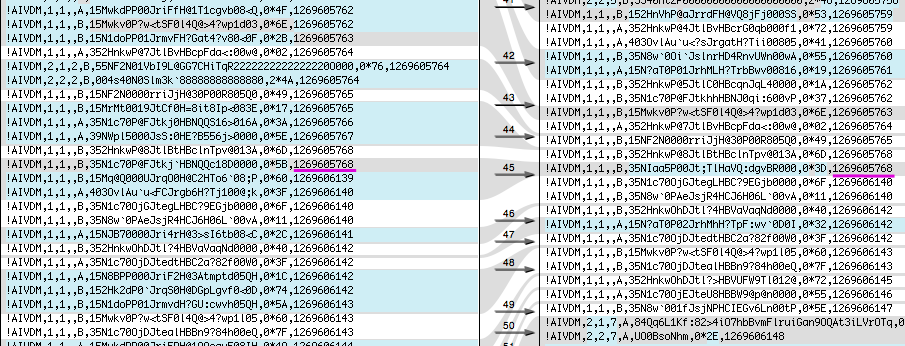

Decoding AIS using C++ bitset

I have reached the point where I have to give up my pure python AIS library for some of the processing that I am doing. When you have 1TB of AIS AIVDM NMEA to grind through, python using BitVector is just not going to cut it. This would be the 4th generation of AIS systems at CCOM.

Matt started off in ~2004 with some really fast code using a whole bunch of macros and bit shifts. Great stuff, but hard to follow.

I thought about ESR's code in GPSD. It looks fast, but I'd like to structure things a bit differently. I would really like to use a bit representation to make adding new types as easy as possible. And I am planning to make a nice C++ interface rather than just C.

I have been looking at a number of C++ libraries. The first was vector<bool>, but it looks like it is not up to the task. It is the odd child of vector and behaves differently than the other vector templates.

Boost's dynamic_bitset and BitMagic look attractive, but both add a dependency that I don't always want to have. Perhaps I will come back to using one of these two.

For now, I have settled on the C++ standard bitset. It covers the basics and is pretty easy to understand. It does have one major problem: you have to know at compile time how big your bitset will be, since the size is a template parameter. For some applications, this feature of bitset will be a show stopper, but for AIS, we know that messages must be <= 168+4*256 bits long when they come back over NMEA from the modem. I can then make one large bitset to hold them all.

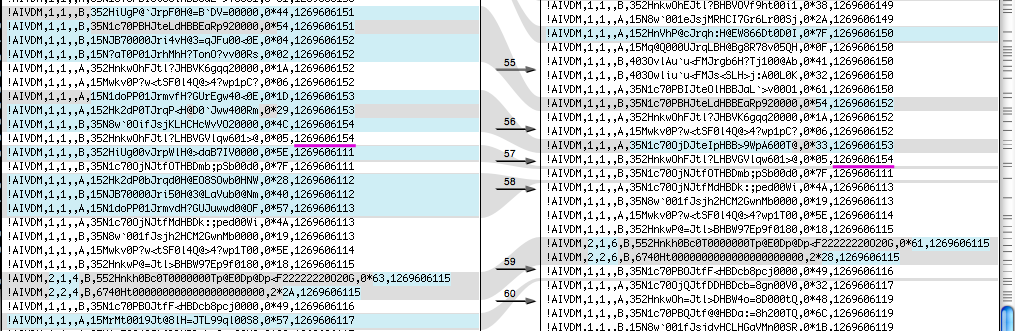

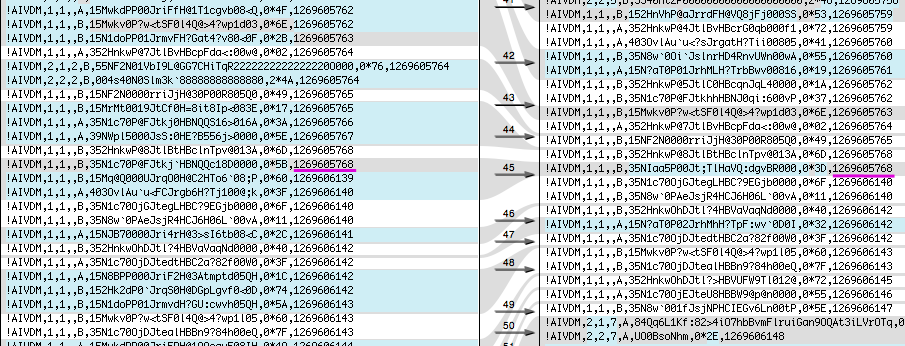

My first program filters single-sentence NMEA AIS messages based on the MMSI. I use this when I have a list of ships that I am reporting on. Here is the code:


```cpp
01: #include <bitset>
02: #include <cstdlib>  // exit, atoi
03: #include <fstream>
04: #include <iostream>
05: #include <string>
06: #include <sstream>
07: #include <vector>
08: #include <set>
09:
10: using namespace std;
11: const size_t max_bits = 168;
12:
13: vector<string> &split(const string &s, char delim, vector<string> &elems) {
14:     stringstream ss(s);
15:     string item;
16:     while (getline(ss, item, delim)) {
17:         elems.push_back(item);
18:     }
19:     return elems;
20: }
21:
22: vector<string> split(const string &s, char delim) {
23:     vector<string> elems;
24:     return split(s, delim, elems);
25: }
26:
27: int decode_mmsi(const bitset<max_bits> &msg_bits) {
28:     bitset<30> bits;
29:     for (size_t i=0; i < 30; i++)
30:         bits[i] = msg_bits[8 + 29-i];  // MMSI is message bits 8-37; undo the reversed order
31:     return bits.to_ulong();
32: }
33:
34: bitset<6> nmea_ord[128]; // Direct lookup by the character ord number (ascii)
35:
36: void build_nmea_lookup() {
37:     for (int c=0; c < 128; c++) {
38:         int val = c - 48;
39:         if (val >= 40) val -= 8;
40:         if (val < 0) continue;
41:         bitset<6> bits(val);
42:         bool tmp;
43:         tmp = bits[5]; bits[5] = bits[0]; bits[0] = tmp;
44:         tmp = bits[4]; bits[4] = bits[1]; bits[1] = tmp;
45:         tmp = bits[3]; bits[3] = bits[2]; bits[2] = tmp;
46:         nmea_ord[c] = bits;
47:     }
48: }
49:
50: void splice_bits(bitset<max_bits> &dest, bitset<6> src, size_t start) {
51:     for (size_t i=0; i<6; i++) { dest[i+start] = src[i]; }
52: }
53:
54: void aivdm_to_bits(bitset<max_bits> &bits, const string &nmea_payload) {
55:     for (size_t i=0; i < nmea_payload.length() && (i+1)*6 <= max_bits; i++)  // never write past max_bits
56:         splice_bits(bits, nmea_ord[nmea_payload[i] & 0x7f], i*6);  // mask keeps the index in 0-127
57: }
58:
59: int main(int argc, char *argv[]) {
60:     build_nmea_lookup();
61:     ifstream infile(argv[1]);
62:     if (! infile.is_open() ) {
63:         cerr << "Unable to open file: " << argv[1] << endl;
64:         exit(1);
65:     }
66:
67:     set<int> mmsi_set;
68:     for (int i=2; i < argc; i++) {
69:         const int mmsi = atoi(argv[i]);
70:         mmsi_set.insert(mmsi);
71:     }
72:
73:     bitset<max_bits> bs;
74:     int i = 0;  // line counter
75:     string line;
76:     while (getline(infile, line)) {  // stops cleanly at end of file
77:         i++;
78:         // (the line was already read by getline() in the loop condition)
79:         vector<string> fields = split(line,',');
80:         if (fields.size() < 7) continue;
81:         aivdm_to_bits(bs, fields[5]);
82:         const int mmsi = decode_mmsi(bs);
83:         if (mmsi_set.find(mmsi) == mmsi_set.end()) continue;
84:         cout << line << "\n";
85:     }
86:
87:     return 0;
88: }
```

A quick walkthrough of the code...

The first thing that I do is build a lookup table of bitset<6> blocks so that I can convert each NMEA character into 6 bits. build_nmea_lookup fills an array with the bitsets, but as you can see in lines 43-46, the bit order is the reverse of the way that I was thinking when writing this code. A future version should remove the bit reordering that happens at lines 43-45 and line 30.

Lines 67-71 create a set of MMSI numbers to keep. I am trying to use the STL containers as much as possible.

Then in lines 76-85, I loop through each line. On line 79, I split the NMEA on commas so that I can get the binary payload out of the 6th field. After working in python, it feels so strange that C++ does not come with a split. I got my split functions from StackOverflow: C++: How to split a string? On line 81, I convert the ASCII NMEA payload to a bitset using aivdm_to_bits. This really just boils down to copying bits from the lookup table nmea_ord into the bitset (bs).

Line 82 gets down to the real work of interpreting the bits. decode_mmsi copies the bits out of the message bitset bs and into a local working bitset, bits. Then to_ulong brings the data back to a C++ unsigned long variable. Pretty easy!

I then check to see if the mmsi is in mmsi_set (line 83). The syntax with find is pretty awkward, having to check against mmsi_set.end().
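For comparison, here is a minimal Python 3 sketch (not the author's code) of the same lookup-table idea with the bits stored most-significant-bit first, which is one way to avoid the reordering at lines 43-45 and line 30. The names `NMEA_BITS`, `aivdm_to_bits`, and `bits_to_uint` are hypothetical, and the table only covers the valid payload characters (ASCII 48-87 and 96-119). The sample payload is taken from the NAIS log lines quoted later on this page.

```python
def build_nmea_lookup():
    """Map each valid AIS payload character to its 6 bits, most significant bit first."""
    table = {}
    for c in list(range(48, 88)) + list(range(96, 120)):  # '0'..'W' and '`'..'w'
        val = c - 48
        if val >= 40:
            val -= 8
        table[chr(c)] = [(val >> (5 - i)) & 1 for i in range(6)]
    return table


NMEA_BITS = build_nmea_lookup()


def aivdm_to_bits(payload):
    """Concatenate the 6-bit groups of each payload character (message bit 0 first)."""
    bits = []
    for ch in payload:
        bits.extend(NMEA_BITS[ch])
    return bits


def bits_to_uint(bits):
    """Read a list of bits as an unsigned integer, first bit most significant."""
    value = 0
    for b in bits:
        value = (value << 1) | b
    return value


payload = '15N1BigP00JrmgJH?FduI?vT0H:e'
# The MMSI is message bits 8-37; with MSB-first storage no reversal is needed.
mmsi = bits_to_uint(aivdm_to_bits(payload)[8:38])
```

Because every table entry is already in message order, a slice of the bit list can be read straight off, much like `int(bv[8:38])` in the BitVector version.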

I did some quick benchmarking on this code. Compiled without optimization, it took 15.3s on 526K lines of position messages, or 34K lines per second. Turning on optimization ("-O3 -funroll-loops -fexpensive-optimizations"), the program took about 1/3 of the time to complete the filtering: a typical run was 5.5 seconds, or 95K lines/sec. The python version of the code takes 5 minutes and 12s, or 1.7K lines/sec, so the C++ is faster than my python code by a factor of 57. I really like Avi Kak's BitVector library for python, but it is not very fast. I've said it before: a cBitVector would be a great contribution if anyone is looking for a project. The python code even got an optimization that the C++ code did not... I only decode the first 7 NMEA payload characters, as that is all I need to get to bit 38.

```python
#!/usr/bin/env python
import sys
import ais

mmsi_set = set((205445000,
                # ...
                367381230))

for line_num, line in enumerate(file(sys.argv[1])):
    if line_num % 10000 == 0: sys.stderr.write('%d\n' % (line_num,))
    bv = ais.binary.ais6tobitvec(line.split(',')[5][:7])
    mmsi = int(bv[8:38])
    if mmsi in mmsi_set:
        print line,
```
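The 7-character shortcut used in the python script above can also be done without a bit-vector library at all. This is a Python 3 sketch with hypothetical helper names (`sixbit`, `decode_mmsi`, `filter_by_mmsi`): it packs the first 7 payload characters into one 42-bit integer and shifts out the MMSI. It has not been benchmarked against either version.

```python
def sixbit(ch):
    """Value (0-63) of one AIS payload character."""
    val = ord(ch) - 48
    if val >= 40:
        val -= 8
    return val


def decode_mmsi(payload):
    """MMSI (bits 8-37) using only the first 7 payload characters (42 bits)."""
    value = 0
    for ch in payload[:7]:
        value = (value << 6) | sixbit(ch)
    # Bits 38-41 sit below the MMSI: drop those 4, then keep 30 bits.
    return (value >> 4) & ((1 << 30) - 1)


def filter_by_mmsi(lines, mmsi_set):
    """Yield the NMEA lines whose 6th field decodes to an MMSI in mmsi_set."""
    for line in lines:
        fields = line.split(',')
        if len(fields) < 7 or len(fields[5]) < 7:
            continue  # not a complete AIVDM sentence
        if decode_mmsi(fields[5]) in mmsi_set:
            yield line
```

Integer shifts avoid creating a bit-vector object per line, which is where much of the per-line overhead would be expected to go.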

Things to do... I haven't tried my PPC machine to see if this blows up with endian issues. I also need to decode signed integers and embedded AIS strings. Then I need to turn this code into a more C++-like interface. I'd like to have a factory setup where I drop in NMEA sentences and get a queue of decoded messages built up. It would be especially good if it could handle multi-sentence lines with multiple receives. I also still need to handle NMEA 4.0 tag block sentences in addition to the older USCG NMEA format that I have been using all along. I am also guessing that talking directly to sqlite via the C interface will be faster than the sqlite3 python module. The decoding of AIS can probably be sped up another 10-15% by inlining and avoiding the whole split and vector<string> business: I can just identify the right comma positions and pull the characters out of the NMEA string without creating a new vector of strings. But as always, first make it work correctly, then build a fancier and faster design.
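The comma-position idea is easy to sketch. This Python 3 version (the name `nth_field` is hypothetical) walks the commas with `str.find` instead of building a list of every field; in C++ the same loop would use `std::string::find`. In CPython `str.split` is itself implemented in C, so here the point is only avoiding the extra list, and any speed-up would have to be measured.

```python
def nth_field(line, n, sep=','):
    """Return field n (0-based) of line without splitting the whole line, or None."""
    start = 0
    for _ in range(n):
        start = line.find(sep, start)
        if start < 0:
            return None  # fewer than n + 1 fields
        start += 1       # skip past the separator
    end = line.find(sep, start)
    return line[start:] if end < 0 else line[start:end]
```

For an AIVDM line, `nth_field(line, 5)` returns the armored payload, the same string as `line.split(',')[5]`.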

I am still going to use the python code for my research, but having a reasonably flexible C++ library that is 57 times faster will be a great help. I could probably make my code even faster and parallelize it to get more out of my dual and quad core machines.

04.16.2010 07:27

AIS receive distances by day

In an effort to understand the quality of the data that I am receiving from AIS, I have written a tool to do something similar to a 2D spectrogram. For each day, I binned the received class A messages into 5km bins out to 200km. I then wrote out a PGM image. The vertical axis in the image is distance from the receiver, with the receiver at the bottom. I used log(bin_count) to bring out the cells with fewer counts. I am guessing that Boston is the heavy white bar near the bottom. The code will be in ais_distance.py in noaadata.
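A minimal Python 3 sketch of the binning and PGM step, under stated assumptions: distances use a spherical-Earth haversine formula, each day becomes one image column, and the scaling uses log(1 + count) (a small change from log(bin_count)) so that bins holding a single message stay distinguishable from empty ones. This is not the code in ais_distance.py; `distance_km`, `bin_distances`, and `make_pgm` are hypothetical names.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; assumes a spherical Earth


def distance_km(lon1, lat1, lon2, lat2):
    """Great-circle distance in km between two lon/lat points (haversine formula)."""
    lon1, lat1, lon2, lat2 = map(math.radians, (lon1, lat1, lon2, lat2))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def bin_distances(distances_km, bin_km=5.0, max_km=200.0):
    """Count distances into fixed-width bins; values outside [0, max_km) are dropped."""
    counts = [0] * int(max_km / bin_km)
    for d in distances_km:
        if 0 <= d < max_km:
            counts[int(d // bin_km)] += 1
    return counts


def make_pgm(day_columns, max_gray=255):
    """ASCII (P2) PGM text: one column per day, nearest bin on the bottom row."""
    nbins = len(day_columns[0])
    logs = [[math.log1p(c) for c in col] for col in day_columns]
    peak = max(max(col) for col in logs) or 1.0  # avoid dividing by zero
    rows = []
    for r in range(nbins):
        b = nbins - 1 - r  # top row is the farthest bin
        rows.append(' '.join(str(int(round(col[b] / peak * max_gray))) for col in logs))
    header = 'P2\n%d %d\n%d\n' % (len(day_columns), nbins, max_gray)
    return header + '\n'.join(rows) + '\n'
```

Per day, one would compute `distance_km(receiver_lon, receiver_lat, lon, lat)` for each class A position report, bin the results, and pass the list of daily count columns to `make_pgm`.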

04.15.2010 12:17

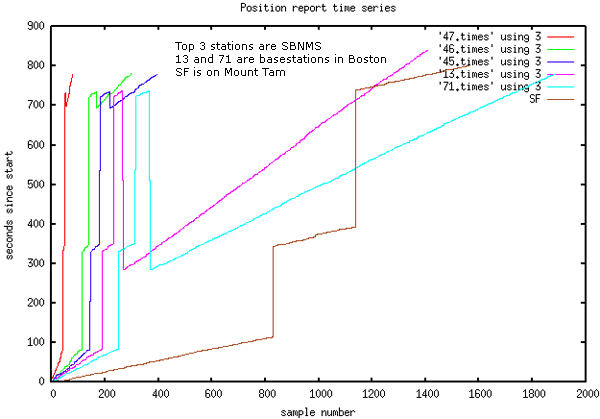

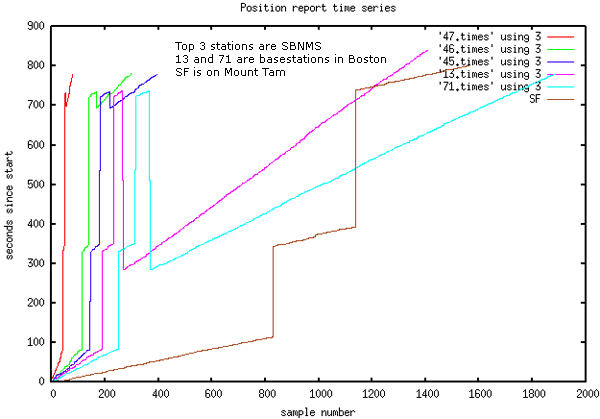

NAIS network delay and clock skew

I have been running an experiment for the last week. On a UNH machine

running NTP, I have been logging NAIS and with each line, I log the

local timestamp. This logging is done by my port-server python code

listening to AISUser and calling time.time() when it receives a line

over TCP. I picked the day with the most data, which turned out to be

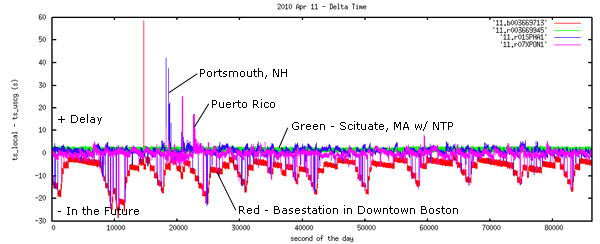

April 11th.

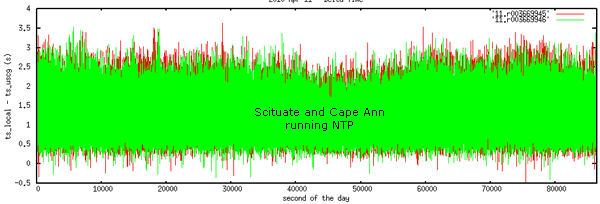

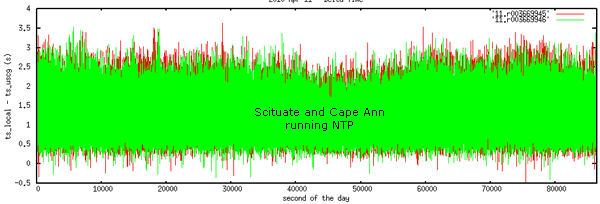

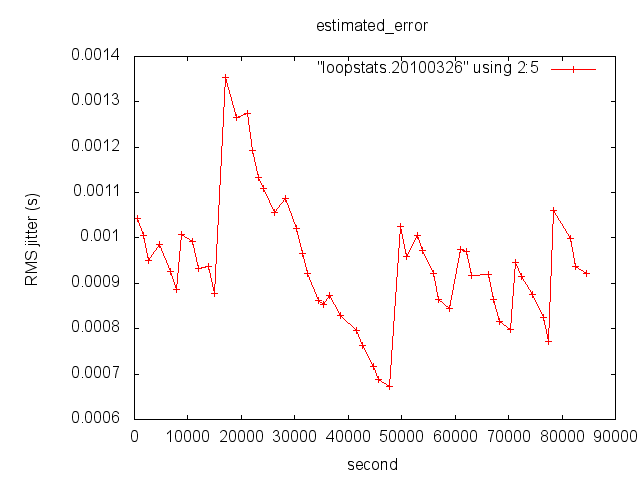

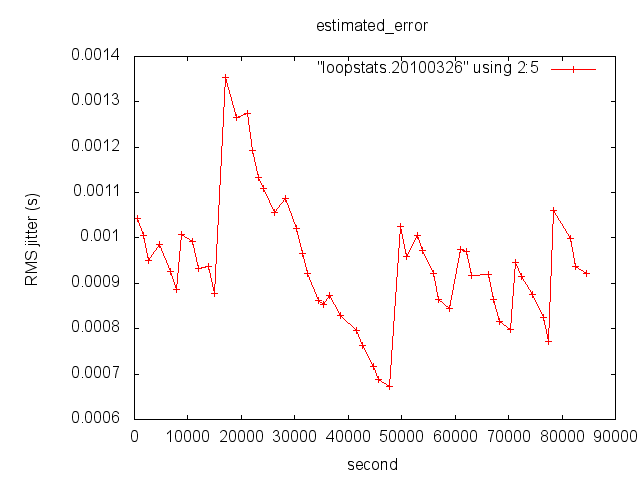

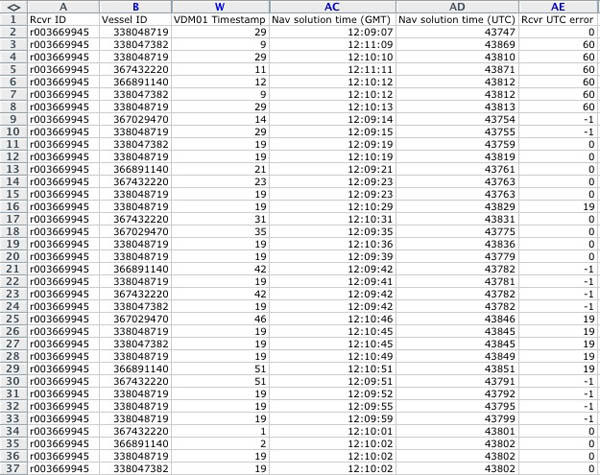

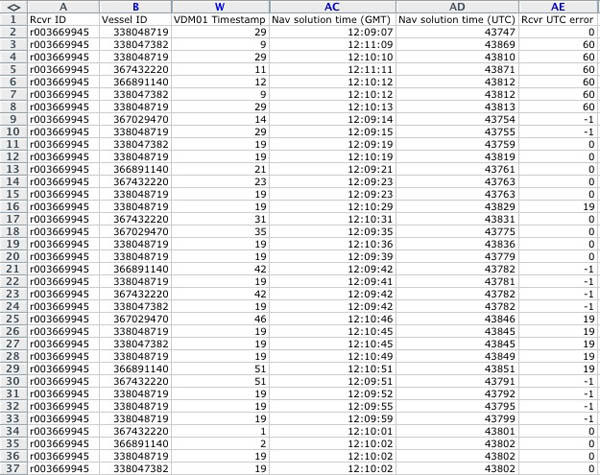

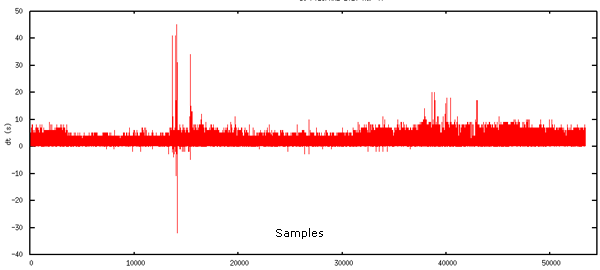

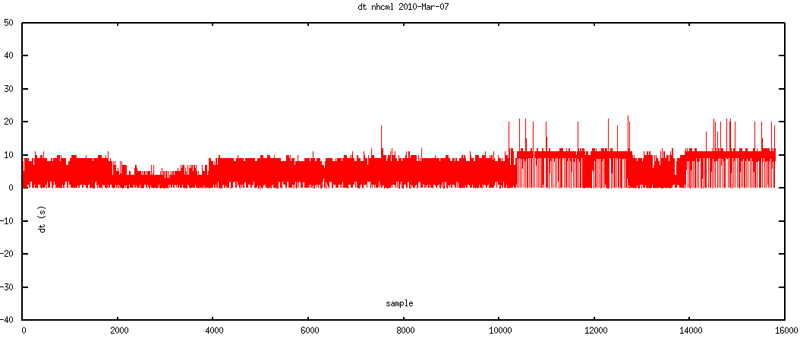

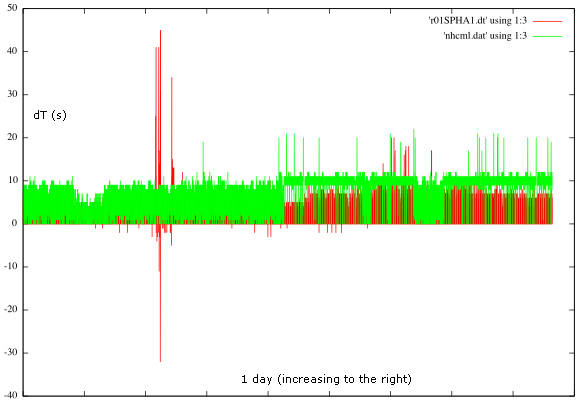

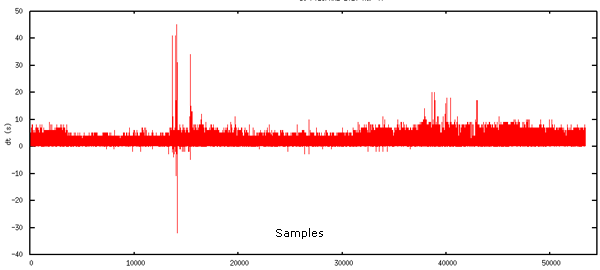

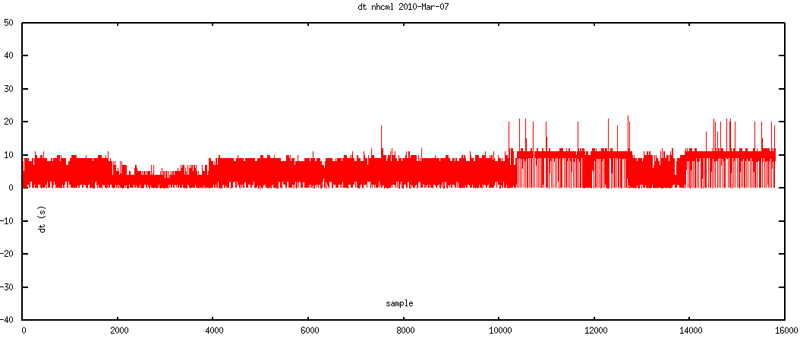

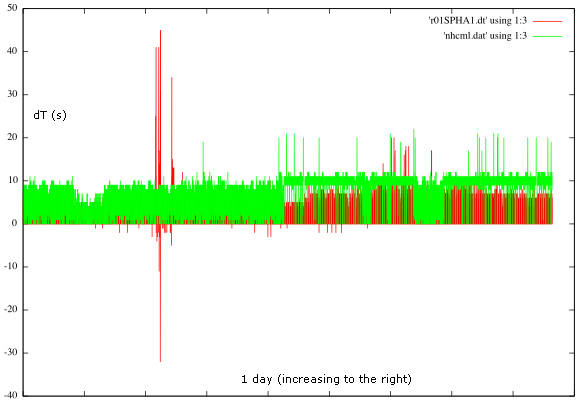

First, take a look at the Scituate and Cape Ann receive stations. These two stations are running NTP. I am plotting the local (UNH) timestamp for when a packet gets logged minus the USCG receive station timestamp. If timing were perfect, the positive times would be the network delay for the message to travel from the receive station, through the USCG network, and back through the internet to UNH. The width of the graph would be the jitter in the network path. There is a first clue in this plot that this is not totally the case: there are a few times that dip below zero by a quarter second. Times below zero imply packets that were received in the future. NTP should be good to at least 100ms (probably way better), so this is a hint that I am seeing something wrong.

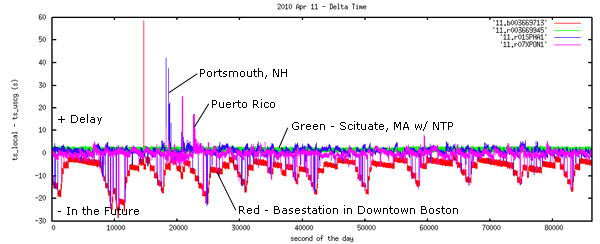

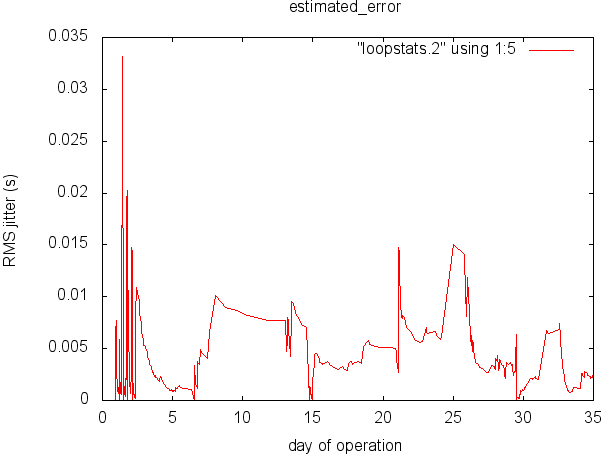

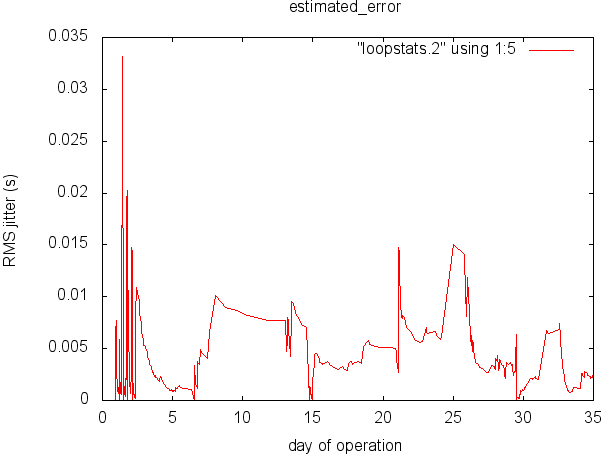

Next, let's take a look at 4 stations. I've included a basestation from downtown Boston, the Scituate receiver for comparison, Portsmouth, NH, because that is the closest station to one of mine (< 100m antenna to antenna), and Puerto Rico (which might have longer network delays). Only Scituate is running NTP. The rest are running W32Time. Boston looks to be consistently the worst, recording messages 10-20s in the future.

These plots tell me that NTP really is helping the situation. A rough guess is that it takes an average of 1.3 seconds for a message to go from the receiver back through the NAIS network to UNH. The basestation's average delta is -7.4 seconds. Combining the basestation info with the delay time from Scituate, I would expect the basestation's clock to be off by an average of 8.7 seconds (the 1.3 s network delay plus the 7.4 s by which its messages appear to arrive from the future), with a standard deviation of 4.5 seconds. When you consider that the time error is non-monotonic and that the full range of the error can be more than a minute and a half (I have seen up to 480 seconds), it makes time very difficult to deal with. I still worry about time with the stations running NTP because of how the AISSource software uses ZDA sentences to calculate a clock offset once a minute; Java and Windows issues can inject noticeable time problems.

And for completeness, here is the code to make the plot with two sample log lines.


```python
#!/usr/bin/env python
# 2010-Apr-15
'''
!AIVDM,1,1,,A,15N1BigP00JrmgJH?FduI?vT0H:e,0*6A,b003669713,1270944091,runhgall-znt,1270944079.61
!AIVDM,1,1,,B,15N1doPP00JrmvjH?GdIkwv`0@:u,0*34,b003669713,1270944091,runhgall-znt,1270944079.61
'''
from aisutils.uscg import uscg_ais_nmea_regex

ts_min = None
for line_num, line in enumerate(file('11')):
    if 'AIVDM' not in line: continue
    ts_local = float(line.split(',')[-1])
    if ts_min is None:
        ts_min = ts_local
    try:
        match = uscg_ais_nmea_regex.search(line).groupdict()
    except AttributeError:
        continue
    ts_uscg = float(match['timeStamp'])
    print '%.2f %.2f %s' % (ts_local - ts_min, ts_local - ts_uscg, match['station'])
```
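To get summary numbers like the average delay and spread quoted above, the same deltas can be collected per station. This Python 3 sketch assumes the field layout of the two sample lines in the docstring (station in the 8th comma-separated field, USCG timestamp in the 9th, local timestamp last) instead of using uscg_ais_nmea_regex, so it is only a rough stand-in for the author's parser; `station_delay_stats` is a hypothetical name.

```python
import statistics
from collections import defaultdict


def station_delay_stats(lines):
    """Map station -> (count, mean, population std dev) of local minus USCG time."""
    deltas = defaultdict(list)
    for line in lines:
        if 'AIVDM' not in line:
            continue
        fields = line.strip().split(',')
        if len(fields) < 10:
            continue  # not the assumed layout
        try:
            ts_uscg = float(fields[8])
            ts_local = float(fields[-1])
        except ValueError:
            continue
        deltas[fields[7]].append(ts_local - ts_uscg)
    return {station: (len(d), statistics.mean(d), statistics.pstdev(d))
            for station, d in deltas.items()}
```

A negative mean, as for the two sample lines, means the station's timestamps are ahead of the local clock.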

04.14.2010 08:29

Healy's science sensors back in the feed

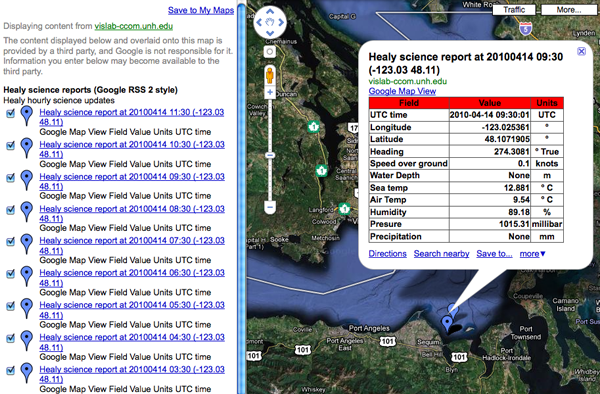

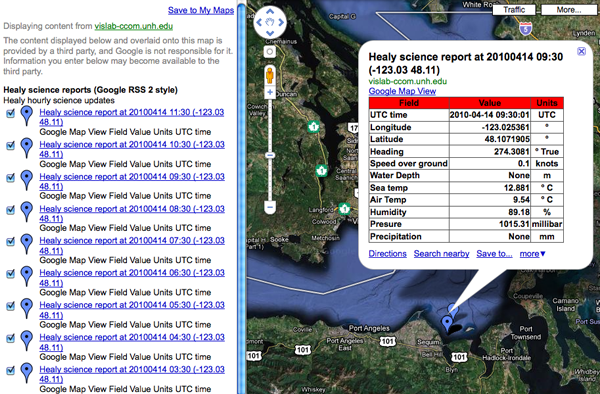

If anyone is interested in a fun dataset to make visualizations from, I've put up a copy of 20100417-healy-sqlite.db3.bz2.

There are 2756 summary reports in the database from 2009-04-28 through to today (with a big hole for the time in dry dock). I would be really excited if someone would create code to produce a visualization using SIMILE Timeplot.

Hopefully, I will get a chance to convert my code to using spatialite now that BABA has packaged it for fink!

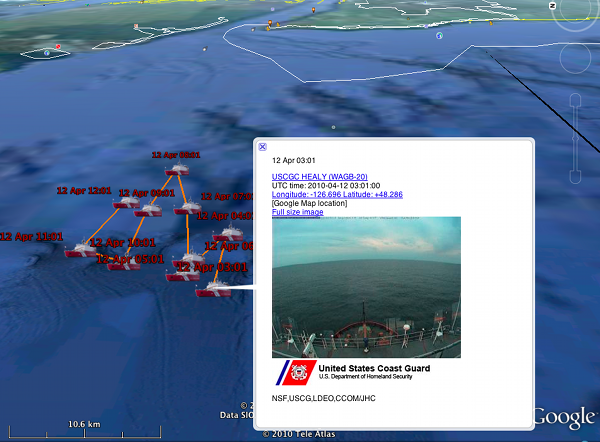

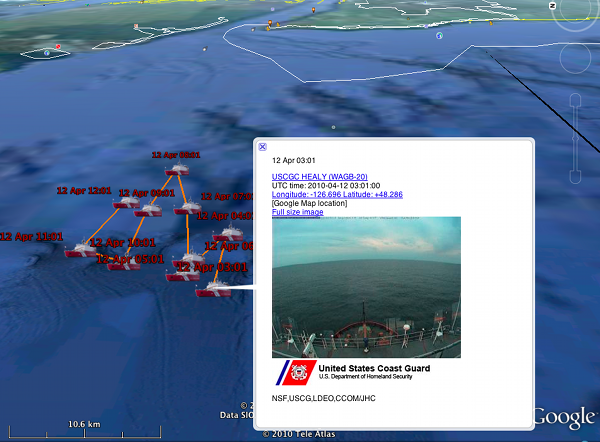


```sql
CREATE TABLE healy_summary (
    heading REAL,   -- ashtech PAT heading in degrees
    lon REAL,       -- GGA position
    lat REAL,       -- GGA position
    ts TIMESTAMP,   -- ZDA
    depth REAL,     -- CTR depth_m in meters
    air_temp REAL,  -- ME[ABC] Deg C
    humidity REAL,  -- ME[ABC]
    pressure REAL,  -- ME[ABC] barometric pressure_mb (millibar)
    precip REAL,    -- ME[ABC] millimeters
    sea_temp REAL,  -- STA Deg C
    speed REAL      -- VTG speed knots
);
```

The science feed now has speed over ground (SOG), sea and air temperatures, humidity, and pressure coming back (healy-science-google.rss), e.g.:


| Field | Value | Units |
|---|---|---|
| UTC time | 2010-04-14 00:30:01 | UTC |
| Longitude | -122.9949 | ° |
| Latitude | 48.1889 | ° |
| Heading | None | ° True |
| Speed over ground | 9.6 | knots |
| Water Depth | None | m |
| Sea temp | 12.59 | ° C |
| Air Temp | 12.11 | ° C |
| Humidity | 70.46 | % |
| Pressure | 1011.38 | millibar |
| Precipitation | None | mm |

I actually prefer the Google Maps view...
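For anyone trying the database, here is a minimal Python 3 query sketch against the healy_summary schema above: it groups reports by UTC day and averages the temperatures. It assumes `ts` is stored as ISO-8601 text such as '2010-04-14 00:30:01' (the schema only declares it TIMESTAMP). `daily_means` and `demo_db` are hypothetical helpers, and the demo row is the sample report from the table.

```python
import sqlite3


def daily_means(conn):
    """Per-day averages of sea and air temperature plus the number of reports."""
    sql = ('SELECT date(ts) AS day, AVG(sea_temp), AVG(air_temp), COUNT(*) '
           'FROM healy_summary GROUP BY day ORDER BY day')
    return conn.execute(sql).fetchall()


def demo_db():
    """In-memory database holding the one sample report shown in the table."""
    conn = sqlite3.connect(':memory:')
    conn.execute('CREATE TABLE healy_summary (heading REAL, lon REAL, lat REAL, '
                 'ts TIMESTAMP, depth REAL, air_temp REAL, humidity REAL, '
                 'pressure REAL, precip REAL, sea_temp REAL, speed REAL)')
    conn.execute('INSERT INTO healy_summary VALUES (?,?,?,?,?,?,?,?,?,?,?)',
                 (None, -122.9949, 48.1889, '2010-04-14 00:30:01', None,
                  12.11, 70.46, 1011.38, None, 12.59, 9.6))
    return conn
```

With the real file, `sqlite3.connect('20100417-healy-sqlite.db3')` (after bunzip2) would replace `demo_db()`. Missing values such as the None depth are stored as NULL and ignored by AVG.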

04.13.2010 15:16

python's datetime.datetime and datetime.date

This behavior in python (I only tried 2.6.4) surprised me:

```
ipython
In [1]: import datetime
In [2]: d1 = datetime.datetime.utcnow()
In [3]: d2 = datetime.date(2010,4,13)
In [4]: type d1
======> type(d1)
Out[4]: <type 'datetime.datetime'>
In [5]: type d2
======> type(d2)
Out[5]: <type 'datetime.date'>
In [6]: isinstance(d1,datetime.datetime)
Out[6]: True
In [7]: isinstance(d2,datetime.datetime)
Out[7]: False
In [8]: isinstance(d1,datetime.date)
Out[8]: True
# Really? A datetime.datetime is an instance of datetime.date?
In [9]: isinstance(d2,datetime.date)
Out[9]: True
```

There must be some inheritance implied in there. I could break into this with more powerful python introspection, but I am not up for exploring it in more detail right now.
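The inheritance is real: in the standard library, datetime.datetime is a subclass of datetime.date, which is why isinstance(d1, datetime.date) is True. A short Python 3 check, plus a hypothetical helper (`is_plain_date`) for when a plain date has to be told apart from a datetime:

```python
import datetime

# datetime.datetime derives from datetime.date, so every datetime "is a" date.
print(issubclass(datetime.datetime, datetime.date))  # True
print(datetime.datetime.__mro__)  # datetime, then date, then object


def is_plain_date(obj):
    """True for datetime.date objects that are not datetime.datetime objects."""
    return isinstance(obj, datetime.date) and not isinstance(obj, datetime.datetime)
```

An exact type test, `type(obj) is datetime.date`, also works but rejects any other subclass of date.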

04.13.2010 15:12

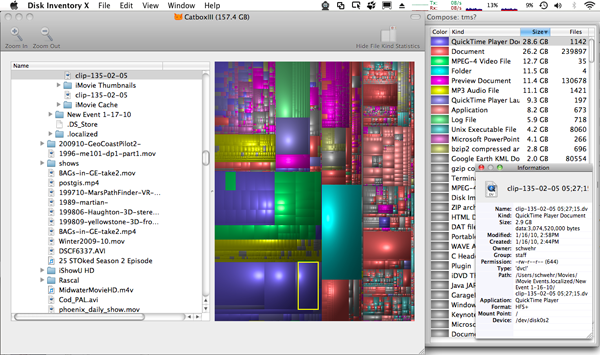

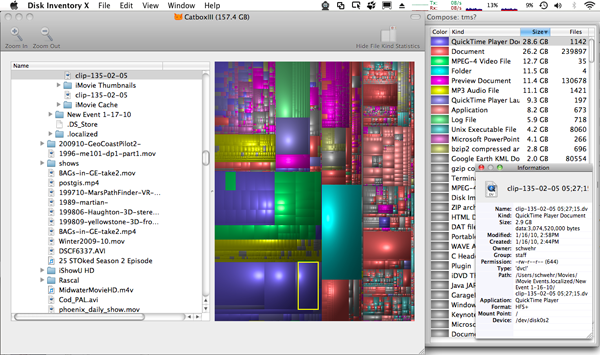

Tracking disk space usage over time on the Mac

We just had a Mac where the disk was suddenly full. I never really figured out what was chewing up all the disk space, but after spending a while with du, df, lsof, find, ls -a, and xargs, I learned some really useful Mac-specific tricks in the process. With some help from the fink community (thanks Jack!), I tried out Disk Inventory X. It takes a while to plow through your disk, but then it is super easy to see what's going on space-wise right now.

Then, I had the thought to use Time Machine to watch the differences between the backups. That should definitely record what just started eating up disk space (assuming it wasn't in a part of the disk that was marked to not be backed up). TimeTracker (at charlessoft.com) does a great job of giving a quick view into what has changed.

I usually prefer to work on the command line. While tms (described here) looks like an awesome command line utility, the software is closed and appears to be dead. However, it looks like timedog might be really useful in the future. And timedog is open source Perl, so it won't just up and disappear.

See also: 10.5: Compare Time Machine backups to original drive System 10.5 [macosxhints] and my question on the forums
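Along the same lines as diffing backups, here is a rough Python 3 sketch (hypothetical helpers, not tms, timedog, or TimeTracker) that records the size of each top-level entry under a directory, so that two snapshots taken at different times can be compared to see what grew. Sizes are apparent file sizes from lstat, not allocated blocks, symlinks are not followed, and unreadable files are skipped.

```python
import os


def entry_sizes(root):
    """Total apparent size in bytes of each file or directory directly under root."""
    sizes = {}
    with os.scandir(root) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                total = 0
                for dirpath, _dirnames, filenames in os.walk(entry.path):
                    for name in filenames:
                        try:
                            total += os.lstat(os.path.join(dirpath, name)).st_size
                        except OSError:
                            pass  # vanished or unreadable
                sizes[entry.name] = total
            else:
                try:
                    sizes[entry.name] = entry.stat(follow_symlinks=False).st_size
                except OSError:
                    pass
    return sizes


def growth(before, after, min_bytes=1):
    """(name, change in bytes) for entries that changed by at least min_bytes, biggest growth first."""
    changes = []
    for name in set(before) | set(after):
        delta = after.get(name, 0) - before.get(name, 0)
        if abs(delta) >= min_bytes:
            changes.append((name, delta))
    return sorted(changes, key=lambda item: item[1], reverse=True)
```

Saving `entry_sizes(some_dir)` once a day (for example with json) and running `growth` on two saved snapshots points at the directory to drill into next.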


04.13.2010 10:49

Healy in Google Earth Oceans layer (again)

Now that I've fixed my feed code for the Healy, Google has their side back in action. The Healy is now updating in the Oceans layer of Google Earth!

04.12.2010 14:48

Processing large amounts of AIS information

I just got a request from Bill Holton for thoughts about how to go about tackling large amounts of AIS vessel position information using noaadata. This fits in well with a paper that I am working on right now.


... I have downloaded the noaadata-py tools you have graciously put together and have the code working to import the data into Postgres\Postgis. However, I do have a question regarding the most efficient way to import the data and was hoping you might be able to lead me in the right direction. I need to import the coordinates and ship type from all stations to produce ship counts per grid cell. However, it is taking over 24 hours to import a single day into the database using the ais-process-day.bash script. I have also tried generating a csv file of just the information I need from the rawdata, but it was taking longer than 24 hours to convert a single day of rawdata. This would not be an issue if I was only working with a few days of data, but the project requires analysis on a year's worth of data. Do you have any suggestions for importing the data more effectively?

Here are some thoughts on how to get through the data more efficiently and some of the processing issues that come up:

Consider using something other than noaadata. My software is research code, and I have some very fast computers, so it is optimized for my time rather than for the compute power required. Alternatives include (but are not limited to) GPSD, devtools/ais.py in GPSD if you want to stick with pure python, FreeAIS, etc. You can purchase a C library to parse AIS. Or better yet, write your own if you are a coder.

If you are sticking with noaadata, first remove all messages that do not matter. You can use egrep 'AIVDM,1,1,,[AB],[1-3]' to extract only the position messages. Then consider running ais_nmea_remove_dups.py with a lookback of something like 5-10K lines (-l 10000). Finally, consider using ais_build_sqlite rather than ais_build_postgis if you don't need PostGIS; sqlite is definitely faster for just crunching raw numbers of lines. Also, make sure that you replace BitVector.py in the ais directory with the latest version, as Avi has released version 1.5, which is much faster.
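For readers who would rather stay in Python for these first two steps, here is a hedged Python 3 sketch of the same pipeline: the egrep pattern as a regular expression, followed by a duplicate filter that remembers the last `lookback` distinct payloads. It is an illustration, not the implementation of ais_nmea_remove_dups.py; keying duplicates on the payload field alone is an assumption here (the same message heard by several stations differs only in the station and timestamp fields), and `position_messages` and `remove_dups` are hypothetical names.

```python
import re
from collections import deque

# Same pattern as the egrep example: single-sentence AIVDM messages of type 1-3.
POSITION_RE = re.compile(r'AIVDM,1,1,,[AB],[1-3]')


def position_messages(lines):
    """Yield only the lines that match the position-report pattern."""
    for line in lines:
        if POSITION_RE.search(line):
            yield line


def remove_dups(lines, lookback=10000):
    """Drop lines whose payload (6th field) is among the last `lookback` kept payloads."""
    recent = deque()  # payloads in the order they were kept
    seen = set()      # the same payloads, for fast membership tests
    for line in lines:
        fields = line.split(',')
        if len(fields) < 7:
            continue
        payload = fields[5]
        if payload in seen:
            continue
        yield line
        recent.append(payload)
        seen.add(payload)
        if len(recent) > lookback:
            seen.discard(recent.popleft())
```

Both functions are generators, so `remove_dups(position_messages(open(path)))` streams a large log without holding it in memory.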

If you want to stay with noaadata and are willing to write a little bit of python, you might consider looking in ais_msg_1.py and converting only the minimum number of NMEA characters, decoding just the fields you need.
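Here is what decoding just the needed fields can look like, as a Python 3 sketch rather than the ais_msg_1.py code. It converts only the first 20 payload characters (enough to reach bit 115), then pulls out the message type, the MMSI, and the signed longitude and latitude, which message types 1-3 store as two's-complement integers in 1/10000 minute. The bit offsets follow the published message 1-3 layout; the helper names are hypothetical.

```python
def sixbit(ch):
    """Value (0-63) of one AIS payload character."""
    val = ord(ch) - 48
    if val >= 40:
        val -= 8
    return val


def payload_to_int(payload, nbits):
    """First nbits of the payload as one unsigned integer (message bit 0 most significant)."""
    nchars = (nbits + 5) // 6
    if len(payload) < nchars:
        raise ValueError('payload too short for %d bits' % nbits)
    value = 0
    for ch in payload[:nchars]:
        value = (value << 6) | sixbit(ch)
    return value >> (nchars * 6 - nbits)  # drop bits past nbits


def get_field(value, nbits, start, width, signed=False):
    """Extract bits start..start+width-1 from an nbits-long integer."""
    raw = (value >> (nbits - start - width)) & ((1 << width) - 1)
    if signed and raw & (1 << (width - 1)):
        raw -= 1 << width  # two's complement
    return raw


def decode_position(payload):
    """(message type, MMSI, lon, lat) from a type 1-3 payload; lon/lat in degrees."""
    nbits = 116  # bits 0-115 cover everything up to the end of latitude
    v = payload_to_int(payload, nbits)
    msg_type = get_field(v, nbits, 0, 6)
    mmsi = get_field(v, nbits, 8, 30)
    lon = get_field(v, nbits, 61, 28, signed=True) / 600000.0
    lat = get_field(v, nbits, 89, 27, signed=True) / 600000.0
    return msg_type, mmsi, lon, lat
```

Fields past bit 115 (course, heading, timestamp) are never touched, which is the whole point of converting the minimum number of characters.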

Remember to be very careful about using raw points from AIS. There are all sorts of aliasing issues that come from transmission rates as ships move differently, aspect-oriented transmission errors (e.g. smoke stacks blocking VHF), propagation changes, timestamp errors (or lack of timestamping), ships with bad GPSs, ships with bogus MMSIs, receiving the same message from multiple stations, and so on.

04.12.2010 10:12

nowCOAST WMS feeds now public (and on the iPhone)

The NOAA nowCOAST team has been hard at work and has just been allowed to make their WMS feeds public! On ERMA, we have been using these feeds for quite a while, and they are fantastic. The details are on the nowCOAST Map Services page.

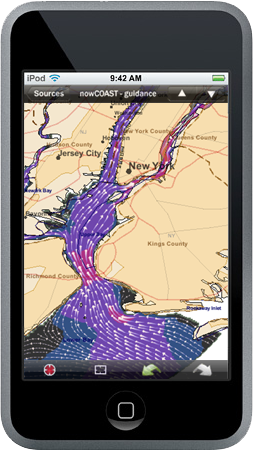

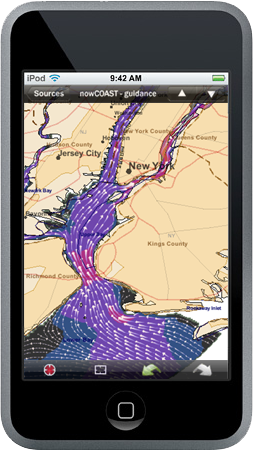

Thanks to Jason for this image of Colin Ware's FlowViz (Colin runs the Vis Lab at CCOM) being fed through nowCOAST, exported as WMS, and viewed on the iPhone via WhateverMap. The iPhone specific instructions are here: Connecting to nowCOAST's WMS from an iPhone or iPod Touch

The announcement:


nowCOAST WMS Announcement March 31, 2010

On March 31st, NOAA/National Ocean Service enabled OpenGIS Web Map Service (WMS) access to the map layers of NOAA's nowCOAST (http://nowcoast.noaa.gov), a GIS-based web mapping portal to surface observations and NOAA forecast products for U.S. coastal areas. A WMS is a standards-based web interface to request geo-registered map images for use by desktop, web, and handheld device clients (e.g. iPod). The nowCOAST WMS is a continuing effort to support NOAA's IT goals to "create composite geospatial data products that span NOAA Line and Program office missions" and "remove the physical barriers to geospatial data access with NOAA". WMS is a recommended web service of NOAA Integrated Ocean Observing System Office's (IOOS) Data Integration Framework.

The nowCOAST WMS are separated into six different services, grouped by product or layer type. The six services are observations (obs), analyses, guidance, weather advisories, watches, warnings (wwa), forecasts, and geo-referenced hyperlinks (geolinks). The "obs" service contains near real-time observation layers (e.g. weather radar mosaic; GOES satellite cloud imagery; surface weather/ocean observations). The "analyses" service contains gridded weather and oceanographic analysis layers (e.g. global SST analysis; surface wind analyses). The "guidance" service provides nowcasts from oceanographic forecast modeling systems for estuaries and Great Lakes and coastal ocean operated by NOAA and U.S. Navy. The "wwa" service contains watches, warnings and advisory layers (e.g. tropical cyclone track & cone of uncertainty, short-duration storm-based warnings). The "forecast" service includes gridded surface weather and marine forecast layers (e.g. National Digital Forecast Database's surface wind and significant wave forecasts). The "geolinks" service contains nowCOAST's geo-referenced hyperlinks to web pages posting near-real-time observations and forecast products.

These six services can be found at http://nowcoast.noaa.gov/wms/com.esri.wms.Esrimap/name_of_service - where 'name of service' is one of the following: obs, analyses, guidance, wwa, forecasts or geolinks. For example, http://nowcoast.noaa.gov/wms/com.esri.wms.Esrimap/guidance contains forecast guidance from NOS and Navy oceanographic forecast modeling systems. For further information about nowCOAST WMS and how to use it, please see http://nowcoast.noaa.gov/help/mapservices.shtml?name=mapservices

nowCOAST integrates near-real-time surface observational, satellite imagery, forecast, and warning products from NOS, NWS, NESDIS, and OAR and provides access to the information via its web-based map viewer and mapping services. nowCOAST was developed and is operated by NOS' Coast Survey Development Laboratory.
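As a small illustration of the URL pattern in the announcement, here is a Python 3 sketch that builds a WMS GetCapabilities request (which lists a service's layers) for one of the six services. SERVICE, VERSION, and REQUEST are standard OGC WMS parameters; using version 1.1.1 is an assumption, these 2010 endpoints may since have moved, and `capabilities_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

BASE_URL = 'http://nowcoast.noaa.gov/wms/com.esri.wms.Esrimap/'
SERVICES = ('obs', 'analyses', 'guidance', 'wwa', 'forecasts', 'geolinks')


def capabilities_url(service, version='1.1.1'):
    """GetCapabilities URL for one nowCOAST WMS service."""
    if service not in SERVICES:
        raise ValueError('unknown nowCOAST service: %r' % (service,))
    params = {'SERVICE': 'WMS', 'VERSION': version, 'REQUEST': 'GetCapabilities'}
    return BASE_URL + service + '?' + urlencode(params)
```

The XML returned by that request names the layers that a WMS client (such as an iPhone map viewer) can then ask for with GetMap.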

04.12.2010 09:31

Home made bacon

Andy and I went over to the butcher's workshop out in Pittsfield yesterday to pick up the results from two pigs. Monica and I are trying our hands at home made bacon. We've started with 1/3rd of the pork belly shown here...

04.12.2010 09:11

NTP and DDS

I'm not sure how I missed Dale's blog posts about these two topics and his links to the Dr. Dobb's articles...

The first, Dale's Linux time keeping, points to an article on NTP: Testing and Verifying Time Synchronization - NTP versus TimeKeeper. I see no mention of iburst in the article, which would likely have gotten the systems synchronized much more quickly. It would have been nice if he had posted the code he used for the article.

The second is Dale's OMG Real-time Data Distribution Service (DDS). I spent a number of years in the late 90's working on the parent of this standard, the Network Data Delivery Service (NDDS) by RTI. Dale points to this Dr. Dobb's article: The Data Distribution Service for Real-Time Systems: Part 1 - DDS is the standard for real-time data distribution. DDS is pretty powerful stuff.


04.12.2010 08:55

Healy back underway

The ice breaker USCGC Healy is back underway. The feed is not yet back in the Oceans layer of Google Earth, but you can download the updating KML and watch the Healy getting back into shape after being in the shipyard. The feeds are online, and you are welcome to check out the healy-py source code. The science gear isn't online yet, and the images may not always be updating as the team works on the systems. I just do the visualizations; it is the LDEO folks who do all the work.

04.09.2010 13:23

another CCOM blogger!

Jonathan Beaudoin has just joined CCOM and today I found out that in addition to being a new Mac user, he has a blog!

2bitbrain.blogspot.com


04.08.2010 19:32

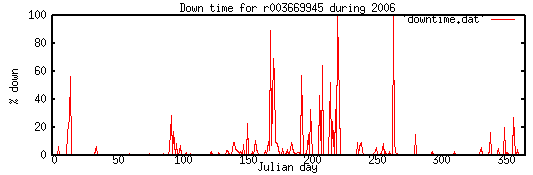

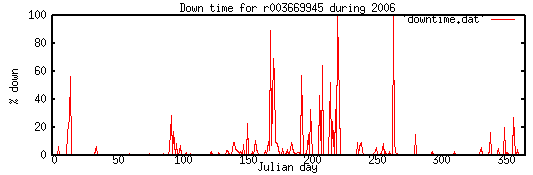

Station downtime by day for a year

ais_info.py can now create a graph of percent downtime by day for a year. This is based on a gap of 6 minutes or larger indicating system downtime. The command line options will likely be a bit different when I clean up the code.

```
ais_info.py --min-gap=360 2006-r45.log
```
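A minimal sketch of how a per-day downtime figure could be computed from message receive times. This is not the ais_info.py code; the function names are hypothetical, and it assumes naive UTC datetimes sorted in time order, with any gap of at least min_gap seconds counted entirely as downtime. Gaps that cross midnight are split so each day gets its own share.

```python
import datetime
from collections import defaultdict


def daily_downtime(times, min_gap=360):
    """Return {date: seconds of downtime} for a sorted list of datetimes.

    Any gap between consecutive messages of at least min_gap seconds is
    counted as downtime in full; shorter gaps are treated as normal quiet time.
    """
    down = defaultdict(float)
    for prev, cur in zip(times, times[1:]):
        if (cur - prev).total_seconds() < min_gap:
            continue
        # Split the gap at each midnight so every day gets its own share.
        start = prev
        while start < cur:
            midnight = datetime.datetime.combine(
                start.date() + datetime.timedelta(days=1), datetime.time())
            end = min(cur, midnight)
            down[start.date()] += (end - start).total_seconds()
            start = end
    return dict(down)


def percent_down(down_by_day):
    """Convert seconds of downtime per day into percent of an 86400 s day."""
    return dict((day, 100.0 * secs / 86400) for day, secs in down_by_day.items())


t = datetime.datetime
times = [t(2006, 1, 1, 23, 0), t(2006, 1, 1, 23, 3), t(2006, 1, 2, 1, 0)]
print(daily_downtime(times))
# {datetime.date(2006, 1, 1): 3420.0, datetime.date(2006, 1, 2): 3600.0}
```

The 3 minute gap is ignored, while the 117 minute gap is split into 57 minutes on January 1 and 60 minutes on January 2. Time before the first and after the last message of the log is not counted in this sketch.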

04.08.2010 17:25

Simple Python generator for date ranges

One thing that is a bit weird with Python's datetime module is that it is hard to iterate over a range of days. I would think that this should be trivial, but I can't find the simple pythonic solution. However, this does give me a chance to create a "generator" in Python that is much simpler than the ones I've created in the past. Hopefully everyone can follow this one.

```python
#!/usr/bin/env python
# Python 2.4 or newer (also runs under Python 3)

import datetime

def date_generator(start_date, end_date):
    '''Yield each day from start_date up to, but not including, end_date.'''
    cur_date = start_date
    dt = datetime.timedelta(days=1)
    while cur_date < end_date:
        yield cur_date
        cur_date += dt

for d in date_generator(datetime.datetime(2006,11,27), datetime.datetime(2006,12,2)):
    print(d)

print('case 2')

for d in date_generator(datetime.datetime(2006,12,27), datetime.datetime(2007,1,2)):
    print(d)

# Alternative without a generator:
start_date = datetime.date(2006,12,29)
end_date = datetime.date(2007,1,2)
for a_date in [datetime.date.fromordinal(d_ord) for d_ord in range(start_date.toordinal(), end_date.toordinal())]:
    print(a_date)
```

The results of running this wrap nicely over month and year boundaries.

```
2006-11-27 00:00:00
2006-11-28 00:00:00
2006-11-29 00:00:00
2006-11-30 00:00:00
2006-12-01 00:00:00
case 2
2006-12-27 00:00:00
2006-12-28 00:00:00
2006-12-29 00:00:00
2006-12-30 00:00:00
2006-12-31 00:00:00
2007-01-01 00:00:00
2006-12-29   # This is using datetime.date without a generator
2006-12-30
2006-12-31
2007-01-01
```

For other examples of iterating over date ranges, take a look at: Iterating through a range of dates in Python [stack overflow]
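Another common idiom computes the number of days up front and offsets from the start date with a timedelta. A short sketch (daterange is my own name for it). One caveat: (end - start).days truncates any partial day, so with datetime values whose times of day differ, the last partial day is dropped, whereas the while loop above would still yield it.

```python
import datetime


def daterange(start, end):
    """Yield each day from start up to, but not including, end."""
    for n in range((end - start).days):
        yield start + datetime.timedelta(days=n)


days = list(daterange(datetime.date(2006, 12, 29), datetime.date(2007, 1, 2)))
for day in days:
    print(day)
```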

04.08.2010 13:49

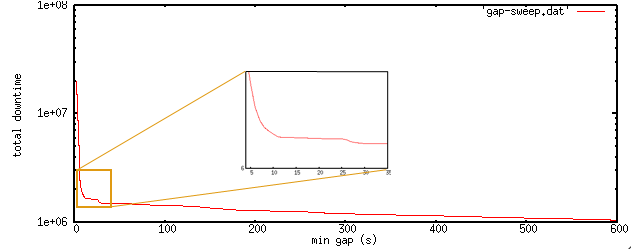

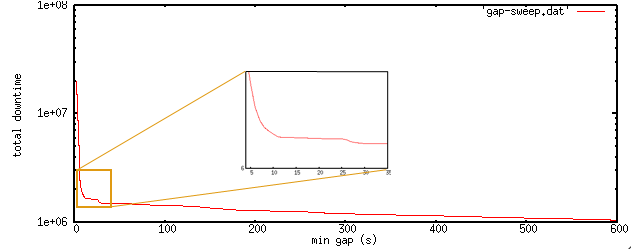

Downtime estimate with minimum gap time

Looking at my estimate of downtime, it is primarily controlled by how long a stretch with no messages has to be before I consider the station offline. I am looking at a threshold somewhere under 10 minutes of no receives. There should pretty much always be a vessel or basestation up and broadcasting in the Boston area. I figured the best way to make sure that my 5 minute first guess made sense was to see how the minimum gap time changed the downtime estimate. As the minimum gap starts increasing, there are some really huge shifts in the estimated downtime. Here are the first few values:


| Minimum gap (s) | Estimated downtime (s) |
|---:|---:|
| 2 | 19862872 |
| 3 | 11486556 |
| 4 | 6576780 |
| 5 | 3933032 |
| 6 | 2729717 |
| 7 | 2206115 |
| 8 | 1974632 |
| 9 | 1843744 |
| 10 | 1766929 |

Going from 2 to 8 seconds drops the estimated downtime by an order of magnitude, from about 19.9M seconds down to about 2.0M seconds. Here is a log plot showing that there are inflection points at 12 seconds and at 26-30 seconds. I am not sure what that says about the system, but here are the numbers for thresholds that I think might make sense in terms of timings:

| Min gap (min) | Min gap (s) | Downtime (s) | Uptime (of 365 days) | Reason |
|---:|---:|---:|---:|---|
| 1.5 | 90 | 1440037 | 95.4% | Maximum negative time jump seen |
| 3 | 180 | 1301964 | 95.9% | Class A/B retransmit when at anchor |
| 5 | 300 | 1211944 | 96.2% | Kerberos max time spread for W32Time |
| 6 | 360 | 1169338 | 96.3% | Kerberos 5 minutes + 1 minute for AISSource time offset |
| 8 | 480 | 1117793 | 96.5% | Timing issue seen with Hawkeye basestations |
| 9 | 540 | 1082542 | 96.6% | Hawkeye timing issue + 1 minute for AISSource time offset |

That gives me a sense that this station was operational somewhere in the range of 95.4% to 96.6% of the year. With three partially overlapping stations, the hope would be that for the area of the SBNMS, the uptime would be even greater. Except for major storm events, at least one of the stations should be up, with Cape Ann and Cape Cod being more prone to going down. However, without good time correlation between the stations, I am not going to bother attempting an analysis of overall uptime. I will just stick to per-station statistics.

I am going with 6 minutes as my threshold for being offline for these NAIS stations. For a receiver in a busy port with proper time control, a threshold in the range of 21 to 60 seconds would make more sense. If there is a basestation in the area, ITU-R M.1371-3 says that it should be broadcasting every 10 seconds (Table 2, page 4). If you can consistently hear that basestation, then 21 seconds should be good: missing a single message 4 basestation report leaves a 20 second gap, so 21 seconds tolerates an occasional dropped report.
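A minimal sketch of the minimum-gap sweep, assuming message receive times are available as a sorted list of seconds (e.g. UNIX timestamps) and that any gap at least min_gap long counts entirely as downtime. This is not the ais_info.py code; the function names are hypothetical. The last line checks the uptime conversion against the 6 minute row of the table, using a 365-day year.

```python
def total_downtime(times, min_gap):
    """Sum the gaps (seconds) between consecutive receive times that are >= min_gap.

    times: sorted message receive times in seconds, e.g. UNIX timestamps.
    """
    return sum(b - a for a, b in zip(times, times[1:]) if b - a >= min_gap)


def uptime_percent(downtime, period=365 * 86400):
    """Percent of the period (default: a 365-day year) not counted as down."""
    return 100.0 * (1.0 - float(downtime) / period)


def sweep(times, thresholds):
    """Return (threshold, downtime, uptime %) for each candidate min_gap."""
    rows = []
    for min_gap in thresholds:
        down = total_downtime(times, min_gap)
        rows.append((min_gap, down, uptime_percent(down)))
    return rows


# 1169338 s of downtime in a 365-day year works out to about 96.3% uptime.
print("%.1f%%" % uptime_percent(1169338))
```

Calling sweep(times, range(2, 11)) on a year of receive times would give the kind of downtime-versus-threshold list shown in the first table, and a log plot of that output is a quick way to spot the inflection points.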

04.08.2010 10:58

Yet more on time with Windows and Java

BRC, AndyM, Jordan, and I have been bouncing some emails back and forth about timing, and I have a few final notes before I continue on with my AIS analysis. First, some notes about W32time. If I had more energy on this topic, I would turn on logging for a Windows machine running W32time and see how it was actually slewing the clock. Experiences by others in the past have indicated that, "because it's running SNTP rather than full XNTP (now called just NTP now that NTP is at version 4.x, right?), the corrections can also be far apart and radical". There is an MSDN blog post on Configuring the Time Service: Enabling the Debug Log. With that log, I could then look at the frequency and size of the time changes. There is also a blog post on TechNet, High Accuracy W32time Requirements, that explains why W32time does not support high accuracy timing. In that article, I find the source of the 5 minute jumps:

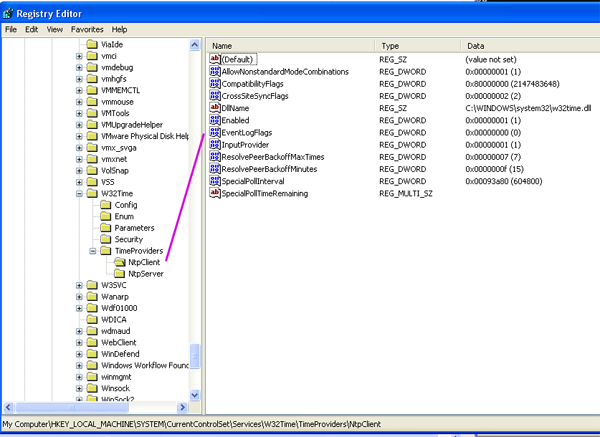

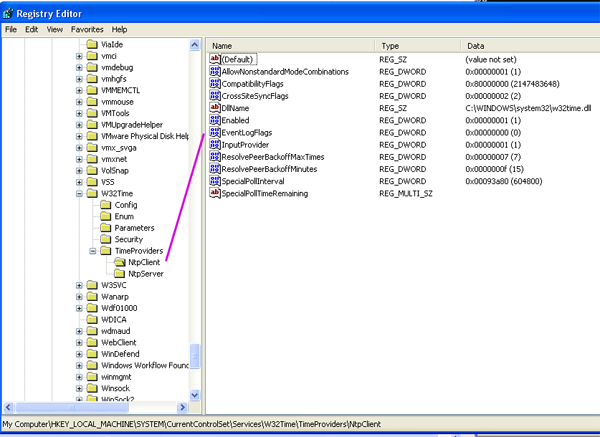


With the introduction to W32Time, the W32Time.dll was developed for Windows 2000 and up to support a specification by the Kerberos V5 authentication protocol that require clocks on a network to be "synchronized". I'll save you from the very long (but interesting) read on RFC 1510 Kerberos documentation and pull out what is relevant.

RFC 1510: "If the local (server) time and the client time in the authenticator differ by more than the allowable clock skew (e.g., 5 minutes), the KRB_AP_ERR_SKEW error is returned."
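The skew rule in that RFC 1510 excerpt boils down to a simple comparison. This is a toy Python sketch, not real Kerberos code: the function name and the "OK" return value are made up for illustration, and the 5 minute default is just the example value from the RFC text (real Kerberos setups make the allowed skew configurable).

```python
import datetime

# Example allowable clock skew taken from the RFC 1510 text quoted above.
MAX_SKEW = datetime.timedelta(minutes=5)


def check_authenticator_time(server_time, client_time, max_skew=MAX_SKEW):
    """Return the RFC 1510 error name if the clocks differ by more than max_skew."""
    if abs(server_time - client_time) > max_skew:
        return "KRB_AP_ERR_SKEW"
    return "OK"


now = datetime.datetime(2010, 4, 8, 10, 58)
print(check_authenticator_time(now, now + datetime.timedelta(minutes=4)))  # OK
print(check_authenticator_time(now, now - datetime.timedelta(minutes=6)))  # KRB_AP_ERR_SKEW
```

This is why W32time only needs to keep machines within a few minutes of each other: anything tighter is beyond what Kerberos requires.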

With that, I want to at least document how to start looking at the settings on Windows. I don't use Windows very often, so I always have to ask others how to do this. Thanks to Jordan for the instructions.

Run -> cmd -> <enter>

```
reg query HKLM\SYSTEM\CurrentControlSet\Services\W32Time\Parameters /s

! REG.EXE VERSION 3.0

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\W32Time\Parameters
    ServiceMain    REG_SZ    SvchostEntry_W32Time
    ServiceDll    REG_EXPAND_SZ    C:\WINDOWS\system32\w32time.dll
    NtpServer    REG_SZ    time.windows.com,0x1
    Type    REG_SZ    NTP
```

```
reg query HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\W32Time\Config

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\W32Time\Config
    LastClockRate    REG_DWORD    0x2625a
    MinClockRate    REG_DWORD    0x260d4
    MaxClockRate    REG_DWORD    0x263e0
    FrequencyCorrectRate    REG_DWORD    0x4
    PollAdjustFactor    REG_DWORD    0x5
    LargePhaseOffset    REG_DWORD    0x138800
    SpikeWatchPeriod    REG_DWORD    0x5a
    HoldPeriod    REG_DWORD    0x5
    MaxPollInterval    REG_DWORD    0xf
    LocalClockDispersion    REG_DWORD    0xa
    EventLogFlags    REG_DWORD    0x2
    PhaseCorrectRate    REG_DWORD    0x1
    MinPollInterval    REG_DWORD    0xa
    UpdateInterval    REG_DWORD    0x57e40
    MaxNegPhaseCorrection    REG_DWORD    0xd2f0
    MaxPosPhaseCorrection    REG_DWORD    0xd2f0
    AnnounceFlags    REG_DWORD    0xa
    MaxAllowedPhaseOffset    REG_DWORD    0x1
```

I don't know the units on these, and it is extra fun that they are in hex. The parameters can be interpreted by reading way down this MS TechNet article: Windows Time Service Tools and Settings. Just make sure to scroll down a ways. (Thanks again Jordan!)
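To save some hex arithmetic, here is a small Python sketch that decodes REG_DWORD lines from the reg query output and converts a few of them to seconds. The unit table is my reading of the TechNet article and should be treated as an assumption to double check: poll intervals as log base 2 of seconds, LargePhaseOffset in units of 100 ns, and the phase correction, spike watch, and allowed offset values in plain seconds. Anything not in the table is reported with unknown units.

```python
# Assumed units, based on my reading of "Windows Time Service Tools and
# Settings"; verify against the article before trusting the conversions.
UNITS = {
    "MinPollInterval": ("log2 seconds", lambda v: 2 ** v),
    "MaxPollInterval": ("log2 seconds", lambda v: 2 ** v),
    "MaxPosPhaseCorrection": ("seconds", lambda v: v),
    "MaxNegPhaseCorrection": ("seconds", lambda v: v),
    "MaxAllowedPhaseOffset": ("seconds", lambda v: v),
    "SpikeWatchPeriod": ("seconds", lambda v: v),
    "LargePhaseOffset": ("100 ns units", lambda v: v / 1e7),
}


def parse_reg_dword(line):
    """Split a 'Name  REG_DWORD  0x...' line from reg query into (name, int)."""
    name, reg_type, value = line.split()
    if reg_type != "REG_DWORD":
        raise ValueError("not a REG_DWORD line: %r" % line)
    return name, int(value, 16)  # int() accepts the 0x prefix with base 16


def describe(name, raw):
    """Human-readable value, converted to seconds where the unit is known."""
    if name not in UNITS:
        return "%s = %d (units unknown)" % (name, raw)
    unit, to_seconds = UNITS[name]
    return "%s = %d (%s) -> %g s" % (name, raw, unit, to_seconds(raw))


sample = """\
    MaxPollInterval    REG_DWORD    0xf
    MinPollInterval    REG_DWORD    0xa
    LargePhaseOffset    REG_DWORD    0x138800
    MaxPosPhaseCorrection    REG_DWORD    0xd2f0
    UpdateInterval    REG_DWORD    0x57e40"""

for line in sample.splitlines():
    print(describe(*parse_reg_dword(line)))
```

If that reading of the units is right, 0xd2f0 for the maximum phase corrections is 54000 seconds (15 hours), and the poll interval ranges from 2^10 = 1024 to 2^15 = 32768 seconds.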

You can also explore these parameters using regedit:

Run -> regedit -> <enter>

The question after that is, how well does Windows XP running Meinberg's NTP software (which I installed) do in setting the clock?

Time keeping and updating on a computer can be affected by the quality of the power and power supply unit, the operating temperature of the oscillator (is it near RAM, graphics chips, or the CPU?), system load (can it service all the interrupts quickly?), load on the network delivering the time signal (in the case of NTP), and a number of other parameters.

Next comes a discussion of how well the system can tag time. There will be delays in serial transmission, the system UART, task swapping of the process doing the logging, and how well the logging software performs. Up to the actual logging software, we are likely looking at up to 100 ms (guesstimate) of delay. A nice feature of the ShineMicro receivers is that they can timestamp the message on the receiver itself. I don't have one myself, so I can't comment on the details, but I have seen the USCG NMEA metadata for them.

Then come the Java runtime issues (similar issues are likely to occur with Python). Java is an automatically garbage collected (GC) language, unlike C, where the author of the code has to explicitly allocate and deallocate memory. This means that the Java virtual machine can decide at any moment to stop and collect garbage. This issue has plagued many systems, and NASA spent well over $10M having a realtime LISP system built for the Deep Space 1 probe (DS1) Remote Agent software. There is a paper worth reading that Jordan pointed me to for Java: Validating Java for Safety-Critical Applications by Jean-Marie Dautelle. To quote one of the footnotes:

> Major GCs occur regularly producing up to 90 ms delays in execution time.

The paper shows that timing can be all over the place and that it really needs to be experimentally determined. It matters which JVM is being used, the system priority for the process, the details of how the program creates and deletes objects, whether Just-in-Time (JIT) compilation is being used, and whether it is Real-Time Java.

04.07.2010 21:19

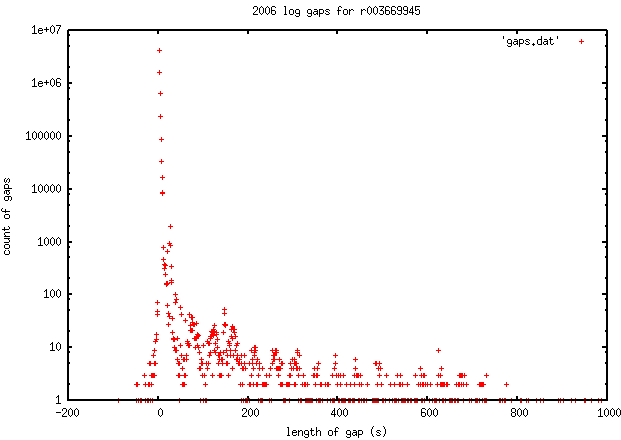

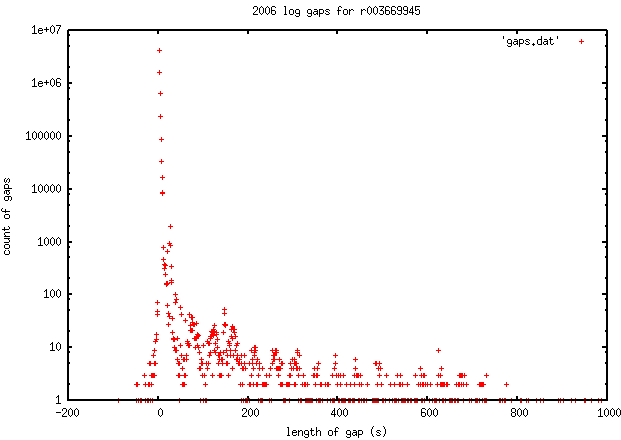

Looking at gaps of time with no messages received for an AIS receiver

I have been looking to create some sort of model of uptime versus downtime with AIS data feeds. I have to base my estimate of downtime on the periods when I do not have timestamped packets of AIS data. There are a good number of reasons that I might not have any AIS messages off the VDL (VHF Data Link):